Anthropic’s Claude 3 family of models, available on Amazon Bedrock, offers multimodal capabilities that enable the processing of images and text. This capability opens up innovative avenues for image understanding, where Anthropic’s Claude 3 models can analyze visual information in conjunction with textual data, facilitating more comprehensive and contextual interpretations. By taking advantage of its multimodal capabilities, we can ask the model questions like “What objects are in the image, and how are they positioned relative to each other?” We can also gain an understanding of data presented in charts and graphs by asking questions related to business intelligence (BI) tasks, such as “What is the sales trend for 2023 for company A in the enterprise market?” These are just a few examples of the additional richness Anthropic’s Claude 3 brings to generative artificial intelligence (AI) interactions.

Architecting specific AWS Cloud solutions involves creating diagrams that show the relationships and interactions between different services. Instead of writing the code manually, you can use Anthropic’s Claude 3’s image analysis capabilities to generate AWS CloudFormation templates by passing an architecture diagram as input.

In this post, we explore some ways you can use Anthropic’s Claude 3 Sonnet’s vision capabilities to accelerate the process of moving from architecture to the prototype stage of a solution.

Use cases for architecture to code

The following are relevant use cases for this solution:

- Converting whiteboarding sessions to AWS infrastructure – To quickly prototype your designs, you can take the architecture diagrams created during whiteboarding sessions and generate the first draft of a CloudFormation template. You can also iterate over the CloudFormation template to develop a well-architected solution that meets all your requirements.

- Fast deployment of architecture diagrams – You can generate boilerplate CloudFormation templates from architecture diagrams you find on the web. This lets you experiment quickly with new designs.

- Streamlined AWS infrastructure design through collaborative diagramming – You might draw architecture diagrams in a diagramming tool during an all-hands meeting. These raw diagrams can generate boilerplate CloudFormation templates, quickly leading to actionable steps while speeding up collaboration and increasing the value of the meeting.

Solution overview

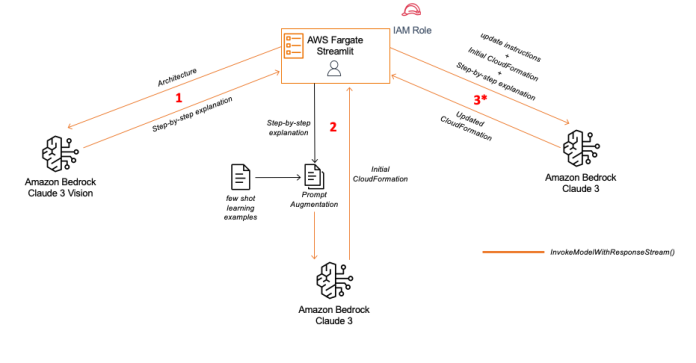

To demonstrate the solution, we use Streamlit to provide an interface for diagrams and prompts. The application calls Anthropic’s Claude 3 Sonnet, which provides multimodal capabilities, through Amazon Bedrock. AWS Fargate is the compute engine for the web application. The preceding diagram illustrates the step-by-step process.

The workflow consists of the following steps:

- The user uploads an architecture image (JPEG or PNG) in the Streamlit application, which invokes the Amazon Bedrock API to generate a step-by-step explanation of the architecture using Anthropic’s Claude 3 Sonnet.

- Anthropic’s Claude 3 Sonnet is invoked with the step-by-step explanation and few-shot learning examples to generate the initial CloudFormation code. The few-shot examples consist of three CloudFormation templates, which help the model understand the writing practices associated with CloudFormation code.

- The user manually provides instructions through the chat interface to update the initial CloudFormation code.

Steps 1 and 2 run once, when the architecture diagram is uploaded. To trigger changes to the CloudFormation code (step 3), provide update instructions from the Streamlit app.
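The three steps can be sketched in Python as a small pipeline. This is a minimal sketch, not the sample application’s code: `architecture_to_template` and the `call_model` callable are hypothetical names, and `call_model` stands in for whatever wrapper sends a prompt (plus optional image bytes) to Amazon Bedrock and returns the text reply.

```python
from typing import Callable, List

# Hypothetical signature: (prompt, image_bytes) -> model reply text.
# An empty bytes object means "no image attached".
ModelCall = Callable[[str, bytes], str]


def architecture_to_template(
    image: bytes,
    few_shot_templates: List[str],
    update_instructions: List[str],
    call_model: ModelCall,
) -> str:
    """Sketch of the workflow: explain the diagram, draft a template, then update it."""
    # Step 1: ask the vision model for a step-by-step explanation of the diagram.
    explanation = call_model(
        "Explain this AWS architecture diagram step by step.", image
    )

    # Step 2: combine the explanation with the reference templates
    # to produce a first-draft CloudFormation template (text only).
    examples = "\n\n".join(few_shot_templates)
    template = call_model(
        f"Reference templates:\n{examples}\n\n"
        f"Write a CloudFormation template for this architecture:\n{explanation}",
        b"",
    )

    # Step 3: apply each instruction from the chat interface in turn.
    for instruction in update_instructions:
        template = call_model(
            f"Current template:\n{template}\n\nUpdate it as follows: {instruction}",
            b"",
        )
    return template
```

Passing the model call in as a function keeps the orchestration testable without AWS credentials; in the real application it would wrap a Bedrock `invoke_model` request.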

The CloudFormation templates generated by the web application are intended for inspiration and not for production use. It is the developer’s responsibility to test and verify the CloudFormation template according to security guidelines.

Few-shot prompting

To help Anthropic’s Claude 3 Sonnet understand the practices of writing CloudFormation code, we use few-shot prompting by providing three CloudFormation templates as reference examples in the prompt. Exposing Anthropic’s Claude 3 Sonnet to multiple CloudFormation templates lets it analyze and learn from the structure, resource definitions, parameter configurations, and other essential elements consistently implemented across your organization’s templates. This enables Anthropic’s Claude 3 Sonnet to grasp your team’s coding conventions, naming conventions, and organizational patterns when generating CloudFormation templates. The following examples used for few-shot learning can be found in the GitHub repo.

Few-shot prompting example 1

Few-shot prompting example 2

Few-shot prompting example 3

Additionally, through few-shot prompting, Anthropic’s Claude 3 Sonnet can observe how different resources and services are configured and integrated within the CloudFormation templates. It gains insight into how to automate the deployment and management of various AWS resources, such as Amazon Simple Storage Service (Amazon S3), AWS Lambda, Amazon DynamoDB, and AWS Step Functions.
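One way to assemble such a few-shot prompt is to wrap each reference template in its own tags before stating the task, so the model can tell where one example ends and the next begins. The function name, tag names, and instruction wording below are assumptions for illustration, not the prompt used in the GitHub repo.

```python
from typing import List


def build_generation_prompt(explanation: str, examples: List[str]) -> str:
    """Assemble a few-shot prompt: reference templates first, then the task."""
    # Number each reference template so the model can distinguish them.
    parts = [
        f'<example index="{i}">\n{template.strip()}\n</example>'
        for i, template in enumerate(examples, start=1)
    ]
    # The task comes last, after the model has "read" the house style.
    parts.append(
        "Follow the structure, naming, and parameter conventions used in the "
        "examples above. Write a CloudFormation template in YAML for this "
        f"architecture:\n<architecture>\n{explanation.strip()}\n</architecture>"
    )
    return "\n\n".join(parts)
```

Putting the examples before the instruction keeps the task description close to the end of the prompt, next to where the model starts generating.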

Inference parameters are preset, but you can change them from the web application if desired. We recommend experimenting with various combinations of these parameters. By default, we set the temperature to zero to reduce the variability of outputs and favor focused, syntactically correct code.
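For Claude 3 models on Amazon Bedrock, inference parameters travel in the JSON request body of the `InvokeModel` call. The sketch below assumes the Anthropic Messages API format (`anthropic_version` set to `bedrock-2023-05-31`); the helper names and the default `max_tokens` value are illustrative choices, not the web application’s actual settings.

```python
import json
from typing import Any, Dict, List, Optional

# Messages API version string used for Anthropic models on Amazon Bedrock.
ANTHROPIC_VERSION = "bedrock-2023-05-31"
CLAUDE_3_SONNET = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_request_body(
    messages: List[Dict[str, Any]],
    temperature: float = 0.0,  # 0 keeps outputs as repeatable as possible
    max_tokens: int = 4096,
    system: Optional[str] = None,
) -> str:
    """Serialize a Claude 3 request with the given inference parameters."""
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")
    body: Dict[str, Any] = {
        "anthropic_version": ANTHROPIC_VERSION,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "messages": messages,
    }
    if system:
        body["system"] = system
    return json.dumps(body)


def invoke(body: str, model_id: str = CLAUDE_3_SONNET) -> str:
    """Call Bedrock and return the concatenated text blocks of the reply."""
    import boto3  # imported here so build_request_body works without the SDK

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(modelId=model_id, body=body)
    payload = json.loads(response["body"].read())
    return "".join(b["text"] for b in payload["content"] if b["type"] == "text")
```

Even at temperature zero, responses are not guaranteed to be identical across calls, so generated templates still need validation.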

Prerequisites

To access Anthropic’s Claude 3 Sonnet foundation model (FM), you must request access through the Amazon Bedrock console. For instructions, see Manage access to Amazon Bedrock foundation models. After requesting access to Anthropic’s Claude 3 Sonnet, you can deploy the development.yaml CloudFormation template to provision the infrastructure for the demo. For instructions on how to deploy this sample, refer to the GitHub repo. The template can be launched in either us-east-1 or us-west-2.

When deploying the template, you have the option to specify the Amazon Bedrock model ID you want to use for inference, so you can choose the model that best suits your needs. By default, the template uses Anthropic’s Claude 3 Sonnet. If you prefer a different model, pass its Amazon Bedrock model ID as a parameter during deployment. Verify beforehand that you have requested access to that model and that it supports the vision (image input) capabilities your use case requires.
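If you deploy from code instead of the console, the model ID can be passed through the `Parameters` argument of the AWS SDK for Python (Boto3) `create_stack` call. This is a sketch under assumptions: the parameter key `BedrockModelId` is hypothetical (check development.yaml for the name it actually declares), and `CAPABILITY_NAMED_IAM` is acknowledged on the assumption that the template creates IAM resources.

```python
from typing import Dict, List

# Bedrock model ID for Anthropic's Claude 3 Sonnet.
DEFAULT_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def stack_parameters(model_id: str = DEFAULT_MODEL_ID) -> List[Dict[str, str]]:
    # "BedrockModelId" is a hypothetical parameter key, not confirmed by the template.
    return [{"ParameterKey": "BedrockModelId", "ParameterValue": model_id}]


def deploy(stack_name: str, template_body: str, model_id: str = DEFAULT_MODEL_ID) -> str:
    """Create the demo stack and return its stack ID."""
    import boto3  # imported lazily so stack_parameters works without the SDK

    cfn = boto3.client("cloudformation")
    response = cfn.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        Parameters=stack_parameters(model_id),
        # Assumes the template defines IAM resources (for example, task roles).
        Capabilities=["CAPABILITY_NAMED_IAM"],
    )
    return response["StackId"]
```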

After you launch the CloudFormation stack, navigate to the stack’s Outputs tab on the AWS CloudFormation console and copy the Amazon CloudFront URL. Enter the URL in your browser to view the web application.
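The same URL can be read programmatically from the response of `describe_stacks`. Because the output key name is not given here, this sketch looks for any output value containing `cloudfront.net`; `find_cloudfront_url` and `get_app_url` are hypothetical helper names.

```python
from typing import Any, Dict, Optional


def find_cloudfront_url(describe_stacks_response: Dict[str, Any]) -> Optional[str]:
    """Return the first stack output whose value looks like a CloudFront URL."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            value = output.get("OutputValue", "")
            # Matching on the domain avoids assuming a specific OutputKey name.
            if "cloudfront.net" in value:
                return value if value.startswith("http") else f"https://{value}"
    return None


def get_app_url(stack_name: str) -> Optional[str]:
    import boto3  # imported lazily so the parser above works without the SDK

    cfn = boto3.client("cloudformation")
    return find_cloudfront_url(cfn.describe_stacks(StackName=stack_name))
```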

In this post, we discuss CloudFormation template generation for three different samples. You can find the sample architecture diagrams in the GitHub repo. These samples are intentionally similar to the few-shot learning examples. As an enhancement to this architecture, you can use a Retrieval Augmented Generation (RAG) approach to retrieve relevant CloudFormation templates from a knowledge base and dynamically augment the prompt.
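To illustrate the retrieval idea, the sketch below ranks a small library of templates by word overlap (Jaccard similarity) with the diagram’s explanation and returns the top k. This is a deliberately simple lexical stand-in: a production RAG setup would more likely use embeddings and a vector store such as a Bedrock knowledge base, and all names here are hypothetical.

```python
import re
from typing import Dict, List, Set


def _tokens(text: str) -> Set[str]:
    # Lowercase alphanumeric words: "AWS::S3::Bucket" -> {"aws", "s3", "bucket"}.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_examples(query: str, library: Dict[str, str], k: int = 3) -> List[str]:
    """Return the names of the k library templates most similar to the query."""
    q = _tokens(query)

    def jaccard(name: str) -> float:
        t = _tokens(library[name])
        union = q | t
        return len(q & t) / len(union) if union else 0.0

    # sorted() is stable, so ties keep the library's original order.
    return sorted(library, key=jaccard, reverse=True)[:k]
```

The selected templates would then take the place of the fixed few-shot examples in the generation prompt.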

Because of the non-deterministic behavior of the large language model (LLM), you might not get the same response as shown in this post.

Let’s generate CloudFormation templates for the following sample architecture diagram.

Uploading the preceding architecture diagram to the web application generates a step-by-step explanation of the diagram using Anthropic’s Claude 3 Sonnet’s vision capabilities.
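Sending the diagram to Claude 3 means placing it in a message as a base64-encoded image content block alongside the text question. This minimal sketch assumes the Anthropic Messages API block format and detects JPEG or PNG from the file’s leading bytes; `image_message` is a hypothetical helper, not a function from the sample repo.

```python
import base64
from typing import Any, Dict

# File signatures ("magic bytes") for the two formats the app accepts.
_MEDIA_TYPES = {b"\x89PNG\r\n\x1a\n": "image/png", b"\xff\xd8\xff": "image/jpeg"}


def image_message(image_bytes: bytes, question: str) -> Dict[str, Any]:
    """Build a Claude 3 Messages API user turn with one image and a question."""
    media_type = next(
        (mt for magic, mt in _MEDIA_TYPES.items() if image_bytes.startswith(magic)),
        None,
    )
    if media_type is None:
        raise ValueError("only JPEG and PNG diagrams are supported")
    return {
        "role": "user",
        "content": [
            # The image block comes first, followed by the text instruction.
            {
                "type": "image",
                "source": {
                    "type": "base64",
                    "media_type": media_type,
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                },
            },
            {"type": "text", "text": question},
        ],
    }
```

The returned dictionary is one entry in the `messages` list of the request body passed to `invoke_model`.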

Let’s analyze the step-by-step explanation. The generated response is divided into three parts:

- The context explains what the architecture diagram depicts.

- The architecture diagram’s flow gives the order in which AWS services are invoked and their relationships with each other.

- A summary of the entire generated response.
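If you want to process the three parts separately (for example, to pass only the flow to the next prompt), one option is to ask the model to wrap each part in tags and parse them out. The tag names `context`, `flow`, and `summary` are an assumption; the post does not say how the web application structures this response.

```python
import re
from typing import Dict

# Hypothetical tag names the explanation prompt would ask the model to use.
SECTIONS = ("context", "flow", "summary")


def split_explanation(response_text: str) -> Dict[str, str]:
    """Extract the three sections from a tagged model response."""
    parts = {}
    for name in SECTIONS:
        match = re.search(rf"<{name}>(.*?)</{name}>", response_text, re.DOTALL)
        # A missing section maps to an empty string instead of raising.
        parts[name] = match.group(1).strip() if match else ""
    return parts
```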

In the following step-by-step explanation, a few errors are highlighted.

The step-by-step explanation is augmented with few-shot learning examples to develop an initial CloudFormation template. Let’s analyze the initial CloudFormation template:

After analyzing the CloudFormation template, we see that the Lambda function code refers to an Amazon Simple Notification Service (Amazon SNS) topic using !Ref SNSTopic, which is not valid inside the function code. We also want to add functionality to the template. First, we want to filter the Amazon S3 notification configuration to invoke Lambda only when *.csv files are uploaded. Second, we want to add metadata to the CloudFormation template. To do this, we use the chat interface to give the following update instructions to the web application:

The updated CloudFormation template is as follows:
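For comparison, here is a hand-written sketch (not the model’s output) of one way to express the requested changes, built as a Python dictionary and serialized to JSON, a format CloudFormation also accepts. It passes the topic ARN to the function through an environment variable, so CloudFormation resolves the `Ref` instead of it appearing inside the function code; it filters S3 notifications to keys ending in `.csv`; and it adds a template-level `Metadata` section. Logical IDs and metadata values are hypothetical, and the IAM role `LambdaRole` is referenced but not shown.

```python
import json

# Inline Lambda source. The topic ARN comes from an environment variable set
# by the template, rather than a !Ref written inside the code itself.
HANDLER_SOURCE = """import os
import boto3

sns = boto3.client("sns")


def handler(event, context):
    topic_arn = os.environ["SNS_TOPIC_ARN"]
    for record in event["Records"]:
        key = record["s3"]["object"]["key"]
        sns.publish(TopicArn=topic_arn, Message=f"New CSV uploaded: {key}")
"""

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "CSV uploads to S3 invoke a Lambda function that notifies SNS",
    # Template-level Metadata accepts arbitrary JSON; these values are placeholders.
    "Metadata": {"Owner": "example-team", "Stage": "prototype"},
    "Resources": {
        "SNSTopic": {"Type": "AWS::SNS::Topic"},
        "ProcessFunction": {
            "Type": "AWS::Lambda::Function",
            "Properties": {
                "Runtime": "python3.12",
                "Handler": "index.handler",
                # LambdaRole is assumed to be defined elsewhere (not shown).
                "Role": {"Fn::GetAtt": ["LambdaRole", "Arn"]},
                "Code": {"ZipFile": HANDLER_SOURCE},
                # Ref on an SNS topic resolves to the topic ARN.
                "Environment": {"Variables": {"SNS_TOPIC_ARN": {"Ref": "SNSTopic"}}},
            },
        },
        # Lets S3 invoke the function. SourceAccount is used because a SourceArn
        # referencing the bucket resource would create a circular dependency.
        "S3InvokePermission": {
            "Type": "AWS::Lambda::Permission",
            "Properties": {
                "Action": "lambda:InvokeFunction",
                "FunctionName": {"Ref": "ProcessFunction"},
                "Principal": "s3.amazonaws.com",
                "SourceAccount": {"Ref": "AWS::AccountId"},
            },
        },
        "UploadBucket": {
            "Type": "AWS::S3::Bucket",
            "DependsOn": "S3InvokePermission",
            "Properties": {
                "NotificationConfiguration": {
                    "LambdaConfigurations": [
                        {
                            "Event": "s3:ObjectCreated:*",
                            "Function": {"Fn::GetAtt": ["ProcessFunction", "Arn"]},
                            # Only object keys ending in .csv trigger the function.
                            "Filter": {"S3Key": {"Rules": [{"Name": "suffix", "Value": ".csv"}]}},
                        }
                    ]
                }
            },
        },
    },
}

template_json = json.dumps(template, indent=2)
```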

Further examples

We have provided two more sample diagrams, the associated CloudFormation code generated by Anthropic’s Claude 3 Sonnet, and the prompts used to create them. They show how diagrams in various forms, from digital to hand-drawn or a combination of both, can be used. The end-to-end analysis of these samples can be found in sample 2 and sample 3 in the GitHub repo.

Best practices for architecture to code

In the demonstrated use case, you can observe how well Anthropic’s Claude 3 Sonnet extracts details and relationships between services from an architecture image. The following are some ways you can improve the performance of Anthropic’s Claude in this use case:

- Implement a multimodal RAG approach to improve the application’s ability to handle a wider variety of complex architecture diagrams, because the current implementation is limited to diagrams similar to the provided static examples.

- Enhance the architecture diagrams with visual cues, such as labeling services, indicating orchestration hierarchy levels, grouping related services at the same level, enclosing services in clear boxes, and labeling arrows to show the flow between services. These additions help the model understand and interpret the diagrams.

- If the application generates an invalid CloudFormation template, provide the error as update instructions. This helps the model understand the error and correct it.

- Use Anthropic’s Claude 3 Opus or Anthropic’s Claude 3.5 Sonnet for better performance and near-perfect recall on long contexts.

- With careful design and management, orchestrate agentic workflows using Agents for Amazon Bedrock. This lets you incorporate self-reflection, tool use, and planning into your workflow to generate more relevant CloudFormation templates.

- Use Amazon Bedrock Prompt Flows to accelerate the creation, testing, and deployment of workflows through an intuitive visual interface. This can reduce development effort and speed up workflow testing.
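The practice of feeding validation errors back as update instructions can be automated with a small loop. In this sketch, `validate` and `revise` are injected callables (hypothetical names). `cfn_validate` shows one possible validator built on the CloudFormation `ValidateTemplate` API, which checks template syntax but not whether the stack will deploy successfully; `revise` would wrap the model call that applies an update instruction.

```python
from typing import Callable, Optional


def generate_valid_template(
    draft: str,
    validate: Callable[[str], Optional[str]],
    revise: Callable[[str, str], str],
    max_attempts: int = 3,
) -> str:
    """Return a template that passes validation, revising it up to max_attempts times.

    validate(template) returns an error message, or None if the template passes.
    revise(template, instruction) returns the model's updated template.
    """
    template = draft
    for attempt in range(max_attempts + 1):
        error = validate(template)
        if error is None:
            return template
        if attempt == max_attempts:
            break
        # The validation error becomes the next update instruction.
        template = revise(
            template, f"The template failed validation with this error: {error}. Fix it."
        )
    raise RuntimeError(f"Template still invalid after {max_attempts} revisions: {error}")


def cfn_validate(template: str) -> Optional[str]:
    """Syntax-level check using the CloudFormation ValidateTemplate API."""
    import boto3
    from botocore.exceptions import ClientError

    try:
        boto3.client("cloudformation").validate_template(TemplateBody=template)
        return None
    except ClientError as exc:
        return exc.response["Error"]["Message"]
```

Capping the number of attempts keeps a stubborn error from looping indefinitely; a linter such as cfn-lint could serve as a stricter `validate`.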

Clean up

To clean up the resources used in this demo, complete the following steps:

- On the AWS CloudFormation console, choose Stacks in the navigation pane.

- Select the deployed development.yaml stack and choose Delete.
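You can also delete the stack with the AWS SDK for Python (Boto3). This sketch takes the client as an argument so it can be exercised without AWS credentials; `delete_demo_stack` is a hypothetical helper, and the stack name in the usage line is hypothetical, so substitute the name you chose at launch.

```python
from typing import Any


def delete_demo_stack(cfn: Any, stack_name: str, wait: bool = True) -> None:
    """Delete the demo stack; cfn is a boto3 CloudFormation client."""
    cfn.delete_stack(StackName=stack_name)
    if wait:
        # Block until CloudFormation reports DELETE_COMPLETE (raises on failure).
        cfn.get_waiter("stack_delete_complete").wait(StackName=stack_name)
```

For example: `delete_demo_stack(boto3.client("cloudformation"), "arch-to-code-demo")`.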

Conclusion

With the pattern demonstrated using Anthropic’s Claude 3 Sonnet, developers can turn their architectural visions into working prototypes by sketching their desired cloud solutions. Anthropic’s Claude 3 Sonnet’s image understanding capabilities analyze these diagrams and generate boilerplate CloudFormation code, reducing the initial coding effort. This visually driven approach supports developers across a range of skill levels, fostering collaboration, rapid prototyping, and accelerated innovation.

You can investigate other patterns, such as RAG and agentic workflows, to improve the accuracy of code generation. You can also explore customizing the LLM by fine-tuning it to write CloudFormation code with greater flexibility.

Now that you have seen Anthropic’s Claude 3 Sonnet in action, try designing your own architecture diagrams using some of these best practices to take your prototyping to the next level.

For additional resources, refer to the following:

About the Authors

Eashan Kaushik is an Associate Solutions Architect at Amazon Web Services. He is driven by creating cutting-edge generative AI solutions while prioritizing a customer-centric approach to his work. Before this role, he obtained an MS in Computer Science from NYU Tandon School of Engineering. Outside of work, he enjoys sports, lifting, and running marathons.

Chris Pecora is a Generative AI Data Scientist at Amazon Web Services. He is passionate about building innovative products and solutions while also focusing on customer-obsessed science. When not running experiments and keeping up with the latest developments in generative AI, he loves spending time with his kids.
