Large enterprises are building strategies to harness the power of generative artificial intelligence (AI) across their organizations. However, scaling up generative AI and making adoption easier for different lines of business (LOBs) comes with challenges. Data privacy and security, legal, compliance, and operational complexities all need to be governed at an organizational level.

The AWS Well-Architected Framework was developed to help large organizations address the challenges of using the cloud. It draws on best practices and guidance that AWS has developed across thousands of customer engagements. AI introduces some unique challenges as well, including managing bias, intellectual property, prompt safety, and data integrity, which are critical considerations when deploying generative AI solutions at scale. Because this is an emerging area, best practices, practical guidance, and design patterns are difficult to find in an easily consumable format. In this post, we use the operational excellence pillar of the AWS Well-Architected Framework as a baseline to share practices and guidelines that we have developed in real-world projects, so you can use AI safely at scale.

Amazon Bedrock plays a pivotal role in this endeavor. It's a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like Anthropic, Cohere, Meta, Mistral AI, and Amazon through a single API. It also provides a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI. You can securely integrate and deploy generative AI capabilities into your applications using services such as AWS Lambda, enabling seamless data management, monitoring, and compliance (for more details, see Monitoring and observability). With Amazon Bedrock, enterprises can achieve the following:

- Scalability – Scale generative AI applications across different LOBs

- Security and compliance – Enforce data privacy, security, and compliance with industry standards and regulations

- Operational efficiency – Streamline operations with built-in tools for monitoring, logging, and automation, aligned with the AWS Well-Architected Framework

- Innovation – Access cutting-edge AI models and continually improve them with real-time data and feedback

This approach enables enterprises to deploy generative AI at scale while maintaining operational excellence, ultimately driving innovation and efficiency across their organizations.

What’s different about operating generative AI workloads and solutions?

The operational excellence pillar of the Well-Architected Framework helps your team spend more of its time building new features that benefit customers. In our case, that means developing generative AI solutions in a safe and scalable manner. Applying a generative AI lens, however, means addressing the intricate challenges and opportunities that arise from the technology's novel nature, including the following:

- Complexity can be unpredictable because large language models (LLMs) generate new content

- Potential intellectual property infringement is a concern because of the lack of transparency in model training data

- Low accuracy in generative AI can produce incorrect or controversial content

- Resource utilization requires a specific operating model to meet the substantial compute needed for training and for large prompt and token sizes

- Continuous learning requires additional data annotation and curation strategies

- Compliance is a rapidly evolving area, where data governance becomes more nuanced and complex and poses new challenges

- Integration with legacy systems requires careful consideration of compatibility, data flow between systems, and potential performance impacts

Any generative AI lens therefore needs to combine the following elements, each with varying levels of prescription and enforcement, to address these challenges and provide the basis for responsible AI usage:

- Policy – The system of principles that guides decisions

- Guardrails – The rules that create boundaries to keep you within the policy

- Mechanisms – The processes and tools

AWS introduced Amazon Bedrock Guardrails as a way to prevent harmful responses from LLMs. It provides an additional layer of safeguards regardless of the underlying FM and is the starting point for responsible AI. However, a more holistic organizational approach is crucial. Generative AI practitioners, data scientists, or developers can potentially use a wide range of technologies, models, and datasets that circumvent the established controls.

As cloud adoption has matured for more traditional IT workloads and applications, a new need has emerged: helping developers select the right cloud solution, one that minimizes corporate risk and simplifies the developer experience. This is often referred to as platform engineering. It can be neatly summarized by the mantra “You (the developer) build and test, and we (the platform engineering team) do all the rest!”

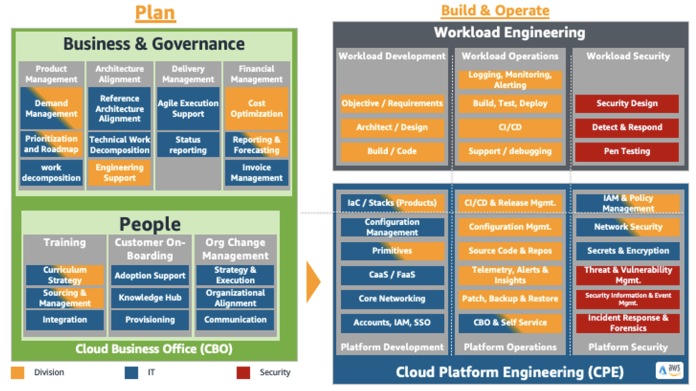

A mature cloud operating model typically contains two parts. A business office generates demand for the cloud, and a platform engineering team provides the supporting services, such as security or DevOps (including CI/CD, observability, and so on), that serve this demand. This structure is illustrated in the diagram.

When this approach is applied to generative AI solutions, these services are expanded to support AI- or machine learning (ML)-specific platform configuration, for example by adding MLOps or prompt safety capabilities.

Where to start?

We start this post by reviewing the foundational operational elements defined by the operational excellence pillar, namely:

- Organize teams around business outcomes: A team's ability to achieve business outcomes comes from leadership vision, effective operations, and a business-aligned operating model. Leadership should be fully invested in and committed to a CloudOps transformation. That transformation needs a suitable cloud operating model that incentivizes teams to operate in the most efficient way and meet business outcomes. The right operating model uses people, process, and technology capabilities to scale, optimize for productivity, and differentiate through agility, responsiveness, and adaptation. The organization's long-term vision is translated into goals that are communicated across the enterprise to stakeholders and consumers of your cloud services. Goals and operational KPIs are aligned at all levels. This practice sustains the long-term value derived from implementing the following design principles.

- Implement observability for actionable insights: Gain a comprehensive understanding of workload behavior, performance, reliability, cost, and health. Establish key performance indicators (KPIs) and use observability telemetry to make informed decisions and act promptly when business outcomes are at risk. Proactively improve performance, reliability, and cost based on actionable observability data.

- Safely automate where possible: In the cloud, you can apply the same engineering discipline that you use for application code to your entire environment. You can define your entire workload and its operations (applications, infrastructure, configuration, and procedures) as code, and update it. You can then automate your workload's operations by initiating them in response to events. You can also make automation safe by configuring guardrails, including rate control, error thresholds, and approvals. Effective automation gives you consistent responses to events, limits human error, and reduces operator toil.

- Make frequent, small, reversible changes: Design workloads that are scalable and loosely coupled so that components can be updated regularly. Automated deployment techniques, combined with smaller, incremental changes, reduce the blast radius and allow faster reversal when failures occur. This increases confidence to deliver beneficial changes to your workload while maintaining quality and adapting quickly to changes in market conditions.

- Refine operations procedures frequently: As you evolve your workloads, evolve your operations accordingly. As you use operations procedures, look for opportunities to improve them. Hold regular reviews to validate that all procedures are effective and that teams are familiar with them. Where gaps are identified, update the procedures and communicate the updates to all stakeholders and teams. Gamify your operations to share best practices and educate teams.

- Anticipate failure: Maximize operational success by driving failure scenarios to understand the workload's risk profile and its impact on your business outcomes. Test the effectiveness of your procedures and your team's response against these simulated failures. Make informed decisions to manage the open risks that your testing identifies.

- Learn from all operational events and metrics: Drive improvement through lessons learned from all operational events and failures. Share what is learned across teams and throughout the organization. Learnings should highlight data and anecdotes on how operations contribute to business outcomes.

- Use managed services: Reduce operational burden by using AWS managed services where possible. Build operational procedures around interactions with those services.

This post covers what a generative AI platform team needs to focus on first as it moves generative AI solutions from a proof of concept or prototype to a production-ready solution. Specifically, we cover how to safely develop, deploy, and monitor models while mitigating operational and compliance risks. Doing so reduces the friction of adopting AI at scale and for production use.

We initially focus on the following design principles:

- Implement observability for actionable insights

- Safely automate where possible

- Make frequent, small, reversible changes

- Refine operations procedures frequently

- Learn from all operational events and metrics

- Use managed services

In the following sections, we explain this using an architecture diagram while diving into the best practices of the control pillar.

Provide control through transparency of models, guardrails, and costs using metrics, logs, and traces

The control pillar of the generative AI framework focuses on observability, cost management, and governance, so that enterprises can deploy and operate their generative AI solutions securely and efficiently. The next diagram illustrates the key components of this pillar.

Observability

Setting up observability lays the foundation for the other two components, namely FinOps and governance. Observability is crucial for monitoring the performance, reliability, and cost-efficiency of generative AI solutions. With AWS services such as Amazon CloudWatch, AWS CloudTrail, and Amazon OpenSearch Service, enterprises can gain visibility into model metrics, usage patterns, and potential issues, which enables proactive management and optimization.

Amazon Bedrock integrates with robust observability features for monitoring and managing ML models and applications. Key metrics published to CloudWatch include invocation counts, latency, client and server errors, throttles, and input and output token counts (for more details, see Monitor Amazon Bedrock with Amazon CloudWatch). You can also use Amazon EventBridge to monitor events related to Amazon Bedrock. EventBridge lets you create rules that invoke specific actions when certain events occur, which makes your observability setup more automated and responsive (for more details, see Monitor Amazon Bedrock). CloudTrail can log all API calls made to Amazon Bedrock by a user, role, or AWS service in an AWS environment. This is particularly useful for tracking access to sensitive resources such as personally identifiable information (PII), model updates, and other critical activities. It helps enterprises maintain a robust audit trail and stay compliant. To learn more, see Log Amazon Bedrock API calls using AWS CloudTrail.
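
As an illustration of the CloudTrail audit trail described above, the following minimal Python sketch counts recent Amazon Bedrock API calls per caller and operation. It assumes a CloudTrail client is passed in (for example, `boto3.client("cloudtrail")`). It uses the `lookup_events` paginator, filtered on the `bedrock.amazonaws.com` event source. `lookup_events` only returns management events from the last 90 days. Any Bedrock operations that CloudTrail records as data events need a trail or a CloudTrail Lake event data store instead. The function names are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def bedrock_trail_query(hours: int = 24) -> dict:
    """Build lookup_events arguments for Amazon Bedrock events in the last `hours`."""
    end = datetime.now(timezone.utc)
    return {
        "LookupAttributes": [
            {"AttributeKey": "EventSource", "AttributeValue": "bedrock.amazonaws.com"}
        ],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
    }


def summarize_bedrock_calls(cloudtrail_client, hours: int = 24) -> Counter:
    """Count Bedrock API calls per (username, event name) across all result pages."""
    counts: Counter = Counter()
    paginator = cloudtrail_client.get_paginator("lookup_events")
    for page in paginator.paginate(**bedrock_trail_query(hours)):
        for event in page.get("Events", []):
            # Username can be absent for some service-initiated events.
            counts[(event.get("Username", "unknown"), event["EventName"])] += 1
    return counts
```

For example, `summarize_bedrock_calls(boto3.client("cloudtrail")).most_common(10)` lists the ten most frequent caller and operation pairs. This is a quick way to spot unexpected principals calling Amazon Bedrock.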

Amazon Bedrock supports the metrics and telemetry needed to implement an observability maturity model for LLMs, which includes the following:

- Capturing and analyzing LLM-specific metrics, such as model performance, prompt properties, and cost metrics, through CloudWatch

- Implementing alerts and incident management tailored to LLM-related issues

- Providing security compliance and robust monitoring mechanisms, because Amazon Bedrock is in scope for common compliance standards and offers automated abuse detection mechanisms

- Using CloudWatch and CloudTrail for anomaly detection, usage and cost forecasting, performance optimization, and resource utilization

- Using AWS forecasting services for better resource planning and cost management
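
For the anomaly detection item in the list above, one option is a CloudWatch anomaly detection alarm. The following sketch builds `put_metric_alarm` arguments for such an alarm. It fires when the `InvocationLatency` metric in the `AWS/Bedrock` namespace rises above the upper edge of an `ANOMALY_DETECTION_BAND` for three consecutive 5-minute periods. The model ID, Amazon SNS topic ARN, band width, and evaluation periods are illustrative assumptions to tune for your workload. The function name is hypothetical.

```python
def latency_anomaly_alarm(model_id: str, sns_topic_arn: str, band_width: float = 2.0) -> dict:
    """Return put_metric_alarm kwargs for an anomaly-detection alarm on Bedrock latency."""
    return {
        "AlarmName": f"bedrock-latency-anomaly-{model_id}",
        "AlarmDescription": "InvocationLatency is above its expected band",
        "ComparisonOperator": "GreaterThanUpperThreshold",
        "EvaluationPeriods": 3,
        "ThresholdMetricId": "band",  # the "latency" series is compared against this band
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
        "Metrics": [
            {
                "Id": "latency",
                "ReturnData": True,
                "MetricStat": {
                    "Metric": {
                        "Namespace": "AWS/Bedrock",
                        "MetricName": "InvocationLatency",
                        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
                    },
                    "Period": 300,
                    "Stat": "Average",
                },
            },
            {
                "Id": "band",
                "ReturnData": True,
                # Band width is in standard deviations around the learned baseline.
                "Expression": f"ANOMALY_DETECTION_BAND(latency, {band_width})",
                "Label": "Expected InvocationLatency",
            },
        ],
    }
```

You would pass the result to `boto3.client("cloudwatch").put_metric_alarm(**latency_anomaly_alarm(model_id, topic_arn))`. A band-based alarm is used here instead of a fixed threshold because LLM latency varies with prompt and output length. A static limit therefore tends to be either noisy or too loose.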

CloudWatch is a unified monitoring and observability service that collects logs, metrics, and events from AWS services and on-premises sources. Enterprises can use it to track KPIs for their generative AI models, such as I/O volumes, latency, and error rates. You can use CloudWatch dashboards to create custom visualizations and alerts, so teams are quickly notified of any anomalies or performance degradation.
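
The following is a minimal sketch of such a dashboard, assuming a single model ID and AWS Region (both placeholders). The function returns a `DashboardBody` JSON string with one time-series widget for each Bedrock runtime metric, laid out two per row. The function name, widget layout, and 5-minute period are illustrative choices, not a prescribed layout.

```python
import json

BEDROCK_METRICS = ["Invocations", "InvocationLatency", "InvocationClientErrors",
                   "InvocationServerErrors", "InvocationThrottles"]


def bedrock_dashboard_body(model_id: str, region: str) -> str:
    """Return a CloudWatch dashboard body with one metric widget per Bedrock metric."""
    widgets = []
    for i, metric in enumerate(BEDROCK_METRICS):
        widgets.append({
            "type": "metric",
            "x": (i % 2) * 12,  # two 12-unit-wide widgets per row
            "y": (i // 2) * 6,
            "width": 12,
            "height": 6,
            "properties": {
                "title": metric,
                "region": region,
                "metrics": [["AWS/Bedrock", metric, "ModelId", model_id]],
                # Latency reads best as an average; counts and errors as totals.
                "stat": "Average" if metric == "InvocationLatency" else "Sum",
                "period": 300,
                "view": "timeSeries",
            },
        })
    return json.dumps({"widgets": widgets})
```

To create or overwrite the dashboard, call `boto3.client("cloudwatch").put_dashboard(DashboardName="genai-bedrock", DashboardBody=bedrock_dashboard_body(model_id, "us-east-1"))`. The dashboard name is hypothetical.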

For more advanced observability requirements, enterprises can use Amazon OpenSearch Service, a fully managed service for deploying, operating, and scaling OpenSearch clusters. OpenSearch Dashboards provides powerful search and analytics capabilities, so teams can dive deeper into generative AI model behavior, user interactions, and system-wide metrics.

Additionally, you can enable model invocation logging. It collects invocation logs, full request and response data, and metadata for all Amazon Bedrock model API invocations in your AWS account. Before you can enable invocation logging, you need to set up an Amazon Simple Storage Service (Amazon S3) or CloudWatch Logs destination. You can enable invocation logging through either the AWS Management Console or the API. By default, logging is disabled.
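
Invocation logging can be enabled from code as well as from the console. The following sketch assumes an Amazon Bedrock control plane client (`boto3.client("bedrock")`, not `bedrock-runtime`). It also assumes an existing S3 bucket whose bucket policy already allows Amazon Bedrock to write to it. The bucket name, key prefix, and function names are hypothetical. The sketch delivers text request and response data only, then reads the configuration back to confirm that it took effect.

```python
def invocation_logging_config(bucket: str, key_prefix: str = "bedrock-invocations/") -> dict:
    """Return put_model_invocation_logging_configuration kwargs for an S3 destination."""
    return {
        "loggingConfig": {
            "s3Config": {"bucketName": bucket, "keyPrefix": key_prefix},
            # Deliver text request/response bodies; leave image and embedding data off.
            "textDataDeliveryEnabled": True,
            "imageDataDeliveryEnabled": False,
            "embeddingDataDeliveryEnabled": False,
        }
    }


def enable_invocation_logging(bedrock_client, bucket: str,
                              key_prefix: str = "bedrock-invocations/") -> dict:
    """Turn on invocation logging and return the configuration Bedrock now reports."""
    bedrock_client.put_model_invocation_logging_configuration(
        **invocation_logging_config(bucket, key_prefix)
    )
    return bedrock_client.get_model_invocation_logging_configuration()
```

Invocation logs contain full prompts and completions, so treat the destination bucket as sensitive data. Restrict access to it, and apply the same retention and encryption rules that govern the source data.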

Cost management and optimization (FinOps)

Generative AI solutions can quickly scale and consume significant cloud resources, so a robust FinOps practice is essential. With services like AWS Cost Explorer and AWS Budgets, enterprises can track their usage and optimize their generative AI spending for cost-effective deployment and scaling.
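
As a minimal AWS Budgets sketch, the function below returns `create_budget` arguments for a monthly cost budget on Amazon Bedrock. The budget sends an email alert when forecasted spend passes a percentage of the limit. The budget name, limit, email address, and 80% threshold are placeholders. The `"Amazon Bedrock"` service filter value is an assumption. Third-party model usage may be billed under separate AWS Marketplace service names, so check which service names appear in your own billing data before relying on the filter.

```python
def bedrock_budget(name: str, monthly_limit_usd: float, email: str,
                   alert_pct: float = 80.0) -> dict:
    """Return create_budget kwargs (everything except AccountId) for a Bedrock cost budget."""
    return {
        "Budget": {
            "BudgetName": name,
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
            # Assumed service name; confirm it against your Cost Explorer data.
            "CostFilters": {"Service": ["Amazon Bedrock"]},
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "FORECASTED",  # warn before the limit is reached
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": alert_pct,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
            }
        ],
    }
```

`boto3.client("budgets").create_budget(AccountId=account_id, **bedrock_budget("genai-retail", 500, "finops@example.com"))` would create the budget. One budget per LOB gives each business owner its own early warning.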

Cost Explorer provides detailed cost analysis and forecasting capabilities, so you can understand your tenant-related expenditures, identify cost drivers, and plan for future growth. Teams can create custom cost allocation reports, set custom budgets and alerts using AWS Budgets, and explore cost trends over time.
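
To see tenant-related expenditure, you can group costs by a cost allocation tag. This sketch assumes that a tag key such as `lob` (hypothetical) has been applied to your Bedrock resources and activated as a cost allocation tag. It calls the Cost Explorer `get_cost_and_usage` API through a client passed in (for example, `boto3.client("ce")`) and sums `UnblendedCost` per tag value. The same `"Amazon Bedrock"` service-name assumption applies here. Pagination through `NextPageToken` is omitted for brevity.

```python
def bedrock_cost_query(start: str, end: str, tag_key: str) -> dict:
    """Return get_cost_and_usage kwargs: monthly Bedrock cost grouped by a cost allocation tag."""
    return {
        "TimePeriod": {"Start": start, "End": end},  # ISO dates; End is exclusive
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "Filter": {"Dimensions": {"Key": "SERVICE", "Values": ["Amazon Bedrock"]}},
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
    }


def cost_by_tenant(ce_client, start: str, end: str, tag_key: str = "lob") -> dict:
    """Sum UnblendedCost per tag value over the period (first result page only)."""
    resp = ce_client.get_cost_and_usage(**bedrock_cost_query(start, end, tag_key))
    totals: dict = {}
    for period in resp["ResultsByTime"]:
        for group in period.get("Groups", []):
            # Keys look like "lob$retail"; an empty value after "$" means untagged.
            tenant = group["Keys"][0].split("$", 1)[-1] or "untagged"
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[tenant] = totals.get(tenant, 0.0) + amount
    return totals
```

For example, `cost_by_tenant(boto3.client("ce"), "2024-06-01", "2024-07-01")` returns one total per LOB for June. It also returns an `untagged` bucket that shows how much spend is not yet attributed to any LOB.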

Analyzing the cost and performance of generative AI models is crucial for making informed decisions about model deployment and optimization. EventBridge, CloudTrail, and CloudWatch provide the tools to track and analyze these metrics, which supports data-driven decisions. With this information, you can identify optimization opportunities, such as scaling down under-utilized resources.

With EventBridge, you can configure automatic responses to status change events in Amazon Bedrock. This helps you handle API rate limit issues, API updates, and the reduction of surplus compute resources. For more details, see Monitor Amazon Bedrock events in Amazon EventBridge.
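
The following is a minimal sketch of that wiring. It assumes an EventBridge client (`boto3.client("events")`) and an existing target, such as an AWS Lambda function or SNS topic, that already grants EventBridge permission to invoke it. The rule matches events whose `source` is `aws.bedrock`. Narrowing by `detail-type` is optional. Take any detail-type string you pass (for example, one for model customization job state changes) from the Amazon Bedrock EventBridge documentation rather than from this sketch. The rule name, target ID, and function names are hypothetical.

```python
import json


def bedrock_event_pattern(detail_types=None) -> str:
    """Match events whose source is Amazon Bedrock, optionally narrowed by detail-type."""
    pattern = {"source": ["aws.bedrock"]}
    if detail_types:
        pattern["detail-type"] = list(detail_types)
    return json.dumps(pattern)


def route_bedrock_events(events_client, rule_name: str, target_arn: str,
                         detail_types=None) -> str:
    """Create (or update) an EventBridge rule and send matching events to one target."""
    rule = events_client.put_rule(
        Name=rule_name,
        EventPattern=bedrock_event_pattern(detail_types),
        State="ENABLED",
        Description="Route Amazon Bedrock state-change events",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "bedrock-handler", "Arn": target_arn}],
    )
    return rule["RuleArn"]
```

`put_rule` and `put_targets` update an existing rule or target that has the same name or ID. The function can therefore be re-run from a deployment pipeline without creating duplicates.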

As discussed in the previous section, CloudWatch can monitor Amazon Bedrock, collecting raw data and processing it into readable, near real-time metrics, such as token counts, that you can use to track cost. You can graph the metrics in the CloudWatch console. You can also set alarms that watch for certain thresholds and send notifications or take actions when values exceed them. For more information, see Monitor Amazon Bedrock with Amazon CloudWatch.
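
CloudWatch reports token counts rather than dollars, so a cost estimate has to be derived from them. The sketch below assumes a CloudWatch client is passed in (for example, `boto3.client("cloudwatch")`). It uses `get_metric_data` to sum the `InputTokenCount` and `OutputTokenCount` metrics for one model over a time window, then multiplies the totals by per-1,000-token prices that you supply. The prices are caller-provided placeholders, not published rates. The estimate covers on-demand usage only, and `NextToken` pagination is not handled.

```python
from datetime import datetime, timedelta, timezone


def _token_query(query_id: str, metric: str, model_id: str) -> dict:
    """One hourly Sum query for a Bedrock token metric, filtered to a single model."""
    return {
        "Id": query_id,
        "MetricStat": {
            "Metric": {
                "Namespace": "AWS/Bedrock",
                "MetricName": metric,
                "Dimensions": [{"Name": "ModelId", "Value": model_id}],
            },
            "Period": 3600,
            "Stat": "Sum",
        },
    }


def estimate_token_cost(cw_client, model_id: str, usd_per_1k_input: float,
                        usd_per_1k_output: float, hours: int = 24) -> float:
    """Estimate spend from CloudWatch token counts and caller-supplied per-1K-token prices."""
    end = datetime.now(timezone.utc)
    resp = cw_client.get_metric_data(
        MetricDataQueries=[
            _token_query("tin", "InputTokenCount", model_id),
            _token_query("tout", "OutputTokenCount", model_id),
        ],
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
    )
    totals = {r["Id"]: sum(r["Values"]) for r in resp["MetricDataResults"]}
    return (totals.get("tin", 0.0) / 1000 * usd_per_1k_input
            + totals.get("tout", 0.0) / 1000 * usd_per_1k_output)
```

For example, `estimate_token_cost(cw, model_id, price_in, price_out)` gives an approximate spend for the last 24 hours. Comparing the result with Cost Explorer data later helps confirm that the per-token prices you used are right.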

Governance

Robust governance measures, including continuous evaluation and multi-layered guardrails, are fundamental to deploying generative AI solutions responsibly and effectively in enterprise environments. Let's look at them one by one:

- Performance monitoring and evaluation – Continuously evaluating the performance, safety, and compliance of generative AI models is critical. You can achieve this in several ways:

  - Enterprises can use AWS services like Amazon SageMaker Model Monitor, Amazon Bedrock Guardrails, or Amazon Comprehend to monitor model behavior and detect drift. These services help confirm that generative AI solutions perform as expected (or better) and adhere to organizational policies.

  - You can deploy open-source evaluation frameworks like RAGAS as custom metrics to check that LLM responses are grounded, mitigate bias, and detect and reduce hallucinations.

  - Model evaluation jobs let you compare model outputs and choose the model best suited to your use case. The job can be automated against a ground truth, or you can use human evaluators to bring in subject-matter expertise. You can also use FMs from Amazon Bedrock to evaluate your applications. To learn more about this approach, refer to Evaluate the reliability of Retrieval Augmented Generation applications using Amazon Bedrock.

- Guardrails – Generative AI solutions should include robust, multi-level guardrails to enforce responsible AI and oversight:

  - First, you need guardrails around the LLM to mitigate bias-related risks and safeguard the application with responsible AI policies. You can set these up with Amazon Bedrock Guardrails. It lets you place custom guardrails around a model (an FM or a fine-tuned model) by configuring denied topics, content filters, and blocked messaging.

  - The second level is to set guardrails around the framework for each use case. This includes access controls, data governance policies, and proactive monitoring and alerting, so that sensitive information is properly secured and monitored. For example, you can use AWS data analytics services such as Amazon Redshift for data warehousing, AWS Glue for data integration, and Amazon QuickSight for business intelligence (BI).

- Compliance measures – Enterprises need a robust compliance framework that meets regulatory requirements and standards such as GDPR, CCPA, or industry-specific standards. Such a framework keeps generative AI solutions secure, compliant, and efficient in handling sensitive information across different use cases. It also minimizes the risk of data breaches or unauthorized data access, protecting the integrity and confidentiality of critical data assets. Enterprises can take the following organization-level actions to create a comprehensive governance structure:

  - Establish a clear incident response plan for addressing compliance breaches or AI system malfunctions.

  - Conduct periodic compliance assessments and third-party audits to identify and address potential risks or violations.

  - Provide ongoing training to employees on compliance requirements and best practices in AI governance.

- Model transparency – Although full transparency in generative AI models remains difficult to achieve, organizations can take several steps to enhance model transparency and explainability:

  - Provide model cards that describe the model's intended use, performance, capabilities, and potential biases.

  - Ask the model to self-explain, that is, to provide explanations for its own decisions. This also works in complex systems; for example, agents can perform multi-step planning and improve through self-explanation.
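
For the first guardrail layer described above, you can create and version a guardrail programmatically. The following sketch assumes an Amazon Bedrock control plane client (`boto3.client("bedrock")`). The guardrail name, denied topic, topic definition, filter strengths, and blocked messages are illustrative. The guardrail denies one topic and enables the standard content filters at high strength. It also adds prompt-attack detection on inputs, whose output strength must be `NONE`. Finally, the sketch publishes a numbered version that applications can pin.

```python
def guardrail_request(name: str, denied_topic: str, topic_definition: str) -> dict:
    """Return create_guardrail kwargs with one denied topic and baseline content filters."""
    filters = [
        {"type": t, "inputStrength": "HIGH", "outputStrength": "HIGH"}
        for t in ("HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT")
    ]
    # Prompt-attack filtering applies to inputs only, so its output strength must be NONE.
    filters.append({"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"})
    return {
        "name": name,
        "description": "Baseline responsible AI guardrail for one line of business",
        "topicPolicyConfig": {
            "topicsConfig": [
                {"name": denied_topic, "definition": topic_definition, "type": "DENY"}
            ]
        },
        "contentPolicyConfig": {"filtersConfig": filters},
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't provide that response.",
    }


def create_published_guardrail(bedrock_client, request: dict) -> tuple:
    """Create the guardrail, then publish an immutable version that applications can pin."""
    created = bedrock_client.create_guardrail(**request)
    version = bedrock_client.create_guardrail_version(guardrailIdentifier=created["guardrailId"])
    return created["guardrailId"], version["version"]
```

Applications then pass the returned ID and version through the `guardrailConfig` parameter of the Converse API, or evaluate text directly with the `ApplyGuardrail` API. Either way, the same policy applies regardless of which FM sits behind the application.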

Automate model lifecycle management with LLMOps or FMOps

Implementing LLMOps is crucial for efficiently managing the lifecycle of generative AI models at scale. LLMOps is a subset of FMOps. For an explanation of the concept and its key differences from MLOps, see FMOps/LLMOps: Operationalize generative AI and differences with MLOps. That post covers the development lifecycle of a generative AI application and the additional skills, processes, and technologies needed to operationalize generative AI applications.

Manage data through standard methods of data ingestion and use

Enriching LLMs with new data is essential for them to provide more contextual answers without extensive fine-tuning or the overhead of building a dedicated corporate LLM. Managing data ingestion, extraction, transformation, cataloging, and governance is a complex, time-consuming process that must align with corporate data policies and governance frameworks.

AWS provides several services to support this; the following diagram illustrates them at a high level. For a more detailed description, see Scaling AI and Machine Learning Workloads with Ray on AWS and Build a RAG data ingestion pipeline for large scale ML workloads.

This workflow includes the following steps:

- Data can be securely transferred to AWS using either custom or existing tools or AWS Transfer Family. You can use AWS Identity and Access Management (IAM) and AWS PrivateLink to control and secure access to data and generative AI resources. This keeps data within the organization's boundaries and in line with the relevant regulations.

- When the data is in Amazon S3, you can use AWS Glue to extract and transform it (for example, into Parquet format) and to store metadata about the ingested data, which facilitates data governance and cataloging.

- The third component is the GPU cluster, which could be a Ray cluster. You can use orchestration engines such as AWS Step Functions, Amazon SageMaker Pipelines, or AWS Batch to run the jobs (or create pipelines) that create embeddings and ingest the data into a data store or vector store.

- Embeddings can be stored in a vector store such as OpenSearch for efficient retrieval and querying. Alternatively, you can use a solution such as Amazon Bedrock Knowledge Bases to ingest data from Amazon S3 or other data sources, enabling seamless integration with generative AI solutions.

- You can use Amazon DataZone to manage access control to the raw data stored in Amazon S3 and to the vector store, enforcing role-based or fine-grained access control for data governance.

- Where you need a semantic understanding of your data, you can use Amazon Kendra for intelligent enterprise search. Amazon Kendra has built-in ML capabilities and integrates easily with data sources like Amazon S3, which makes it adaptable to different organizational needs.
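
To make the embedding step in this workflow concrete, the following sketch splits a document into overlapping character windows and embeds each one with an Amazon Titan text embeddings model through `invoke_model`. It assumes a `bedrock-runtime` client is passed in and that the model ID shown is enabled in your Region. The chunk size and overlap are illustrative. Fixed-size character chunking is a simple stand-in for the token-aware or structure-aware chunking that many pipelines use. Writing the vectors to an OpenSearch k-NN index is not shown, and neither is letting Amazon Bedrock Knowledge Bases manage chunking and storage.

```python
import json


def chunk_text(text: str, size: int = 1000, overlap: int = 200) -> list:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    if not text:
        return []
    step = size - overlap
    # The last window starts early enough that start + size reaches the end of the text.
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def embed_chunks(runtime_client, chunks: list,
                 model_id: str = "amazon.titan-embed-text-v2:0") -> list:
    """Call a Titan text embeddings model once per chunk and return the vectors."""
    vectors = []
    for chunk in chunks:
        resp = runtime_client.invoke_model(
            modelId=model_id,
            body=json.dumps({"inputText": chunk}),
            contentType="application/json",
            accept="application/json",
        )
        vectors.append(json.loads(resp["body"].read())["embedding"])
    return vectors
```

In a Ray or AWS Batch job, each worker would run `embed_chunks(runtime, chunk_text(doc))` over its share of documents. The 200-character overlap keeps sentences that straddle a chunk boundary retrievable from either neighboring chunk.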

Which components to use depends on the specific requirements of the solution. However, there should be one consistent approach to data management, codified into blueprints (discussed in the following section).

Provide managed infrastructure patterns and blueprints for models, prompt catalogs, APIs, and access control guidelines

There are a number of ways to build and deploy a generative AI solution. AWS offers key services such as Amazon Bedrock, Amazon Kendra, and OpenSearch Service, which can be configured to support multiple generative AI use cases, such as text summarization and Retrieval Augmented Generation (RAG).

The simplest approach is to let each team that needs generative AI build its own custom solution on AWS. However, this inevitably increases costs and creates inconsistencies across the organization. A more scalable option is to have a centralized team build standard generative AI solutions, codified into blueprints or constructs, that other teams can deploy and use. This team can provide a platform that abstracts these constructs behind a user-friendly, integrated API. It can also offer additional services such as LLMOps, data management, and FinOps. The following diagram illustrates these options.

Establishing blueprints and constructs for generative AI runtimes, APIs, prompts, and orchestration frameworks such as LangChain and LiteLLM simplifies the adoption of generative AI and increases safe usage overall. Standard APIs that come with access controls, consistent AI controls, and built-in data and cost management make usage simple, cost-efficient, and secure.
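
The following sketch shows what a thin platform API in front of Amazon Bedrock might look like. It is a hypothetical design, not an AWS construct. A per-tenant policy lists the models an LOB may use and the guardrail version it must run under. Every call goes through the Converse API with that guardrail attached, and the token usage reported in each response is metered per tenant for chargeback. The `TenantPolicy` and `GenAIGateway` names are illustrative, and the runtime client is assumed to be `boto3.client("bedrock-runtime")`.

```python
from dataclasses import dataclass, field


@dataclass
class TenantPolicy:
    """Per-LOB settings owned by the platform team (hypothetical structure)."""
    allowed_models: frozenset
    guardrail_id: str
    guardrail_version: str


@dataclass
class GenAIGateway:
    runtime_client: object  # for example, boto3.client("bedrock-runtime")
    policies: dict          # tenant name -> TenantPolicy
    token_usage: dict = field(default_factory=dict)

    def chat(self, tenant: str, model_id: str, prompt: str) -> str:
        policy = self.policies.get(tenant)
        if policy is None or model_id not in policy.allowed_models:
            raise PermissionError(f"tenant {tenant!r} is not approved for {model_id!r}")
        resp = self.runtime_client.converse(
            modelId=model_id,
            messages=[{"role": "user", "content": [{"text": prompt}]}],
            # Every call runs under the tenant's published guardrail version.
            guardrailConfig={
                "guardrailIdentifier": policy.guardrail_id,
                "guardrailVersion": policy.guardrail_version,
            },
        )
        # Meter usage per tenant for FinOps chargeback.
        used = resp["usage"]["totalTokens"]
        self.token_usage[tenant] = self.token_usage.get(tenant, 0) + used
        return resp["output"]["message"]["content"][0]["text"]
```

All teams share this check in one component. Approving a new model or tightening a guardrail is therefore a single configuration change for everyone, rather than a change in each team's code.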

For more information about isolating resources in a multi-tenant architecture, and about key isolation patterns for solutions built on AWS, refer to the whitepaper SaaS Tenant Isolation Strategies.
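
One building block for tenant isolation is an identity-based IAM policy, attached to each tenant role, that only allows invocation of approved foundation models. The sketch below generates such a policy document. The Region and model IDs are placeholders. It covers on-demand foundation-model ARNs only. Inference profiles, provisioned throughput, and custom models use different ARN formats and would need additional statements.

```python
import json


def tenant_invoke_policy(region: str, model_ids: list) -> str:
    """Return an IAM policy document allowing invocation of approved foundation models only."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",
                "Effect": "Allow",
                # Converse and ConverseStream are authorized through these same actions.
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                # Foundation-model ARNs leave the account ID field empty.
                "Resource": [f"arn:aws:bedrock:{region}::foundation-model/{m}" for m in model_ids],
            }
        ],
    }, indent=2)
```

Attach the generated policy to each LOB's role. IAM then refuses calls to any model outside the approved list, even if a team bypasses the shared platform API.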

Conclusion

By applying a generative AI lens to the operational excellence pillar of the Well-Architected Framework, enterprises can scale their generative AI initiatives with confidence and build solutions that are secure, cost-effective, and compliant. A standardized skeleton framework for generative AI runtimes, prompts, and orchestration lets your organization integrate generative AI capabilities seamlessly into its existing workflows.

As a next step, establish proactive monitoring and alerting so your enterprise can swiftly detect and mitigate potential issues, such as the generation of biased or harmful output.

Don’t wait. Take a proactive stance toward adopting these best practices. Conduct regular audits of your generative AI systems to maintain ethical AI practices, and invest in training your team on generative AI operational excellence techniques. By taking these actions now, you’ll be well positioned to harness the transformative potential of generative AI while navigating the complexities of this technology wisely.

About the Authors

Akarsha Sehwag is a Data Scientist and ML Engineer in AWS Professional Services with over 5 years of experience building ML-based services and products. Drawing on her expertise in computer vision and deep learning, she helps customers harness the power of ML on the AWS Cloud efficiently. With the advent of generative AI, she has worked with numerous customers to identify good use cases and build them into production-ready solutions. Her diverse interests span development, entrepreneurship, and research.

Malcolm Orr is a principal engineer at AWS with a long history of building platforms and distributed systems using AWS services. He brings a structured, systems view to generative AI and helps define how customers can adopt generative AI safely, securely, and affordably across their organizations.

Tanvi Singhal is a Data Scientist within AWS Professional Services. Her skills and areas of expertise include data science, machine learning, and big data. She supports customers in developing machine learning models and MLOps solutions in the cloud. Before joining AWS, she was a consultant in industries such as transportation networking, retail, and financial services. She is passionate about enabling customers on their data and AI journey to the cloud.

Zorina Alliata is a Principal AI Strategist who works with global customers to find solutions that speed up operations and enhance processes using artificial intelligence and machine learning. Zorina helps companies across several industries identify strategies and tactical execution plans for their AI use cases, platforms, and AI-at-scale implementations.
