In today’s digital age, social media has revolutionized the way brands interact with their consumers, creating a need for dynamic and engaging content that resonates with their target audience. Competition for consumer attention in this space is growing, and content creators and influencers face constant challenges to produce new, engaging, and brand-consistent content. The challenges come from three key factors: the need for rapid content production, the desire for personalized content that is both captivating and visually appealing and reflects the unique interests of the consumer, and the necessity for content that is consistent with a brand’s identity, messaging, aesthetics, and tone.

Traditionally, the content creation process has been a time-consuming task involving multiple steps such as ideation, research, writing, editing, design, and review. This slow cycle of creation does not fit the rapid pace of social media.

Generative AI offers new possibilities to address this challenge and can be used by content teams and influencers to enhance their creativity and engagement while maintaining brand consistency. More specifically, the multimodal capabilities of large language models (LLMs) allow us to create the rich, engaging content spanning text, image, audio, and video formats that are omnipresent in advertising, marketing, and social media content. With recent advancements in vision LLMs, creators can use visual input, such as reference images, to start the content creation process. Image similarity search and text semantic search further enhance the process by quickly retrieving relevant content and context.

In this post, we walk you through a step-by-step process to create a social media content generator app using vision, language, and embedding models (Anthropic’s Claude 3, Amazon Titan Image Generator, and Amazon Titan Multimodal Embeddings) through the Amazon Bedrock API and Amazon OpenSearch Serverless. Amazon Bedrock is a fully managed service that provides access to high-performing foundation models (FMs) from leading AI companies through a single API. OpenSearch Serverless is a fully managed service that makes it easier to store vectors and other data types in an index and lets you achieve sub-second query latency when searching billions of vectors and measuring semantic similarity.

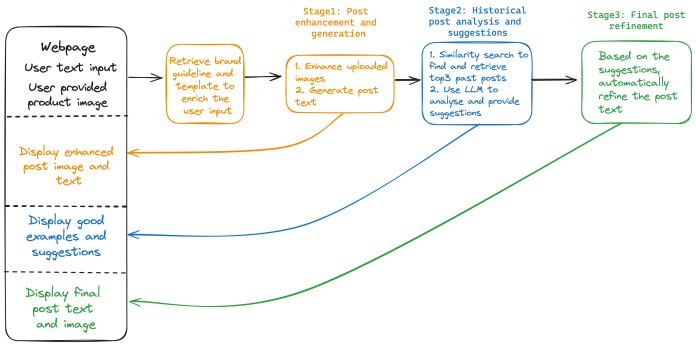

Here’s how the proposed process for content creation works:

- First, the user (content team or marketing team) uploads a product image with a simple background (such as a handbag). Then, they provide natural language descriptions of the scene and enhancements they wish to add to the image as a prompt (such as “Christmas holiday decorations”).

- Next, Amazon Titan Image Generator creates the enhanced image based on the provided scenario.

- Then, we generate rich and engaging text that describes the image while aligning with brand guidelines and tone using Claude 3.

- After the draft (text and image) is created, our solution performs multimodal similarity searches against historical posts to find similar posts and gain inspiration and recommendations to enhance the draft post.

- Finally, based on the generated recommendations, the post text is further refined and provided to the user on the webpage. The following diagram illustrates the end-to-end new content creation process.

Solution overview

In this solution, we start with data preparation, where the raw datasets can be stored in an Amazon Simple Storage Service (Amazon S3) bucket. We provide a Jupyter notebook to preprocess the raw data and use the Amazon Titan Multimodal Embeddings model to convert the image and text into embedding vectors. These vectors are then stored on OpenSearch Serverless as collections, as shown in the following figure.
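As a rough illustration of the embedding step, the following sketch builds a request body for the Titan Multimodal Embeddings model and reads the vector from the response. It is not the notebook’s code: the helper names `build_embedding_request` and `embed` are hypothetical, and the model ID `amazon.titan-embed-image-v1` and the 1024-dimension default are assumptions that you should check against the Amazon Bedrock documentation for your Region.

```python
import base64
import json
from typing import Optional


def build_embedding_request(text: Optional[str] = None,
                            image_bytes: Optional[bytes] = None,
                            dimensions: int = 1024) -> str:
    """Build a JSON request body for a Titan Multimodal Embeddings call.

    At least one of text or image_bytes must be given. Passing both asks
    the model for a single vector that represents the image-text pair.
    """
    if text is None and image_bytes is None:
        raise ValueError("Provide text, an image, or both")
    body = {"embeddingConfig": {"outputEmbeddingLength": dimensions}}
    if text is not None:
        body["inputText"] = text
    if image_bytes is not None:
        # The image is sent as a base64-encoded string
        body["inputImage"] = base64.b64encode(image_bytes).decode("utf-8")
    return json.dumps(body)


def embed(bedrock_client, body: str,
          model_id: str = "amazon.titan-embed-image-v1") -> list:
    """Invoke the model through a boto3 'bedrock-runtime' client.

    The model ID is an assumption; confirm it for your account and Region.
    """
    response = bedrock_client.invoke_model(
        body=body,
        modelId=model_id,
        accept="application/json",
        contentType="application/json",
    )
    return json.loads(response["body"].read())["embedding"]


# Build a request for an image-text pair (placeholder bytes, no AWS call made)
request_body = build_embedding_request(text="red leather handbag",
                                       image_bytes=b"fake-image-bytes")
```

To run it against the service, you would create the client with `boto3.client("bedrock-runtime")` and pass it to `embed` together with `request_body`.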

Next is the content generation. The GUI webpage is hosted using a Streamlit application, where the user can provide an initial product image and a brief description of how they expect the enriched image to look. From the application, the user can also select the brand (which will link to a specific brand template later), choose the image style (such as photographic or cinematic), and select the tone for the post text (such as formal or casual).

After all the configurations are provided, the content creation process, shown in the following figure, is launched.

In stage 1, the solution retrieves the brand-specific template and guidelines from a CSV file. In a production environment, you could maintain the brand template table in Amazon DynamoDB for scalability, reliability, and maintenance. The user input is used to generate the enriched image with the Amazon Titan Image Generator. Together with all the other information, it’s fed into the Claude 3 model, which has vision capability, to generate the initial post text that closely aligns with the brand guidelines and the enriched image. At the end of this stage, the enriched image and initial post text are created and sent back to the GUI to display to users.
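The brand lookup in stage 1 can be pictured with a minimal sketch like the one below. The column names (`brand`, `tone`, `hashtag`, `messaging`) and the two sample rows are made-up placeholders, not the contents of the actual guideline file, and `load_brand_guidelines` is a hypothetical helper.

```python
import csv
import io

# Placeholder data standing in for a brand guideline CSV file.
# Column names and values are illustrative assumptions only.
SAMPLE_CSV = """brand,tone,hashtag,messaging
Luxury Brand,formal,#LuxuryBrand,Timeless elegance and superior quality
Fast Fashion Brand,casual,#FastFashion,Fresh looks at friendly prices
"""


def load_brand_guidelines(csv_file) -> dict:
    """Read guideline rows and index them by brand name."""
    reader = csv.DictReader(csv_file)
    return {row["brand"]: row for row in reader}


guidelines = load_brand_guidelines(io.StringIO(SAMPLE_CSV))
template = guidelines["Luxury Brand"]
print(template["tone"], template["hashtag"])
```

With a real file you would pass `open("path/to/file.csv", newline="")` instead of the in-memory string. Keying the rows by brand keeps the lookup shape close to what a DynamoDB table with the brand as partition key would offer.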

In stage 2, we combine the post text and image and use the Amazon Titan Multimodal Embeddings model to generate the embedding vector. Multimodal embedding models integrate information from different data types, such as text and images, into a unified representation. This enables searching for images using text descriptions, identifying similar images based on visual content, or combining both text and image inputs to refine search results. In this solution, the multimodal embedding vector is used to search and retrieve the top three similar historical posts from the OpenSearch vector store. The retrieved results are fed into Anthropic’s Claude 3 model to generate a caption, provide insights on why these historical posts are engaging, and offer recommendations on how the user can improve their post.
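To make the idea of retrieving the top three similar posts concrete, here is a small brute-force sketch that ranks toy vectors by cosine similarity. It is only an illustration: OpenSearch Serverless performs an approximate kNN search over an index, the similarity metric depends on how the index is configured, and the three-dimensional vectors and post IDs below are invented.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_similar(query, posts, k=3):
    """Return the IDs of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), post_id)
              for post_id, vec in posts.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [post_id for _, post_id in scored[:k]]


# Toy embeddings; real Titan embeddings have hundreds of dimensions
historical_posts = {
    "post_a": [1.0, 0.0, 0.0],
    "post_b": [0.9, 0.1, 0.0],
    "post_c": [0.0, 1.0, 0.0],
    "post_d": [0.7, 0.7, 0.0],
}
draft_embedding = [1.0, 0.05, 0.0]
top_three = top_k_similar(draft_embedding, historical_posts)
print(top_three)
```

Because the draft’s text and image are embedded into the same vector space as the historical posts, a single nearest-neighbor lookup covers both modalities.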

In stage 3, based on the recommendations from stage 2, the solution automatically refines the post text and provides a final version to the user. The user has the flexibility to select the version they like and make changes before publishing. For the end-to-end content generation process, steps are orchestrated with the Streamlit application.

The whole process is shown in the following image:

Implementation steps

This solution has been tested in AWS Region us-east-1. However, it can also work in other Regions where the following services are available. Make sure you have the following set up before moving forward:

We use Amazon SageMaker Studio to generate historical post embeddings and save these embedding vectors to OpenSearch Serverless. Additionally, you will run the Streamlit app from the SageMaker Studio terminal to visualize and test the solution. Testing the Streamlit app in a SageMaker environment is intended for a temporary demo. For production, we recommend deploying the Streamlit app on Amazon Elastic Compute Cloud (Amazon EC2) or Amazon Elastic Container Service (Amazon ECS) with proper security measures such as authentication and authorization.

We use the following models from Amazon Bedrock in the solution. See Model support by AWS Region and select the Region that supports all three models:

- Amazon Titan Multimodal Embeddings Model

- Amazon Titan Image Generator

- Claude 3 Sonnet

Set up a JupyterLab space on SageMaker Studio

A JupyterLab space is a private or shared space within SageMaker Studio that manages the storage and compute resources needed to run the JupyterLab application.

To set up a JupyterLab space

- Sign in to your AWS account and open the AWS Management Console. Go to SageMaker Studio.

- Select your user profile and choose Open Studio.

- From Applications in the top left, choose JupyterLab.

- If you already have a JupyterLab space, choose Run. If you do not, choose Create JupyterLab Space to create one. Enter a name and choose Create Space.

- Change the instance to t3.large and choose Run Space.

- Within a minute, you should see that the JupyterLab space is ready. Choose Open JupyterLab.

- In the JupyterLab launcher window, choose Terminal.

- Run the following command in the terminal to download the sample code from GitHub:

Generate sample posts and compute multimodal embeddings

In the code repository, we provide some sample product images (bag, car, perfume, and candle) that were created using the Amazon Titan Image Generator model. Next, you can generate some synthetic social media posts with the notebook synthetic-data-generation.ipynb by using the following steps. The generated posts’ texts are saved in the metadata.jsonl file (if you prepared your own product images and post texts, you can skip this step). Then, compute multimodal embeddings for the pairs of images and generated texts. Finally, ingest the multimodal embeddings into a vector store on Amazon OpenSearch Serverless.

To generate sample posts

- In JupyterLab, choose File Browser and navigate to the folder social-media-generator/embedding-generation.

- Open the notebook synthetic-data-generation.ipynb.

- Choose the default Python 3 kernel and Data Science 3.0 image, then follow the instructions in the notebook.

- At this stage, you will have sample posts that are created and available in data_mapping.csv.

- Open the notebook multimodal_embedding_generation.ipynb. The notebook first creates the multimodal embeddings for the post-image pair. It then ingests the computed embeddings into a vector store on Amazon OpenSearch Serverless.

- At the end of the notebook, you should be able to perform a simple query to the collection as shown in the following example:

The preparation steps are complete. If you want to try out the solution directly, you can skip to Run the solution with Streamlit App to quickly test the solution in your SageMaker environment. However, if you want a more detailed understanding of each step’s code and explanations, continue reading.

Generate a social media post (image and text) using FMs

In this solution, we use FMs through Amazon Bedrock for content creation. We start by enhancing the input product image using the Amazon Titan Image Generator model, which adds a dynamically relevant background around the target product.

The get_titan_ai_request_body function creates a JSON request body for the Titan Image Generator model, using its Outpainting feature. It accepts four parameters: outpaint_prompt (for example, “Christmas tree, holiday decoration” or “Mother’s Day, flowers, warm lights”), negative_prompt (elements to exclude from the generated image), mask_prompt (specifies areas to retain, such as “bag” or “car”), and image_str (the input image encoded as a base64 string).
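A request body of this kind might look like the following sketch. It reuses the function name and the four parameters described above, but it is a reconstruction, not the repository’s code: the `seed` argument and the values chosen for `outPaintingMode`, `quality`, and `cfgScale` are assumptions.

```python
import json


def get_titan_ai_request_body(outpaint_prompt: str,
                              negative_prompt: str,
                              mask_prompt: str,
                              image_str: str,
                              seed: int = 0) -> str:
    """Build an outpainting request for the Titan Image Generator.

    mask_prompt names the object to keep (for example "bag"); the model
    repaints everything outside that mask according to outpaint_prompt.
    """
    body = {
        "taskType": "OUTPAINTING",
        "outPaintingParams": {
            "text": outpaint_prompt,          # scene to generate
            "negativeText": negative_prompt,  # elements to avoid
            "image": image_str,               # base64-encoded input image
            "maskPrompt": mask_prompt,        # region to retain
            "outPaintingMode": "PRECISE",     # assumed setting
        },
        "imageGenerationConfig": {
            "numberOfImages": 1,
            "quality": "premium",             # assumed setting
            "cfgScale": 8.0,                  # assumed setting
            "seed": seed,
        },
    }
    return json.dumps(body)


request_body = get_titan_ai_request_body(
    outpaint_prompt="Christmas tree, holiday decoration",
    negative_prompt="blurry, low quality",
    mask_prompt="bag",
    image_str="<base64 image string>",  # placeholder, not a real image
)
```

Keeping the mask as a text prompt rather than a mask image means the user only has to name the product, which fits the low-effort upload flow of the app.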

The generate_image function requires model_id and body (the request body from get_titan_ai_request_body). It invokes the model using bedrock.invoke_model and returns the response containing the base64-encoded generated image.
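The invocation can be sketched as follows. Unlike the function described above, this version takes the client as an explicit argument so that it can be exercised with a stand-in; `FakeBedrockClient` is a hypothetical test double, and the model ID `amazon.titan-image-generator-v1` is an assumption to verify for your Region.

```python
import io
import json


def generate_image(bedrock_client, model_id: str, body: str) -> str:
    """Invoke the image model and return the first base64-encoded image."""
    response = bedrock_client.invoke_model(
        body=body,
        modelId=model_id,
        accept="application/json",
        contentType="application/json",
    )
    payload = json.loads(response["body"].read())
    # The response may carry an error message instead of images
    if payload.get("error"):
        raise RuntimeError(f"Image generation failed: {payload['error']}")
    return payload["images"][0]


class FakeBedrockClient:
    """Stand-in for a boto3 'bedrock-runtime' client (illustration only)."""

    def invoke_model(self, **kwargs):
        fake_payload = json.dumps({"images": ["aGVsbG8="]}).encode("utf-8")
        return {"body": io.BytesIO(fake_payload)}


image_b64 = generate_image(FakeBedrockClient(),
                           "amazon.titan-image-generator-v1",  # assumed ID
                           "{}")
```

In a real run, replace `FakeBedrockClient()` with `boto3.client("bedrock-runtime")` and decode the returned string with `base64.b64decode` before displaying or saving the image.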

Finally, the code snippet calls get_titan_ai_request_body with the provided prompts and input image string, then passes the request body to generate_image, resulting in the enhanced image.

The following images showcase the enhanced versions generated based on input prompts like “Christmas tree, holiday decoration, warm lights,” a specific position (such as bottom-middle), and a brand (“Luxury Brand”). These settings influence the output images. If the generated image is unsatisfactory, you can repeat the process until you achieve the desired outcome.

Next, generate the post text, taking into account the user inputs, brand guidelines (provided in the brand_guideline.csv file, which you can replace with your own data), and the enhanced image generated from the previous step.

The generate_text_with_claude function is the higher-level function that handles the image and text input, prepares the necessary data, and calls generate_vision_answer to interact with the Amazon Bedrock model (Claude 3 models) and receive the desired response. The generate_vision_answer function performs the core interaction with the Amazon Bedrock model, processes the model’s response, and returns it to the caller. Together, they enable generating text responses based on combined image and text inputs.
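The core interaction can be sketched with the Anthropic Messages format that Claude 3 models accept on Amazon Bedrock. This is an assumption-laden outline rather than the repository’s implementation: `build_vision_request` and `FakeBedrockClient` are hypothetical, the signature of `generate_vision_answer` is guessed, and the model ID is an assumed Claude 3 Sonnet identifier.

```python
import io
import json


def build_vision_request(prompt: str, image_b64: str,
                         media_type: str = "image/png",
                         max_tokens: int = 1000) -> str:
    """Build a Messages API body with one image block and one text block."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image",
                 "source": {"type": "base64",
                            "media_type": media_type,
                            "data": image_b64}},
                {"type": "text", "text": prompt},
            ],
        }],
    })


def generate_vision_answer(bedrock_client, model_id: str,
                           prompt: str, image_b64: str) -> str:
    """Send the image and prompt to the model and return the reply text."""
    response = bedrock_client.invoke_model(
        modelId=model_id,
        body=build_vision_request(prompt, image_b64),
    )
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]


class FakeBedrockClient:
    """Stand-in client that returns a canned reply (illustration only)."""

    def invoke_model(self, **kwargs):
        reply = {"content": [{"type": "text", "text": "A festive handbag post"}]}
        return {"body": io.BytesIO(json.dumps(reply).encode("utf-8"))}


answer = generate_vision_answer(
    FakeBedrockClient(),
    "anthropic.claude-3-sonnet-20240229-v1:0",  # assumed model ID
    "Write a short post about this product.",
    "aGVsbG8=",  # placeholder base64 data, not a real image
)
```

Placing the image block before the text block lets the prompt refer to the image directly.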

In the following code snippet, an initial post prompt is constructed using formatting placeholders for various elements such as role, product name, target brand, tone, hashtag, copywriting, and brand messaging. These elements are provided in the brand_guideline.csv file to make sure that the generated text aligns with the brand preferences and guidelines. This initial prompt is then passed to the generate_text_with_claude function, along with the enhanced image to generate the final post text.
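A prompt template with such placeholders could look like the sketch below. The wording of the template, the dictionary keys, and the sample values are illustrative assumptions; only the list of elements (role, product name, target brand, tone, hashtag, copywriting, brand messaging) comes from the description above.

```python
# Hypothetical template; the real prompt text in the repository may differ
INITIAL_POST_PROMPT = (
    "You are a {role}. Write a social media post for the product "
    "'{product_name}' of the brand '{target_brand}'. "
    "Use a {tone} tone and include the hashtag {hashtag}. "
    "Follow this copywriting style: {copywriting}. "
    "Reflect this brand messaging: {brand_messaging}. "
    "Describe what is visible in the attached image."
)


def build_initial_prompt(guideline: dict, product_name: str, tone: str) -> str:
    """Fill the template from one brand guideline row plus user choices."""
    return INITIAL_POST_PROMPT.format(
        role=guideline["role"],
        product_name=product_name,
        target_brand=guideline["target_brand"],
        tone=tone,
        hashtag=guideline["hashtag"],
        copywriting=guideline["copywriting"],
        brand_messaging=guideline["brand_messaging"],
    )


# Made-up guideline row for illustration
luxury_guideline = {
    "role": "social media copywriter",
    "target_brand": "Luxury Brand",
    "hashtag": "#LuxuryBrand",
    "copywriting": "short, elegant sentences",
    "brand_messaging": "timeless elegance and superior quality",
}
prompt = build_initial_prompt(luxury_guideline, product_name="bag", tone="formal")
print(prompt)
```

Keeping the brand-specific values in data rather than in the prompt string means a new brand only needs a new row, not a new prompt.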

The following example shows the generated post text. It provides a detailed description of the product, aligns well with the brand guidelines, and incorporates elements from the image (such as the Christmas tree). Additionally, we instructed the model to include hashtags and emojis where appropriate, and the results demonstrate that it followed the prompt instructions effectively.

Post text: Elevate your style with Luxury Brand’s latest masterpiece. Crafted with timeless elegance and superior quality, this exquisite bag embodies exceptional craftsmanship. Indulge in the epitome of sophistication and let it be your constant companion for life’s grandest moments. 🎄✨ #LuxuryBrand #TimelessElegance #ExclusiveCollection

Retrieve and analyze the top three relevant posts

The next step involves using the generated image and text to search for the top three similar historical posts from a vector database. We use the Amazon Titan Multimodal Embeddings model to create embedding vectors, which are stored in Amazon OpenSearch Serverless. The relevant historical posts, which might have many likes, are displayed on the application webpage to give users an idea of what successful social media posts look like. Additionally, we analyze these retrieved posts and provide actionable improvement recommendations for the user. The following code snippet shows the implementation of this step.

The code defines two functions: find_similar_items and process_images. find_similar_items performs semantic search using the k-nearest neighbors (kNN) algorithm on the input image prompt. It computes a multimodal embedding for the image and query prompt, constructs an OpenSearch kNN query, runs the search, and retrieves the top matching images and post texts. process_images analyzes a list of similar images in parallel using multiprocessing. It generates analysis texts for the images by calling generate_text_with_claude with an analysis prompt, running the calls in parallel, and collecting the results.
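The kNN query that find_similar_items constructs might resemble the following sketch. The vector field name `vector_embedding` and the stored fields `image_path` and `post_text` are hypothetical; the real names depend on the index mapping created by the embedding notebook. `build_knn_query` and `extract_hits` are illustrative helpers.

```python
def build_knn_query(embedding, k=3,
                    vector_field="vector_embedding",
                    source_fields=("image_path", "post_text")):
    """Build an OpenSearch kNN query body for the k nearest vectors.

    Field names are assumptions; match them to your index mapping.
    """
    return {
        "size": k,
        "query": {
            "knn": {
                vector_field: {"vector": list(embedding), "k": k}
            }
        },
        # Return only the stored fields needed by the app
        "_source": list(source_fields),
    }


def extract_hits(search_response):
    """Pull the stored documents out of an OpenSearch search response."""
    return [hit["_source"] for hit in search_response["hits"]["hits"]]


query = build_knn_query([0.1, 0.2, 0.3])

# Canned response in the shape OpenSearch returns (illustration only)
fake_response = {"hits": {"hits": [
    {"_score": 0.98, "_source": {"image_path": "a.png", "post_text": "Post A"}},
    {"_score": 0.95, "_source": {"image_path": "b.png", "post_text": "Post B"}},
]}}
similar_posts = extract_hits(fake_response)
```

With the opensearch-py client, the query would typically be sent with `client.search(index=index_name, body=query)`, where `index_name` is whatever index the ingestion step created.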

In the snippet, find_similar_items is called to retrieve the top three similar images and post texts based on the input image and a combined query prompt. process_images is then called to generate analysis texts for the first three similar images in parallel, displaying the results simultaneously.
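The parallel analysis can be approximated with the sketch below. Note the substitution: the text above describes multiprocessing, whereas this example uses a thread pool, which is a common choice for I/O-bound API calls and is simpler to run in a notebook. The `analyze` callback and `fake_analyze` are placeholders for a call such as generate_text_with_claude.

```python
from concurrent.futures import ThreadPoolExecutor


def process_images(similar_items, analyze, max_workers=3):
    """Run analyze() on every item concurrently and keep the input order.

    Uses threads rather than processes (a deliberate simplification).
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the inputs
        return list(pool.map(analyze, similar_items))


def fake_analyze(item):
    """Placeholder for a model call that analyzes one retrieved post."""
    return f"Analysis of {item['image_path']}"


items = [
    {"image_path": "a.png", "post_text": "Post A"},
    {"image_path": "b.png", "post_text": "Post B"},
    {"image_path": "c.png", "post_text": "Post C"},
]
analyses = process_images(items, fake_analyze)
print(analyses)
```

Running the three analyses concurrently means the total wait is close to the slowest single model call rather than the sum of all three.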

An example of historical post retrieval and analysis is shown in the following screenshot. Post images are listed on the left. On the right, the full text content of each post is retrieved and displayed. We then use an LLM to generate a comprehensive scene description for the post image, which can serve as a prompt to inspire image generation. Next, the LLM generates automatic recommendations for improvement. In this solution, we use the Claude 3 Sonnet model for text generation.

As the final step, the solution incorporates the recommendations and refines the post text to make it more appealing and likely to attract more attention from social media users.

Run the solution with Streamlit App

You can download the solution from this Git repository. Use the following steps to run the Streamlit application and quickly test out the solution in your SageMaker Studio environment.

- In SageMaker Studio, choose SageMaker Classic, then start an instance under your user profile.

- After you have the JupyterLab environment running, clone the code repository and navigate to the streamlit-app folder in a terminal:

- You will see a webpage link generated in the terminal, which will look similar to the following:

https://[USER-PROFILE-ID].studio.[REGION].sagemaker.aws/jupyter/default/proxy/8501/

- To check the status of the Streamlit application, run sh status.sh in the terminal.

- To shut down the application, run sh cleanup.sh.

With the Streamlit app downloaded, you can begin by providing initial prompts and selecting the products you want to retain in the image. You have the option to upload an image from your local machine, plug in your camera to take an initial product picture on the fly, or quickly test the solution by selecting a pre-uploaded image example. You can then optionally adjust the product’s location in the image by setting its position. Next, select the brand for the product. In the demo, we use the luxury brand and the fast fashion brand, each with its own preferences and guidelines. Finally, choose the image style. Choose Submit to start the process.

The application will automatically handle post-image and text generation, retrieve similar posts for analysis, and refine the final post. This end-to-end process can take approximately 30 seconds. If you aren’t satisfied with the result, you can repeat the process a few times. An end-to-end demo is shown below.

Inspiration from historical posts using image similarity search

If you find yourself lacking ideas for initial prompts to create the enhanced image, consider using a reverse search approach. During the retrieve and analyze posts step mentioned earlier, scene descriptions are also generated, which can serve as inspiration. You can modify these descriptions as needed and use them to generate new images and accompanying text. This method effectively uses existing content to stimulate creativity and enhance the application’s output.

In the preceding example, the top three similar images to our generated images show perfume pictures posted to social media by users. This insight helps brands understand their target audience and the environments in which their products are used. By using this information, brands can create dynamic and engaging content that resonates with their users. For instance, in the example provided, “a hand holding a glass perfume bottle in the foreground, with a scenic mountain landscape visible in the background,” is unique and visually more appealing than a dull picture of “a perfume bottle standing on a branch in a forest.” This illustrates how capturing the right scene and context can significantly enhance the attractiveness and impact of social media content.

Clean up

When you finish experimenting with this solution, use the following steps to clean up the AWS resources to avoid unnecessary costs:

- Navigate to the Amazon S3 console and delete the S3 bucket and data created for this solution.

- Navigate to the Amazon OpenSearch Service console, choose Serverless, and then select Collection. Delete the collection that was created for storing the historical post embedding vectors.

- Navigate to the Amazon SageMaker console. Choose Admin configurations and select Domains. Select your user profile and delete the running application from Spaces and Apps.

Conclusion

In this blog post, we introduced a multimodal social media content generator solution that uses FMs from Amazon Bedrock, such as the Amazon Titan Image Generator, Claude 3, and Amazon Titan Multimodal Embeddings. The solution streamlines the content creation process, enabling brands and influencers to produce engaging and brand-consistent content rapidly. You can try out the solution using this code sample.

The solution involves enhancing product images with relevant backgrounds using the Amazon Titan Image Generator, generating brand-aligned text descriptions through Claude 3, and retrieving similar historical posts using Amazon Titan Multimodal Embeddings. It provides actionable recommendations to refine content for better audience resonance. This multimodal AI approach addresses challenges in rapid content production, personalization, and brand consistency, empowering creators to boost creativity and engagement while maintaining brand identity.

We encourage brands, influencers, and content teams to explore this solution and use the capabilities of FMs to streamline their content creation processes. Additionally, we invite developers and researchers to build upon this solution, experiment with different models and techniques, and contribute to the advancement of multimodal AI in the realm of social media content generation.

See this announcement blog post for information about the Amazon Titan Image Generator and Amazon Titan Multimodal Embeddings model. For more information, see Amazon Bedrock and Amazon Titan in Amazon Bedrock.

About the Authors

Ying Hou, PhD, is a Machine Learning Prototyping Architect at AWS, specialising in building GenAI applications with customers, including RAG and agent solutions. Her expertise spans GenAI, ASR, Computer Vision, NLP, and time series prediction models. Outside of work, she enjoys spending quality time with her family, getting lost in novels, and hiking in the UK’s national parks.

Bishesh Adhikari is a Senior ML Prototyping Architect at AWS with over a decade of experience in software engineering and AI/ML. Specializing in GenAI, LLMs, NLP, CV, and GeoSpatial ML, he collaborates with AWS customers to build solutions for challenging problems through co-development. His expertise accelerates customers’ journey from concept to production, tackling complex use cases across various industries. In his free time, he enjoys hiking, traveling, and spending time with family and friends.
