In today's rapidly changing world, monitoring the health of our planet's vegetation is more important than ever. Vegetation plays a crucial role in maintaining ecological balance, providing sustenance, and acting as a carbon sink. Historically, monitoring vegetation health has been a daunting task. Methods such as field surveys and manual satellite data analysis are not only time-consuming, but also require significant resources and domain expertise. These cumbersome traditional approaches often lead to delays in data collection and analysis, making it difficult to track and respond quickly to environmental changes. Furthermore, the high costs associated with these methods limit their accessibility and frequency, hindering comprehensive and ongoing vegetation monitoring at a planetary scale. In light of these challenges, we have developed an innovative solution to streamline vegetation monitoring and make it more efficient on a global scale.

Moving beyond the traditional, labor-intensive methods of monitoring vegetation health, Amazon SageMaker geospatial capabilities offer a streamlined, cost-effective solution. Amazon SageMaker supports geospatial machine learning (ML) capabilities, allowing data scientists and ML engineers to build, train, and deploy ML models using geospatial data. These geospatial capabilities open up a new world of possibilities for environmental monitoring. With SageMaker, users can access a wide array of geospatial datasets, efficiently process and enrich this data, and accelerate their development timelines. Tasks that previously took days or even weeks to accomplish can now be done in a fraction of the time.

In this post, we demonstrate the power of SageMaker geospatial capabilities by mapping the world's vegetation in under 20 minutes. This example not only highlights the efficiency of SageMaker, but also shows how geospatial ML can be used to monitor the environment for sustainability and conservation purposes.

Identify areas of interest

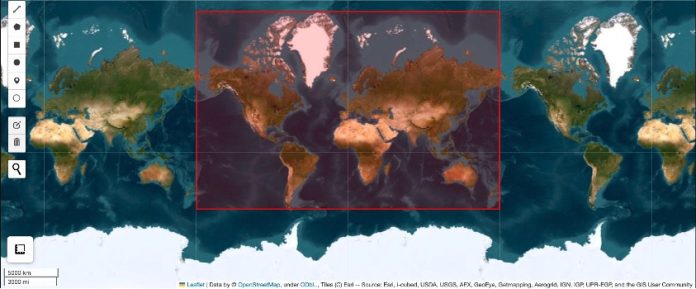

We begin by illustrating how SageMaker can be used to analyze geospatial data at a global scale. To get started, we follow the steps outlined in Getting Started with Amazon SageMaker geospatial capabilities. We start by specifying the geographical coordinates that define a bounding box covering the areas of interest. This bounding box acts as a filter to select only the relevant satellite images that cover the Earth's land masses.
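A bounding box is typically expressed as minimum and maximum longitude and latitude. As a minimal sketch, the following converts such a box into a closed polygon ring of the kind a polygon area-of-interest filter expects: (longitude, latitude) order, counterclockwise, with the first vertex repeated at the end. The helper name `bbox_to_polygon` and the example extent are hypothetical and not taken from the original walkthrough.

```python
from typing import List, Tuple

Coordinate = Tuple[float, float]  # (longitude, latitude)


def bbox_to_polygon(min_lon: float, min_lat: float,
                    max_lon: float, max_lat: float) -> List[List[Coordinate]]:
    """Return a closed, counterclockwise polygon ring for a bounding box.

    Hypothetical helper: coordinates are ordered (longitude, latitude),
    and the first vertex is repeated at the end to close the ring.
    """
    if not (-180 <= min_lon < max_lon <= 180):
        raise ValueError("longitudes must satisfy -180 <= min_lon < max_lon <= 180")
    if not (-90 <= min_lat < max_lat <= 90):
        raise ValueError("latitudes must satisfy -90 <= min_lat < max_lat <= 90")
    ring = [
        (min_lon, min_lat),  # southwest corner
        (max_lon, min_lat),  # southeast corner
        (max_lon, max_lat),  # northeast corner
        (min_lon, max_lat),  # northwest corner
        (min_lon, min_lat),  # back to southwest to close the ring
    ]
    return [ring]


# Example: an illustrative regional extent, not the one used in the post
polygon = bbox_to_polygon(5.0, 45.0, 15.0, 55.0)
```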

Data acquisition

SageMaker geospatial capabilities provide access to a range of public geospatial datasets, including Sentinel-2, Landsat 8, Copernicus DEM, and NAIP. For our vegetation mapping project, we chose Sentinel-2 for its global coverage and update frequency. The Sentinel-2 mission images Earth's land surface at a resolution of up to 10 meters, revisiting each location every 5 days. In this example, we select the first week of December 2023. To make sure we cover most of the visible Earth surface, we filter for images with less than 10% cloud coverage. This way, our analysis is based on clear and reliable imagery.
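The three filters described above (area of interest, time range, and cloud cover) can be assembled into one request. The sketch below assumes the request shape of the boto3 `sagemaker-geospatial` client's `search_raster_data_collection` operation (`Arn` plus a `RasterDataCollectionQuery` with `AreaOfInterest`, `TimeRangeFilter`, and an `EoCloudCover` property filter); verify it against the current API reference before use. The collection ARN is deliberately left as a placeholder, and the helper name and example coordinates are illustrative.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List


def build_search_request(collection_arn: str,
                         ring: List[List[float]],
                         start: datetime,
                         end: datetime,
                         max_cloud_cover: float = 10.0) -> Dict[str, Any]:
    """Assemble keyword arguments for search_raster_data_collection.

    collection_arn is the ARN of the Sentinel-2 raster data collection;
    it can be looked up with list_raster_data_collections and is not hardcoded here.
    ring is a closed list of [longitude, latitude] pairs.
    """
    if end <= start:
        raise ValueError("end must be after start")
    return {
        "Arn": collection_arn,
        "RasterDataCollectionQuery": {
            "AreaOfInterest": {
                "AreaOfInterestGeometry": {
                    "PolygonGeometry": {"Coordinates": [ring]}
                }
            },
            "TimeRangeFilter": {"StartTime": start, "EndTime": end},
            "PropertyFilters": {
                "Properties": [
                    {"Property": {"EoCloudCover": {
                        "LowerBound": 0.0,
                        "UpperBound": max_cloud_cover,  # keep images under 10% cloud
                    }}}
                ],
                "LogicalOperator": "AND",
            },
        },
    }


request = build_search_request(
    collection_arn="arn:aws:sagemaker-geospatial:...",  # placeholder, not a real ARN
    ring=[[5.0, 45.0], [15.0, 45.0], [15.0, 55.0], [5.0, 55.0], [5.0, 45.0]],
    start=datetime(2023, 12, 1, tzinfo=timezone.utc),
    end=datetime(2023, 12, 7, 23, 59, 59, tzinfo=timezone.utc),
)
# With boto3 and credentials configured, the request could be sent as:
#   boto3.client("sagemaker-geospatial").search_raster_data_collection(**request)
```

Keeping request construction separate from the API call makes the filters easy to inspect and test without AWS credentials.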

Using the search_raster_data_collection function from SageMaker geospatial, we identified 8,581 unique Sentinel-2 images taken in the first week of December 2023. To validate our selection, we plotted the footprints of these images on a map, confirming that we had the correct images for our analysis.
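A global query returns more results than fit in a single response, so they arrive in pages. The following sketch, assuming each response carries `Items` (each with an `Id`) and an optional `NextToken`, follows the token until it is exhausted and keeps only the first occurrence of each image ID. `collect_unique_items` and the `FakeClient` stand-in are hypothetical; in practice you would pass a real `boto3.client("sagemaker-geospatial")`.

```python
from typing import Any, Dict, List


def collect_unique_items(client: Any, request: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Page through search_raster_data_collection and drop duplicate item IDs."""
    seen = set()
    items: List[Dict[str, Any]] = []
    kwargs = dict(request)  # shallow copy so the caller's request is not mutated
    while True:
        response = client.search_raster_data_collection(**kwargs)
        for item in response.get("Items", []):
            if item["Id"] not in seen:
                seen.add(item["Id"])
                items.append(item)
        token = response.get("NextToken")
        if not token:
            return items
        kwargs["NextToken"] = token  # request the next page


class FakeClient:
    """Stand-in for the real client: two pages with one duplicate ID."""

    def __init__(self) -> None:
        self.pages = {
            None: {"Items": [{"Id": "a"}, {"Id": "b"}], "NextToken": "t1"},
            "t1": {"Items": [{"Id": "b"}, {"Id": "c"}]},
        }

    def search_raster_data_collection(self, **kwargs: Any) -> Dict[str, Any]:
        return self.pages[kwargs.get("NextToken")]


unique = collect_unique_items(FakeClient(), {"Arn": "arn:example"})
print([item["Id"] for item in unique])  # ['a', 'b', 'c']
```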

SageMaker geospatial processing jobs

When querying data with SageMaker geospatial capabilities, we received comprehensive details about our target images, including the data footprint, properties of the spectral bands, and links for direct access. With these links, we can bypass the traditional memory- and storage-intensive approach of first downloading and then processing images locally, a task made even more daunting by the size and scale of our dataset, which spans over 4 TB. Each of the 8,581 images is large, has multiple channels, and is roughly 500 MB in size. Processing multiple terabytes of data on a single machine would be prohibitively slow. Although setting up a processing cluster is an alternative, it introduces its own set of complexities, from data distribution to infrastructure management. SageMaker geospatial streamlines this with Amazon SageMaker Processing. We use the purpose-built geospatial container with SageMaker Processing jobs for a simplified, managed experience to create and run a cluster. With just a few lines of code, you can scale out your geospatial workloads with SageMaker Processing jobs. You simply specify a script that defines your workload, the location of your geospatial data on Amazon Simple Storage Service (Amazon S3), and the geospatial container. SageMaker Processing provisions the cluster resources for you to run city-, country-, or continent-scale geospatial ML workloads.
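The direct-access links are returned per spectral band. As an illustration, assuming each search result exposes an `Assets` mapping from band name to an object with an `Href` field, and that the red and near-infrared assets are keyed `red` and `nir` (check the key names for your collection), the links an NDVI job needs could be extracted as follows. For scale, 8,581 images at roughly 500 MB each come to about 4.3 TB, consistent with the figure above. The helper name is hypothetical.

```python
from typing import Any, Dict, List, Sequence


def band_links(items: Sequence[Dict[str, Any]],
               bands: Sequence[str] = ("red", "nir")) -> List[Dict[str, str]]:
    """Map each search result to direct links for the requested bands.

    Items missing any requested band are skipped rather than raising.
    The asset key names are assumptions, not confirmed by the original post.
    """
    rows = []
    for item in items:
        assets = item.get("Assets", {})
        if all(band in assets for band in bands):
            row = {"id": item["Id"]}
            row.update({band: assets[band]["Href"] for band in bands})
            rows.append(row)
    return rows


example = [{
    "Id": "S2_example_scene",  # illustrative ID and URLs
    "Assets": {
        "red": {"Href": "https://example.com/B04.tif"},
        "nir": {"Href": "https://example.com/B08.tif"},
    },
}]
print(band_links(example))
```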

For our project, we use 25 clusters, each comprising 20 instances, to scale out our geospatial workload. Next, we divided the 8,581 images into 25 batches for efficient processing, so each batch contains roughly 340 images. These batches are then evenly distributed across the machines in a cluster. All batch manifests are uploaded to Amazon S3, ready for the processing jobs, so that every segment is processed quickly and efficiently.
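The batching arithmetic is simple: 8,581 images over 25 batches gives 343 images per batch with 6 left over, so six batches receive 344. Spread over 20 instances, each instance handles about 17 images. The sketch below shows one way to split a list into near-equal contiguous batches and serialize each as a JSON list of links; the manifest layout, helper names, and example links are assumptions, not the format used in the original post.

```python
import json
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_evenly(items: Sequence[T], n_batches: int) -> List[List[T]]:
    """Split items into contiguous batches whose sizes differ by at most one."""
    if n_batches <= 0:
        raise ValueError("n_batches must be positive")
    base, extra = divmod(len(items), n_batches)
    batches, start = [], 0
    for i in range(n_batches):
        size = base + (1 if i < extra else 0)  # the first `extra` batches get one more
        batches.append(list(items[start:start + size]))
        start += size
    return batches


def manifest_json(links: Sequence[str]) -> str:
    """Serialize one batch as a JSON list of image links (hypothetical format)."""
    return json.dumps(list(links))


links = [f"https://example.com/scene-{i}.tif" for i in range(8581)]
batches = split_evenly(links, 25)
print(len(batches), min(map(len, batches)), max(map(len, batches)))  # 25 343 344
# Each manifest could then be uploaded, for example with
#   boto3.client("s3").put_object(Bucket=..., Key=..., Body=manifest_json(batch))
```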

With our input data ready, we now turn to the core analysis that will reveal insights into vegetation health through the Normalized Difference Vegetation Index (NDVI). NDVI is calculated from the difference between near-infrared (NIR) and red reflectances, normalized by their sum, yielding values that range from -1 to 1. Higher NDVI values signal dense, healthy vegetation, values near zero indicate little or no vegetation, and negative values usually point to water bodies. This index serves as a crucial tool for assessing vegetation health and distribution. The following is an example of what NDVI looks like.
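In symbols, NDVI = (NIR - Red) / (NIR + Red). For Sentinel-2, NIR corresponds to band B08 and red to band B04, both at 10-meter resolution. The NumPy sketch below computes NDVI element-wise on reflectance arrays and returns NaN where both reflectances are zero; that handling of empty pixels is a choice made here to avoid division by zero, since the original post does not say how such pixels were treated.

```python
import numpy as np


def ndvi(nir, red):
    """Element-wise NDVI = (NIR - Red) / (NIR + Red), NaN where the sum is zero."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    # Divide only where the denominator is nonzero; other cells stay NaN.
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


# Vegetation-like, water-like, and empty pixels (illustrative reflectances)
print(ndvi([0.5, 0.1, 0.0], [0.1, 0.5, 0.0]))
```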

Now that we have defined the compute logic, we're ready to start the geospatial SageMaker Processing job. This involves a straightforward three-step process: setting up the compute cluster, defining the computation specifics, and organizing the input and output details.

First, to set up the cluster, we decide on the number and type of instances required for the job, making sure they're well suited for geospatial data processing. The compute environment itself is prepared by selecting a geospatial image that comes with all the commonly used packages for processing geospatial data.
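In terms of the low-level `CreateProcessingJob` API, the cluster and compute environment correspond to the `ProcessingResources.ClusterConfig` and `AppSpecification` fields. The sketch below builds just those two parts. The instance count of 20 matches the per-cluster figure above; the instance type, volume size, script path, and image URI are placeholders chosen for illustration, so substitute the geospatial image URI documented for your Region.

```python
from typing import Any, Dict, List, Optional


def cluster_spec(image_uri: str,
                 instance_count: int = 20,
                 instance_type: str = "ml.m5.4xlarge",
                 volume_gb: int = 100,
                 entrypoint: Optional[List[str]] = None) -> Dict[str, Any]:
    """Build the cluster and container parts of a CreateProcessingJob request."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")
    if entrypoint is None:
        # Hypothetical script location; the script is assumed to be mounted as an input.
        entrypoint = ["python3", "/opt/ml/processing/input/code/ndvi.py"]
    return {
        "ProcessingResources": {
            "ClusterConfig": {
                "InstanceCount": instance_count,
                "InstanceType": instance_type,  # illustrative choice
                "VolumeSizeInGB": volume_gb,
            }
        },
        "AppSpecification": {
            "ImageUri": image_uri,
            "ContainerEntrypoint": entrypoint,
        },
    }


# The image URI is a placeholder for the SageMaker geospatial image in your Region.
spec = cluster_spec("<geospatial-image-uri>")
```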

Next, for the input, we use the previously created manifest that lists all image links. We also designate an S3 location to save our results.
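The input and output locations map to `ProcessingInputs` and `ProcessingOutputConfig` in the same `CreateProcessingJob` API. In the sketch below, the manifest and the processing script are both mounted under `/opt/ml/processing/input`, and results written to `/opt/ml/processing/output` are uploaded to S3 when the job ends. `FullyReplicated` copies the manifest to every instance, on the assumption that the script itself picks each instance's share of the links; the input names, local paths, and S3 URIs are hypothetical.

```python
from typing import Any, Dict


def io_spec(manifest_s3_uri: str, code_s3_uri: str, output_s3_uri: str) -> Dict[str, Any]:
    """Build the input and output parts of a CreateProcessingJob request."""

    def s3_input(name: str, uri: str, local_path: str) -> Dict[str, Any]:
        return {
            "InputName": name,
            "S3Input": {
                "S3Uri": uri,
                "LocalPath": local_path,
                "S3DataType": "S3Prefix",
                "S3InputMode": "File",
                # Every instance receives a full copy of this input.
                "S3DataDistributionType": "FullyReplicated",
            },
        }

    return {
        "ProcessingInputs": [
            s3_input("manifest", manifest_s3_uri, "/opt/ml/processing/input/manifest"),
            s3_input("code", code_s3_uri, "/opt/ml/processing/input/code"),
        ],
        "ProcessingOutputConfig": {
            "Outputs": [
                {
                    "OutputName": "ndvi",
                    "S3Output": {
                        "S3Uri": output_s3_uri,
                        "LocalPath": "/opt/ml/processing/output",
                        # Upload whatever the script wrote once the job finishes.
                        "S3UploadMode": "EndOfJob",
                    },
                }
            ]
        },
    }


io_config = io_spec(
    "s3://example-bucket/manifests/batch-00.json",  # hypothetical bucket and keys
    "s3://example-bucket/code/",
    "s3://example-bucket/ndvi-output/batch-00/",
)
```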

With these components configured, we can launch multiple processing jobs at once and let them run concurrently for efficiency.
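Because `CreateProcessingJob` returns as soon as a job is accepted rather than when it finishes, a plain loop is enough to get all batches running concurrently. The sketch below gives each request a unique, timestamped job name and submits it through an injected `create_job` callable, for example the `create_processing_job` method of a boto3 SageMaker client. The naming scheme and the helper are assumptions for illustration.

```python
import time
from typing import Any, Callable, Dict, List, Optional


def launch_jobs(create_job: Callable[..., Dict[str, Any]],
                requests: List[Dict[str, Any]],
                prefix: str = "ndvi-batch",
                stamp: Optional[str] = None) -> List[str]:
    """Submit one processing job per request and return the job names.

    Each request holds every CreateProcessingJob field except ProcessingJobName.
    """
    stamp = stamp or time.strftime("%Y%m%d-%H%M%S")
    names = []
    for i, req in enumerate(requests):
        name = f"{prefix}-{i:02d}-{stamp}"  # must be unique per account and Region
        create_job(ProcessingJobName=name, **req)  # returns without waiting for the job
        names.append(name)
    return names


# Usage with a stand-in for boto3.client("sagemaker").create_processing_job
calls = []
names = launch_jobs(lambda **kw: calls.append(kw) or {"ProcessingJobArn": "arn"},
                    [{"RoleArn": "role"}] * 3, stamp="test")
print(names)  # ['ndvi-batch-00-test', 'ndvi-batch-01-test', 'ndvi-batch-02-test']
```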

After you launch the jobs, SageMaker automatically spins up the required instances and configures the cluster to process the images listed in your input manifest. The entire setup runs without any hands-on management on your part. To monitor and manage the processing jobs, you can use the SageMaker console, which offers real-time updates on the status and completion of your processing tasks. In our example, it took under 20 minutes to process all 8,581 images with 500 instances. If needed, you can shorten processing times further simply by increasing the number of instances.
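With 25 jobs of 20 instances each, 500 instances share the 8,581 images, roughly 17 images per instance. Besides the console, job status can be polled programmatically. As a sketch, assuming `describe_processing_job` and its `ProcessingJobStatus` values (`InProgress`, `Completed`, `Failed`, `Stopping`, `Stopped`), the following waits until every job reaches a terminal state. The helper name and polling interval are illustrative, and `sleep` is injectable so the logic can be tested without waiting.

```python
import time
from typing import Any, Callable, Dict, Iterable

TERMINAL_STATES = {"Completed", "Failed", "Stopped"}


def wait_for_jobs(describe_job: Callable[..., Dict[str, Any]],
                  job_names: Iterable[str],
                  poll_seconds: float = 30.0,
                  sleep: Callable[[float], None] = time.sleep) -> Dict[str, str]:
    """Poll until every job is in a terminal state; return name -> final status."""
    pending = set(job_names)
    final: Dict[str, str] = {}
    while pending:
        for name in sorted(pending):
            status = describe_job(ProcessingJobName=name)["ProcessingJobStatus"]
            if status in TERMINAL_STATES:
                final[name] = status
        pending.difference_update(final)  # drop jobs that have finished
        if pending:
            sleep(poll_seconds)
    return final


# Usage with a stand-in for boto3.client("sagemaker").describe_processing_job
statuses = {"a": iter(["InProgress", "Completed"]), "b": iter(["Failed"])}
sleeps = []
result = wait_for_jobs(
    lambda ProcessingJobName: {"ProcessingJobStatus": next(statuses[ProcessingJobName])},
    ["a", "b"], poll_seconds=1, sleep=sleeps.append)
print(result)  # {'b': 'Failed', 'a': 'Completed'}
```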

Conclusion

The power and efficiency of SageMaker geospatial capabilities have opened new doors for environmental monitoring, particularly in vegetation mapping. Through this example, we showed how to process over 8,500 satellite images in less than 20 minutes. We demonstrated not only the technical feasibility, but also the efficiency gains from using the cloud for environmental analysis. This represents a significant leap from traditional, resource-intensive methods to a more agile, scalable, and cost-effective approach. The flexibility to scale processing resources up or down as needed, combined with the ease of accessing and analyzing vast datasets, positions SageMaker as a transformative tool in geospatial analysis. By simplifying the complexities of large-scale data processing, SageMaker enables scientists, researchers, and business stakeholders to focus more on deriving insights and less on infrastructure and data management.

As we look to the future, the integration of ML and geospatial analytics promises to further enhance our understanding of the planet's ecological systems. The ability to monitor changes in real time, predict future trends, and respond with more informed decisions can significantly contribute to global conservation efforts. This example of vegetation mapping is just the beginning of running planetary-scale ML. See Amazon SageMaker geospatial capabilities to learn more.

About the Authors

Xiong Zhou is a Senior Applied Scientist at AWS. He leads the science team for Amazon SageMaker geospatial capabilities. His current area of research includes LLM evaluation and data generation. In his spare time, he enjoys running, playing basketball, and spending time with his family.

Anirudh Viswanathan is a Sr Product Manager, Technical – External Services with the SageMaker geospatial ML team. He holds a Masters in Robotics from Carnegie Mellon University and an MBA from the Wharton School of Business, and is named inventor on over 40 patents. He enjoys long-distance running, visiting art galleries, and Broadway shows.

Janosch Woschitz is a Senior Solutions Architect at AWS, specializing in AI/ML. With over 15 years of experience, he supports customers globally in leveraging AI and ML for innovative solutions and building ML platforms on AWS. His expertise spans machine learning, data engineering, and scalable distributed systems, augmented by a strong background in software engineering and industry expertise in domains such as autonomous driving.

Li Erran Li is the applied science manager at human-in-the-loop services, AWS AI, Amazon. His research interests are 3D deep learning, and vision and language representation learning. Previously he was a senior scientist at Alexa AI, the head of machine learning at Scale AI, and the chief scientist at Pony.ai. Before that, he was with the perception team at Uber ATG and the machine learning platform team at Uber, working on machine learning for autonomous driving, machine learning systems, and strategic AI initiatives. He started his career at Bell Labs and was an adjunct professor at Columbia University. He co-taught tutorials at ICML'17 and ICCV'19, and co-organized several workshops at NeurIPS, ICML, CVPR, and ICCV on machine learning for autonomous driving, 3D vision and robotics, machine learning systems, and adversarial machine learning. He has a PhD in computer science from Cornell University. He is an ACM Fellow and IEEE Fellow.

Amit Modi is the product leader for SageMaker MLOps, ML Governance, and Responsible AI at AWS. With over a decade of B2B experience, he builds scalable products and teams that drive innovation and deliver value to customers globally.

Kris Efland is a visionary technology leader with a successful track record of driving product innovation and growth for over 20 years. Kris has helped create new products, including consumer electronics and enterprise software, across many industries at both startups and large companies. In his current role at Amazon Web Services (AWS), Kris leads the Geospatial AI/ML category. He works at the forefront of Amazon's fastest-growing ML service, Amazon SageMaker, which serves over 100,000 customers worldwide. He recently led the launch of Amazon SageMaker's new geospatial capabilities, a powerful set of tools that allow data scientists and machine learning engineers to build, train, and deploy ML models using satellite imagery, maps, and location data. Before joining AWS, Kris was the Head of Autonomous Vehicle (AV) Tools and AV Maps for Lyft, where he led the company's autonomous mapping efforts and the toolchain used to build and operate Lyft's fleet of autonomous vehicles. He also served as the Director of Engineering at HERE Technologies and Nokia, and has co-founded several startups.
