This put up is co-written Rodrigo Amaral, Ashwin Murthy and Meghan Stronach from Qualcomm.

On this put up, we introduce an revolutionary answer for end-to-end mannequin customization and deployment on the edge utilizing Amazon SageMaker and Qualcomm AI Hub. This seamless cloud-to-edge AI improvement expertise will allow builders to create optimized, extremely performant, and customized managed machine studying options the place you’ll be able to deliver you personal mannequin (BYOM) and produce your personal information (BYOD) to fulfill assorted enterprise necessities throughout industries. From real-time analytics and predictive upkeep to customized buyer experiences and autonomous programs, this strategy caters to various wants.

We show this answer by strolling you thru a complete step-by-step information on tips on how to fine-tune YOLOv8, a real-time object detection mannequin, on Amazon Net Providers (AWS) utilizing a customized dataset. The method makes use of a single ml.g5.2xlarge occasion (offering one NVIDIA A10G Tensor Core GPU) with SageMaker for fine-tuning. After fine-tuning, we present you tips on how to optimize the mannequin with Qualcomm AI Hub in order that it’s prepared for deployment throughout edge units powered by Snapdragon and Qualcomm platforms.

Enterprise problem

Immediately, many builders use AI and machine studying (ML) fashions to sort out a wide range of enterprise circumstances, from good identification and pure language processing (NLP) to AI assistants. Whereas open supply fashions supply a very good start line, they typically don’t meet the precise wants of the purposes being developed. That is the place mannequin customization turns into important, permitting builders to tailor fashions to their distinctive necessities and guarantee optimum efficiency for particular use circumstances.

As well as, on-device AI deployment is a game-changer for builders crafting use circumstances that demand immediacy, privateness, and reliability. By processing information regionally, edge AI minimizes latency, ensures delicate data stays on-device, and ensures performance even in poor connectivity. Builders are subsequently on the lookout for an end-to-end answer the place they cannot solely customise the mannequin but in addition optimize the mannequin to focus on on-device deployment. This permits them to supply responsive, safe, and sturdy AI purposes, delivering distinctive consumer experiences.

How can Amazon SageMaker and Qualcomm AI Hub assist?

BYOM and BYOD supply thrilling alternatives so that you can customise the mannequin of your selection, use your personal dataset, and deploy it in your goal edge machine. By this answer, we suggest utilizing SageMaker for mannequin fine-tuning and Qualcomm AI Hub for edge deployments, making a complete end-to-end mannequin deployment pipeline. This opens new prospects for mannequin customization and deployment, enabling builders to tailor their AI options to particular use circumstances and datasets.

SageMaker is a wonderful selection for mannequin coaching, as a result of it reduces the time and value to coach and tune ML fashions at scale with out the necessity to handle infrastructure. You possibly can benefit from the highest-performing ML compute infrastructure at the moment obtainable, and SageMaker can scale infrastructure from one to hundreds of GPUs. Since you pay just for what you utilize, you’ll be able to handle your coaching prices extra successfully. SageMaker distributed coaching libraries can robotically cut up giant fashions and coaching datasets throughout AWS GPU cases, or you should use third-party libraries, akin to DeepSpeed, Horovod, Totally Sharded Knowledge Parallel (FSDP), or Megatron. You possibly can prepare basis fashions (FMs) for weeks and months with out disruption by robotically monitoring and repairing coaching clusters.

After the mannequin is educated, you should use Qualcomm AI Hub to optimize, validate, and deploy these custom-made fashions on hosted units with Snapdragon and Qualcomm Applied sciences inside minutes. Qualcomm AI Hub is a developer-centric platform designed to streamline on-device AI improvement and deployment. AI Hub provides automated conversion and optimization of PyTorch or ONNX fashions for environment friendly on-device deployment utilizing TensorFlow Lite, ONNX Runtime, or Qualcomm AI Engine Direct SDK. It additionally has an present library of over 100 pre-optimized fashions for Qualcomm and Snapdragon platforms.

Qualcomm AI Hub has served greater than 800 firms and continues to develop its choices when it comes to fashions obtainable, platforms supported, and extra.

Utilizing SageMaker and Qualcomm AI Hub collectively can create new alternatives for speedy iteration on mannequin customization, offering entry to highly effective improvement instruments and enabling a clean workflow from cloud coaching to on-device deployment.

Answer structure

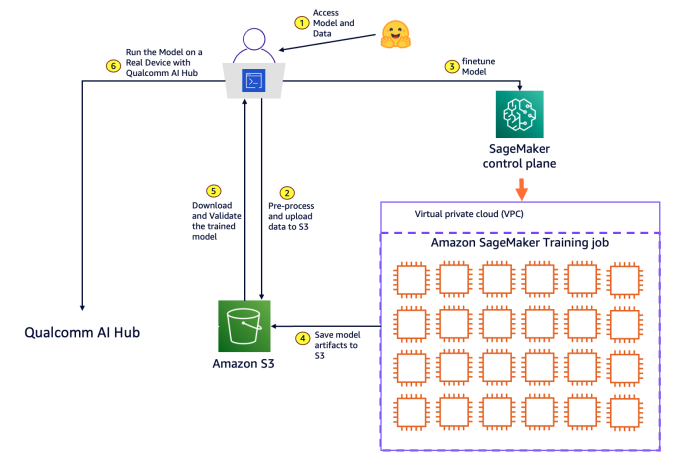

The next diagram illustrates the answer structure. Builders working of their native setting provoke the next steps:

- Choose an open supply mannequin and a dataset for mannequin customization from the Hugging Face repository.

- Pre-process the information into the format required by your mannequin for coaching, then add the processed information to Amazon Easy Storage Service (Amazon S3). Amazon S3 supplies a extremely scalable, sturdy, and safe object storage answer in your machine studying use case.

- Name the SageMaker management airplane API utilizing the SageMaker Python SDK for mannequin coaching. In response, SageMaker provisions a resilient distributed coaching cluster with the requested quantity and sort of compute cases to run the mannequin coaching. SageMaker additionally handles orchestration and displays the infrastructure for any faults.

- After the coaching is full, SageMaker spins down the cluster, and also you’re billed for the web coaching time in seconds. The ultimate mannequin artifact is saved to an S3 bucket.

- Pull the fine-tuned mannequin artifact from Amazon S3 to the native improvement setting and validate the mannequin accuracy.

- Use Qualcomm AI Hub to compile and profile the mannequin, working it on cloud-hosted units to ship efficiency metrics forward of downloading for deployment throughout edge units.

Use case stroll by means of

Think about a number one electronics producer aiming to reinforce its high quality management course of for printed circuit boards (PCBs) by implementing an automatic visible inspection system. Initially, utilizing an open supply imaginative and prescient mannequin, the producer collects and annotates a big dataset of PCB pictures, together with each faulty and non-defective samples.

This dataset, much like the keremberke/pcb-defect-segmentation dataset from HuggingFace, accommodates annotations for frequent defect courses akin to dry joints, incorrect installations, PCB harm, and brief circuits. With SageMaker, the producer trains a customized YOLOv8 mannequin (You Solely Look As soon as), developed by Ultralytics, to acknowledge these particular PCB defects. The mannequin is then optimized for deployment on the edge utilizing Qualcomm AI Hub, offering environment friendly efficiency on chosen platforms akin to industrial cameras or handheld units used within the manufacturing line.

This custom-made mannequin considerably improves the standard management course of by precisely detecting PCB defects in real-time. It reduces the necessity for handbook inspections and minimizes the chance of faulty PCBs progressing by means of the manufacturing course of. This results in improved product high quality, elevated effectivity, and substantial price financial savings.

Let’s stroll by means of this situation with an implementation instance.

Stipulations

For this walkthrough, you need to have the next:

- Jupyter Pocket book – The instance has been examined in Visible Studio Code with Jupyter Pocket book utilizing the Python 3.11.7 setting.

- An AWS account.

- Create an AWS Identification and Entry Administration (IAM) consumer with the

AmazonSageMakerFullAccesscoverage to allow you to run SageMaker APIs. Arrange your safety credentials for CLI. - Set up AWS Command Line Interface (AWS CLI) and use

aws configureto arrange your IAM credentials securely. - Create a job with the identify

sagemakerroleto be assumed by SageMaker. Add managed insurance policies AmazonS3FullAccess to provide SageMaker entry to your S3 buckets. - Ensure your account has the SageMaker Coaching useful resource sort restrict for ml.g5.2xlarge elevated to 1 utilizing the Service Quotas console.

- Observe the get began directions to put in the required Qualcomm AI Hub library and arrange your distinctive API token for Qualcomm AI Hub.

- Use the next command to clone the GitHub repository with the property for this use case. This repository consists of a pocket book that references coaching property.

The sm-qai-hub-examples/yolo listing accommodates all of the coaching scripts that you just would possibly have to deploy this pattern.

Subsequent, you’ll run the sagemaker_qai_hub_finetuning.ipynb pocket book to fine-tune the YOLOv8 mannequin on SageMaker and deploy it on the sting utilizing AI Hub. See the pocket book for extra particulars on every step. Within the following sections, we stroll you thru the important thing elements of fine-tuning the mannequin.

Step 1: Entry the mannequin and information

- Start by putting in the required packages in your Python setting. On the high of the pocket book, embody the next code snippet, which makes use of Python’s pip package deal supervisor to put in the required packages in your native runtime setting.

- Import the required libraries for the venture. Particularly, import the

Datasetclass from the Hugging Face datasets library and theYOLOclass from theultralyticslibrary. These libraries are essential in your work, as a result of they supply the instruments that you must entry and manipulate the dataset and work with the YOLO object detection mannequin.

Step 2: Pre-process and add information to S3

To fine-tune your YOLOv8 mannequin for detecting PCB defects, you’ll use the keremberke/pcb-defect-segmentation dataset from Hugging Face. This dataset consists of 189 pictures of chip defects (prepare: 128 pictures, validation: 25 pictures and take a look at: 36 pictures). These defects are annotated in COCO format.

YOLOv8 doesn’t acknowledge these courses out of the field, so you’ll map YOLOv8’s logits to establish these courses throughout mannequin fine-tuning, as proven within the following picture.

- Start by downloading the dataset from Hugging Face to the native disk and changing it to the required YOLO dataset construction utilizing the utility operate

CreateYoloHFDataset. This construction ensures that the YOLO API accurately hundreds and processes the pictures and labels through the coaching part. - Add the dataset to Amazon S3. This step is essential as a result of the dataset saved in S3 will function the enter information channel for the SageMaker coaching job. SageMaker will effectively handle the method of distributing this information throughout the coaching cluster, permitting every node to entry the required data for mannequin coaching.

Alternatively, you should use your personal customized dataset (non-Hugging Face) to fine-tune the YOLOv8 mannequin, so long as the dataset complies with the YOLOv8 dataset format.

Step 3: Wonderful-tune your YOLOv8 mannequin

3.1: Overview the coaching script

You’re now ready to fine-tune the mannequin utilizing the mannequin.prepare technique from the Ultralytics YOLO library.

We’ve ready a script known as train_yolov8.py that can carry out the next duties. Let’s shortly evaluation the important thing factors on this script earlier than you launch the coaching job.

- The coaching script will do the next: Load a YOLOv8 mannequin from the Ultralytics library

- Use the prepare technique to run fine-tuning that considers the mannequin information, adjusts its parameters, and optimizes its skill to precisely predict object courses and places in pictures.

After the mannequin is educated, the script runs inference to check the mannequin output and save the mannequin artifacts to a neighborhood Amazon S3 mapped folder

3.2: Launch the coaching

You’re now able to launch the coaching. You’ll use the SageMaker PyTorch coaching estimator to provoke coaching. The estimator simplifies the coaching course of by automating a number of of the important thing duties on this instance:

- The SageMaker estimator spins up a coaching cluster of 1 2xlarge occasion. SageMaker handles the setup and administration of those compute cases, which reduces the whole price of possession.

- The estimator additionally makes use of one of many pre-built containers managed by SageMaker—PyTorch, which incorporates an optimized compiled model of the PyTorch framework together with its required dependencies and GPU-specific libraries for accelerated computations.

The estimator.match() technique initiates the coaching course of with the desired enter information channels. Following is the code used to launch the coaching job together with the required parameters.

You possibly can observe a SageMaker coaching job by monitoring its standing utilizing the AWS Administration Console, AWS CLI, or AWS SDKs. To find out when the job is accomplished, verify for the Accomplished standing or arrange Amazon CloudWatch alarms to inform you when the job transitions to the Accomplished state.

Step 4 & 5: Save, obtain and validate the educated mannequin

The coaching course of generates mannequin artifacts that shall be saved to the S3 bucket laid out in output_path location. This instance makes use of the download_tar_and_untar utility to obtain the mannequin to a neighborhood drive.

- Run inference on this mannequin and visually validate how shut floor reality and mannequin predictions bounding containers align on take a look at pictures. The next code reveals tips on how to generate a picture mosaic utilizing a customized utility operate—

draw_bounding_boxes—that overlays a picture with floor reality and mannequin classification together with a confidence worth for sophistication prediction.

From the previous picture mosaic, you’ll be able to observe two distinct units of bounding containers: the cyan containers point out human annotations of defects on the PCB picture, whereas the pink containers symbolize the mannequin’s predictions of defects. Together with the expected class, you too can see the boldness worth for every prediction, which displays the standard of the YOLOv8 mannequin’s output.

After fine-tuning, YOLOv8 begins to precisely predict the PCB defect courses current within the customized dataset, although it hadn’t encountered these courses throughout mannequin pretraining. Moreover, the expected bounding containers are carefully aligned with the bottom reality, with confidence scores of higher than or equal to 0.5 typically. You possibly can additional enhance the mannequin’s efficiency with out the necessity for hyperparameter guesswork through the use of a SageMaker hyperparameter tuning job.

Step 6: Run the mannequin on an actual machine with Qualcomm AI Hub

Now that you just’re validated the fine-tuned mannequin on PyTorch, you need to run the mannequin on an actual machine.

Qualcomm AI Hub lets you do the next:

- Compile and optimize the PyTorch mannequin right into a format that may be run on a tool

- Run the compiled mannequin on a tool with a Snapdragon processor hosted in AWS machine farm

- Confirm on-device mannequin accuracy

- Measure on-device mannequin latency

To run the mannequin:

- Compile the mannequin.

Step one is changing the PyTorch mannequin right into a format that may run on the machine.

This instance makes use of a Home windows laptop computer powered by the Snapdragon X Elite processor. This machine makes use of the ONNX mannequin format, which you’ll configure throughout compilation.

As you get began, you’ll be able to see a listing of all of the units supported on Qualcomm AI Hub, by working qai-hub list-devices.

See Compiling Fashions to be taught extra about compilation on Qualcomm AI Hub.

- Inference the mannequin on an actual machine

Run the compiled mannequin on an actual cloud-hosted machine with Snapdragon utilizing the identical mannequin enter you verified regionally with PyTorch.

See Working Inference to be taught extra about on-device inference on Qualcomm AI Hub.

- Profile the mannequin on an actual machine.

Profiling measures the latency of the mannequin when run on a tool. It stories the minimal worth over 100 invocations of the mannequin to finest isolate mannequin inference time from different processes on the machine.

See Profiling Fashions to be taught extra about profiling on Qualcomm AI Hub.

- Deploy the compiled mannequin to your machine

Run the command beneath to obtain the compiled mannequin.

The compiled mannequin can be utilized along side the AI Hub pattern utility hosted right here. This utility makes use of the mannequin to run object detection on a Home windows laptop computer powered by Snapdragon that you’ve regionally.

Conclusion

Mannequin customization with your personal information by means of Amazon SageMaker—with over 250 fashions obtainable on SageMaker JumpStart—is an addition to the present options of Qualcomm AI Hub, which embody BYOM and entry to a rising library of over 100 pre-optimized fashions. Collectively, these options create a wealthy setting for builders aiming to construct and deploy custom-made on-device AI fashions throughout Snapdragon and Qualcomm platforms.

The collaboration between Amazon SageMaker and Qualcomm AI Hub will assist improve the consumer expertise and streamline machine studying workflows, enabling extra environment friendly mannequin improvement and deployment throughout any utility on the edge. With this effort, Qualcomm Applied sciences and AWS are empowering their customers to create extra customized, context-aware, and privacy-focused AI experiences.

To be taught extra, go to Qualcomm AI Hub and Amazon SageMaker. For queries and updates, be a part of the Qualcomm AI Hub neighborhood on Slack.

Snapdragon and Qualcomm branded merchandise are merchandise of Qualcomm Applied sciences, Inc. or its subsidiaries

In regards to the authors

Rodrigo Amaral at the moment serves because the Lead for Qualcomm AI Hub Advertising and marketing at Qualcomm Applied sciences, Inc. On this function, he spearheads go-to-market methods, product advertising and marketing, developer actions, with a concentrate on AI and ML with a concentrate on edge units. He brings virtually a decade of expertise in AI, complemented by a powerful background in enterprise. Rodrigo holds a BA in Enterprise and a Grasp’s diploma in Worldwide Administration.

Rodrigo Amaral at the moment serves because the Lead for Qualcomm AI Hub Advertising and marketing at Qualcomm Applied sciences, Inc. On this function, he spearheads go-to-market methods, product advertising and marketing, developer actions, with a concentrate on AI and ML with a concentrate on edge units. He brings virtually a decade of expertise in AI, complemented by a powerful background in enterprise. Rodrigo holds a BA in Enterprise and a Grasp’s diploma in Worldwide Administration.

Ashwin Murthy is a Machine Studying Engineer engaged on Qualcomm AI Hub. He works on including new fashions to the general public AI Hub Fashions assortment, with a particular concentrate on quantized fashions. He beforehand labored on machine studying at Meta and Groq.

Ashwin Murthy is a Machine Studying Engineer engaged on Qualcomm AI Hub. He works on including new fashions to the general public AI Hub Fashions assortment, with a particular concentrate on quantized fashions. He beforehand labored on machine studying at Meta and Groq.

Meghan Stronach is a PM on Qualcomm AI Hub. She works to assist our exterior neighborhood and prospects, delivering new options throughout Qualcomm AI Hub and enabling adoption of ML on machine. Born and raised within the Toronto space, she graduated from the College of Waterloo in Administration Engineering and has spent her time at firms of varied sizes.

Meghan Stronach is a PM on Qualcomm AI Hub. She works to assist our exterior neighborhood and prospects, delivering new options throughout Qualcomm AI Hub and enabling adoption of ML on machine. Born and raised within the Toronto space, she graduated from the College of Waterloo in Administration Engineering and has spent her time at firms of varied sizes.

Kanwaljit Khurmi is a Principal Generative AI/ML Options Architect at Amazon Net Providers. He works with AWS prospects to supply steering and technical help, serving to them enhance the worth of their options when utilizing AWS. Kanwaljit focuses on serving to prospects with containerized and machine studying purposes.

Kanwaljit Khurmi is a Principal Generative AI/ML Options Architect at Amazon Net Providers. He works with AWS prospects to supply steering and technical help, serving to them enhance the worth of their options when utilizing AWS. Kanwaljit focuses on serving to prospects with containerized and machine studying purposes.

Pranav Murthy is an AI/ML Specialist Options Architect at AWS. He focuses on serving to prospects construct, prepare, deploy and migrate machine studying (ML) workloads to SageMaker. He beforehand labored within the semiconductor business growing giant pc imaginative and prescient (CV) and pure language processing (NLP) fashions to enhance semiconductor processes utilizing state-of-the-art ML methods. In his free time, he enjoys enjoying chess and touring. You will discover Pranav on LinkedIn.

Pranav Murthy is an AI/ML Specialist Options Architect at AWS. He focuses on serving to prospects construct, prepare, deploy and migrate machine studying (ML) workloads to SageMaker. He beforehand labored within the semiconductor business growing giant pc imaginative and prescient (CV) and pure language processing (NLP) fashions to enhance semiconductor processes utilizing state-of-the-art ML methods. In his free time, he enjoys enjoying chess and touring. You will discover Pranav on LinkedIn.

Karan Jain is a Senior Machine Studying Specialist at AWS, the place he leads the worldwide Go-To-Market technique for Amazon SageMaker Inference. He helps prospects speed up their generative AI and ML journey on AWS by offering steering on deployment, cost-optimization, and GTM technique. He has led product, advertising and marketing, and enterprise improvement efforts throughout industries for over 10 years, and is captivated with mapping advanced service options to buyer options.

Karan Jain is a Senior Machine Studying Specialist at AWS, the place he leads the worldwide Go-To-Market technique for Amazon SageMaker Inference. He helps prospects speed up their generative AI and ML journey on AWS by offering steering on deployment, cost-optimization, and GTM technique. He has led product, advertising and marketing, and enterprise improvement efforts throughout industries for over 10 years, and is captivated with mapping advanced service options to buyer options.