Today, we're happy to announce the general availability (GA) of Amazon Bedrock Custom Model Import. This feature empowers customers to import and use their customized models alongside existing foundation models (FMs) through a single, unified API. Whether leveraging fine-tuned models like Meta Llama, Mistral Mixtral, and IBM Granite, or developing proprietary models based on popular open-source architectures, customers can now bring their custom models into Amazon Bedrock without the overhead of managing infrastructure or model lifecycle tasks.

Amazon Bedrock is a fully managed service that offers a choice of high-performing FMs from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI. Amazon Bedrock offers a serverless experience, so you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using AWS tools without having to manage infrastructure.

With Amazon Bedrock Custom Model Import, customers can access their imported custom models on demand in a serverless manner, freeing them from the complexities of deploying and scaling models themselves. They can accelerate generative AI application development by using native Amazon Bedrock tools and features such as Knowledge Bases, Guardrails, Agents, and more, all through a unified and consistent developer experience.

Benefits of Amazon Bedrock Custom Model Import include:

- Flexibility to use existing fine-tuned models: Customers can use their prior investments in model customization by importing existing customized models into Amazon Bedrock without the need to recreate or retrain them. This flexibility maximizes the value of previous efforts and accelerates application development.

- Integration with Amazon Bedrock features: Imported custom models can be seamlessly integrated with the native tools and features of Amazon Bedrock, such as Knowledge Bases, Guardrails, Agents, and Model Evaluation. This unified experience enables developers to use the same tooling and workflows across both base FMs and imported custom models.

- Serverless: Customers can access their imported custom models in an on-demand and serverless manner. This eliminates the need to manage or scale underlying infrastructure, because Amazon Bedrock handles all these aspects. Customers can focus on developing generative AI applications without worrying about infrastructure management or scalability issues.

- Support for popular model architectures: Amazon Bedrock Custom Model Import supports a variety of popular model architectures, including Meta Llama 3.2, Mistral 7B, Mixtral 8x7B, and more. Customers can import custom weights in formats like Hugging Face Safetensors from Amazon SageMaker and Amazon S3. This broad compatibility allows customers to work with the models that best suit their specific needs and use cases, giving them greater flexibility and choice in model selection.

- Leverage the Amazon Bedrock Converse API: Amazon Bedrock Custom Model Import allows customers to use their supported fine-tuned models with the Amazon Bedrock Converse API, which simplifies and unifies access to the models.
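As a minimal sketch of what a Converse call to an imported model can look like with the AWS SDK for Python (Boto3), the following assumes `runtime_client` is a `bedrock-runtime` client and `model_arn` is the ARN of an imported model whose architecture supports Converse. The helper name `ask_with_converse` is hypothetical.

```python
from typing import Any


def ask_with_converse(runtime_client: Any, model_arn: str, question: str,
                      max_tokens: int = 150, temperature: float = 0.0) -> str:
    """Send a single user turn through the Converse API and return the reply text."""
    response = runtime_client.converse(
        modelId=model_arn,  # ARN of the imported model
        messages=[{"role": "user", "content": [{"text": question}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": temperature},
    )
    # Converse returns the same message structure for every supported model.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

For example, create the client with `boto3.client("bedrock-runtime", region_name="us-east-1")` and pass it in. Taking the client as a parameter keeps the helper testable without AWS credentials.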

Getting started with Custom Model Import

One of the important requirements from our customers is the ability to customize models with their proprietary data while retaining complete ownership and control over the tuned model artifact and its deployment. Customization could be in the form of domain adaptation or instruction fine-tuning. Customers have a wide range of options for fine-tuning models efficiently and cost-effectively. However, hosting models presents its own unique set of challenges. Customers are looking for some key aspects, namely:

- Using their existing customization investment and fine-grained control over customization.

- Having a unified developer experience when accessing custom models or base models through Amazon Bedrock's API.

- Ease of deployment through a fully managed, serverless service.

- Using pay-as-you-go inference to minimize the costs of their generative AI workloads.

- Being backed by enterprise-grade security and privacy tooling.

The Amazon Bedrock Custom Model Import feature seeks to address these concerns. To bring your custom model into the Amazon Bedrock ecosystem, you need to run an import job. The import job can be invoked using the AWS Management Console or through APIs. In this post, we demonstrate the code for running the model import process through APIs. After the model is imported, you can invoke it by using the model's Amazon Resource Name (ARN).

As of this writing, supported model architectures include Meta Llama (v2, 3, 3.1, and 3.2), Mistral 7B, Mixtral 8x7B, Flan, and IBM Granite models like Granite 3B-Code, 8B-Code, 20B-Code, and 34B-Code.

A few points to be aware of when importing your model:

- Models must be serialized in Safetensors format.

- If you have a different format, you can potentially use the Llama convert scripts or Mistral convert scripts to convert your model to a supported format.

- The import process expects at least the following files: .safetensors, config.json, tokenizer_config.json, tokenizer.json, and tokenizer.model.

- The supported precisions for the model weights are FP32, FP16, and BF16.

- For fine-tuning jobs that create adapters like LoRA-PEFT adapters, the import process expects the adapters to be merged into the main base model weights as described in Model merging.
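Before uploading weights, a quick local pre-flight check can catch missing files. The sketch below assumes the model sits in a local folder and that the configuration file is named config.json; `missing_import_files` is a hypothetical helper. Some architectures (for example, Llama 3 tokenizers) may not ship every tokenizer file listed, so treat the result as a hint rather than the service's own rule.

```python
from pathlib import Path
from typing import List

# Minimum files named in this post; config.json is assumed to be the configuration file.
REQUIRED_FILES = ("config.json", "tokenizer_config.json", "tokenizer.json", "tokenizer.model")


def missing_import_files(model_dir: str) -> List[str]:
    """Return the expected files that are absent from a local model folder."""
    root = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (root / name).is_file()]
    # Weights may be split across several shards, so any *.safetensors file counts.
    if not any(root.glob("*.safetensors")):
        missing.append("*.safetensors")
    return missing
```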

Importing a model using the Amazon Bedrock console

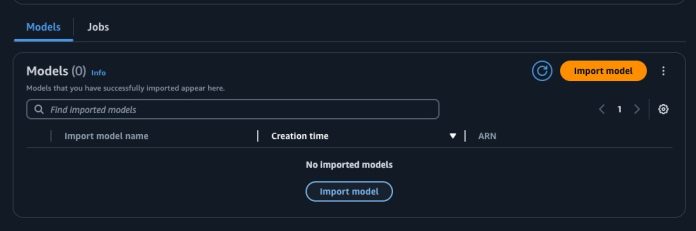

- Go to the Amazon Bedrock console and choose Foundation models and then Imported models from the navigation pane on the left-hand side to get to the Models page.

- Choose Import model to configure the import process.

- Configure the model.

- Enter the location of your model weights. These can be in Amazon S3 or point to a SageMaker Model ARN object.

- Enter a Job name. We recommend suffixing it with the version of the model. As of now, you need to manage the generative AI operations aspects outside of this feature.

- Configure your AWS Key Management Service (AWS KMS) key for encryption. By default, the job uses a key owned and managed by AWS.

- Service access role. You can create a new role or use an existing role that has the required permissions to run the import process. The permissions must include access to your Amazon S3 bucket if you're specifying model weights through S3.

- After the Import model job is complete, you will see the model and the model ARN. Make a note of the ARN to use later.

- Test the model using the on-demand feature in the Text playground as you would for any base foundation model.

The import process validates that the model configuration complies with the specified architecture for that model by reading the config.json file, and it validates architecture values such as the maximum sequence length and other relevant details. It also checks that the model weights are in the Safetensors format. This validation verifies that the imported model meets the necessary requirements and is compatible with the system.
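The exact rules the service applies aren't spelled out here, but you can approximate part of the check locally. This minimal sketch assumes a Hugging Face-style config.json with the common `architectures`, `max_position_embeddings`, and `torch_dtype` keys; `summarize_config` is hypothetical and does not reproduce Bedrock's validation.

```python
import json
from pathlib import Path
from typing import Any, Dict

# torch_dtype spellings in Hugging Face configs for FP32, FP16, and BF16.
SUPPORTED_DTYPES = {"float32", "float16", "bfloat16"}


def summarize_config(model_dir: str) -> Dict[str, Any]:
    """Read config.json and report fields worth checking before an import job."""
    config = json.loads((Path(model_dir) / "config.json").read_text(encoding="utf-8"))
    dtype = config.get("torch_dtype")
    return {
        "architectures": config.get("architectures", []),
        "max_position_embeddings": config.get("max_position_embeddings"),
        "torch_dtype": dtype,
        "dtype_supported": dtype in SUPPORTED_DTYPES,
    }
```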

Fine-tuning a Meta Llama model on SageMaker

Meta Llama 3.2 offers multimodal vision and lightweight models, representing Meta's latest advances in large language models (LLMs). These new models provide enhanced capabilities and broader applicability across various use cases. With a focus on responsible innovation and system-level safety, the Llama 3.2 models demonstrate state-of-the-art performance on a wide range of industry benchmarks and introduce features that help you build a new generation of AI experiences.

SageMaker JumpStart provides FMs through two primary interfaces: SageMaker Studio and the SageMaker Python SDK. This gives you multiple options to discover and use hundreds of models for your use case.

In this section, we show you how to fine-tune the Llama 3.2 3B Instruct model using SageMaker JumpStart. We also share the supported instance types and context for the Llama 3.2 models available in SageMaker JumpStart. Although not highlighted in this post, you can also find other Llama 3.2 model variants that can be fine-tuned using SageMaker JumpStart.

Instruction fine-tuning

The text generation model can be instruction fine-tuned on any text data, provided that the data is in the expected format. The instruction fine-tuned model can then be deployed for inference. The training data must be formatted in JSON Lines (.jsonl) format, where each line is a dictionary representing a single data sample. All training data must be in a single folder, but can be saved in multiple JSON Lines files. The training folder can also contain a template.json file describing the input and output formats.
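To make the format concrete, here is a minimal sketch that writes training samples as JSON Lines, one dictionary per line. The record keys used in the test (`question`, `context`, `answer`) and the `write_jsonl` helper are hypothetical, not the schema of the dataset used in this post.

```python
import json
from pathlib import Path
from typing import Iterable


def write_jsonl(records: Iterable[dict], path: str) -> int:
    """Write one JSON object per line and return how many samples were written."""
    count = 0
    with Path(path).open("w", encoding="utf-8") as f:
        for record in records:
            if not isinstance(record, dict):
                raise TypeError("each training sample must be a JSON object (dict)")
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count
```

Writing several files into the same folder (for example, train-0.jsonl and train-1.jsonl) still satisfies the single-folder requirement.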

Synthetic dataset

For this use case, we use a synthetically generated dataset named amazon10Ksynth.jsonl in an instruction-tuning format. This dataset contains approximately 200 entries designed for training and fine-tuning LLMs in the finance domain.

The next is an instance of the information format:

Prompt template

Next, we create a prompt template for using the data in an instruction input format for the training job (because we're instruction fine-tuning the model in this example), and for running inference against the deployed endpoint.

After the prompt template is created, upload the prepared dataset that will be used for fine-tuning to Amazon S3.
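The following sketch writes a template.json into the training folder and uploads the folder's JSON and JSON Lines files to S3. It assumes JumpStart's `prompt`/`completion` template keys, with placeholders matching the hypothetical record keys `question`, `context`, and `answer`; the template wording, bucket, prefix, and the `stage_and_upload` name are illustrative. `s3_client` is expected to be a Boto3 S3 client, whose `upload_file(Filename, Bucket, Key)` method is used here.

```python
import json
from pathlib import Path
from typing import Any, List

# Hypothetical instruction template; {question}, {context}, {answer} must match JSONL keys.
TEMPLATE = {
    "prompt": (
        "Below is an instruction that describes a task, paired with an input that "
        "provides further context. Write a response that appropriately completes "
        "the request.\n\n### Instruction:\n{question}\n\n### Input:\n{context}\n\n"
        "### Response:\n"
    ),
    "completion": "{answer}",
}


def stage_and_upload(local_dir: str, s3_client: Any, bucket: str, prefix: str) -> List[str]:
    """Write template.json into the training folder, then upload its JSON files to S3."""
    folder = Path(local_dir)
    (folder / "template.json").write_text(json.dumps(TEMPLATE), encoding="utf-8")
    uploaded = []
    for file in sorted(folder.iterdir()):
        # Only the training data (.jsonl) and the template (.json) belong in the channel.
        if file.suffix in {".jsonl", ".json"}:
            key = f"{prefix.rstrip('/')}/{file.name}"
            s3_client.upload_file(str(file), bucket, key)  # Filename, Bucket, Key
            uploaded.append(key)
    return uploaded
```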

Fine-tuning the Meta Llama 3.2 3B model

Now, we fine-tune the Llama 3.2 3B model on the financial dataset. The fine-tuning scripts are based on the scripts provided by the Llama fine-tuning repository.
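As a hedged sketch of launching the fine-tuning job with the SageMaker Python SDK: the model ID, instance type, and hyperparameter names below are assumptions modeled on JumpStart's Llama examples, so confirm them for your SDK version (for example, with `sagemaker.hyperparameters.retrieve_default`). `fine_tune` and `build_hyperparameters` are hypothetical helper names.

```python
from typing import Dict

# Assumed JumpStart model ID for Llama 3.2 3B Instruct; confirm it in SageMaker JumpStart.
MODEL_ID = "meta-textgeneration-llama-3-2-3b-instruct"


def build_hyperparameters(epochs: int = 5, max_input_length: int = 1024) -> Dict[str, str]:
    """JumpStart passes hyperparameters to the training script as strings."""
    return {
        "instruction_tuned": "True",  # train on the instruction template format
        "epoch": str(epochs),
        "max_input_length": str(max_input_length),
    }


def fine_tune(train_s3_uri: str, instance_type: str = "ml.g5.2xlarge"):
    """Launch a JumpStart fine-tuning job on the uploaded training folder."""
    # Imported lazily so the helper above works without the SageMaker SDK installed.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    estimator = JumpStartEstimator(
        model_id=MODEL_ID,
        environment={"accept_eula": "true"},  # Llama models require accepting the EULA
        instance_type=instance_type,
    )
    estimator.set_hyperparameters(**build_hyperparameters())
    estimator.fit({"training": train_s3_uri})  # "training" channel points at the S3 folder
    return estimator
```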

Importing a custom model from SageMaker to Amazon Bedrock

In this section, we use the Python SDK to create a model import job, get the imported model ID, and finally generate inferences. You can refer to the console screenshots in the earlier section for how to import a model using the Amazon Bedrock console.

Parameter and helper function setup

First, we create a few helper functions and set up our parameters to create the import job. The import job is responsible for collecting and deploying the model from SageMaker to Amazon Bedrock. This is done by using the create_model_import_job function.

Saved safetensors must be formatted so that the Amazon S3 location is the top-level folder. The configuration files and safetensors will be stored as shown in the following figure.
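A minimal sketch of the import call with Boto3, assuming `bedrock_client` is a `bedrock` control-plane client (`boto3.client("bedrock")`) and that the role ARN and S3 URI are your own. Following the versioning advice later in this post, it appends a version tag and a UTC timestamp to the job and model names; that naming scheme and the `start_import_job` helper are hypothetical.

```python
from datetime import datetime, timezone
from typing import Any


def start_import_job(bedrock_client: Any, model_s3_uri: str, role_arn: str,
                     base_name: str, version: str) -> str:
    """Start a Custom Model Import job and return its job ARN."""
    # Hypothetical naming scheme: base name + version + UTC timestamp, so each import is traceable.
    suffix = f"{version}-{datetime.now(timezone.utc):%Y%m%d-%H%M%S}"
    response = bedrock_client.create_model_import_job(
        jobName=f"{base_name}-job-{suffix}",
        importedModelName=f"{base_name}-{suffix}",
        roleArn=role_arn,  # service role with read access to the S3 location
        # Must point at the top-level folder that holds config.json and the *.safetensors files.
        modelDataSource={"s3DataSource": {"s3Uri": model_s3_uri}},
    )
    return response["jobArn"]
```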

Check the status and get the job ARN from the response.

After a few minutes, the model will be imported, and the status of the job can be checked using get_model_import_job. The job ARN is then used to get the imported model ARN, which we use to generate inferences.
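The polling step can be sketched as follows, assuming the job reports `InProgress`, `Completed`, or `Failed` in its `status` field and exposes `importedModelArn` once complete. `wait_for_import` is a hypothetical helper; the injectable `sleep` argument exists only to make it testable.

```python
import time
from typing import Any, Callable


def wait_for_import(bedrock_client: Any, job_arn: str, poll_seconds: float = 60.0,
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_model_import_job until the job finishes; return the imported model ARN."""
    while True:
        job = bedrock_client.get_model_import_job(jobIdentifier=job_arn)
        status = job["status"]
        if status == "Completed":
            return job["importedModelArn"]
        if status == "Failed":
            raise RuntimeError(f"Import failed: {job.get('failureMessage', 'no message')}")
        sleep(poll_seconds)  # still InProgress, wait before checking again
```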

Generating inferences using the imported custom model

The model can be invoked by using the invoke_model and converse APIs. The following is a helper function that will be used to invoke the model and extract the generated text from the overall output.
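Here is a minimal sketch of such a helper using InvokeModel. The request and response field names are assumptions: the body uses Llama-style `prompt`, `temperature`, `max_gen_len`, and `top_p`, and the parser accepts either a `generation` key or a completions-style `choices` list. Check the request format documented for your model's architecture before relying on it. The defaults follow the guidance later in this post (temperature 0 and roughly 150 output tokens); `generate` is a hypothetical name.

```python
import json
from typing import Any


def generate(runtime_client: Any, model_arn: str, prompt: str,
             temperature: float = 0.0, max_tokens: int = 150, top_p: float = 0.9) -> str:
    """Invoke an imported model with InvokeModel and return only the generated text."""
    body = {
        "prompt": prompt,
        "temperature": temperature,
        "max_gen_len": max_tokens,  # assumed Llama-style field name
        "top_p": top_p,
    }
    response = runtime_client.invoke_model(
        modelId=model_arn,
        body=json.dumps(body),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())  # body is a streaming object
    # Response shape is an assumption: try a Llama-style key, then a completions-style list.
    if "generation" in payload:
        return payload["generation"]
    return payload["choices"][0]["text"]
```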

Context setup and model response

Finally, we can use the custom model. First, we format our inquiry to match the fine-tuned prompt structure. This makes sure that the generated responses closely resemble the format used in the fine-tuning phase and are more aligned to our needs. To do this, we use the same template that we used to format the fine-tuning data. In practice, the context would come from your RAG solution, such as Amazon Bedrock Knowledge Bases. For this example, we add a sample context to demonstrate the concept:
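A minimal sketch of that formatting step. The template string and the `question`/`context` placeholder names are hypothetical; in practice, reuse the exact template from your fine-tuning job so that inference prompts match what the model saw during training.

```python
# Hypothetical template; it must be identical to the one used for fine-tuning.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n### Instruction:\n{question}\n\n### Input:\n{context}\n\n"
    "### Response:\n"
)


def build_prompt(question: str, context: str) -> str:
    """Fill the fine-tuning template so the inference prompt matches training prompts."""
    return PROMPT_TEMPLATE.format(question=question.strip(), context=context.strip())
```

The resulting string is what you pass as the prompt when invoking the imported model.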

The output will look similar to the following:

After the model has been fine-tuned and imported into Amazon Bedrock, you can experiment by sending different sets of input questions and context to the model to generate a response, as shown in the following example:

Some points to note

The examples in this post are meant to demonstrate Custom Model Import and aren't designed to be used in production. Because the model has been trained on only 200 samples of synthetically generated data, it's only useful for testing purposes. Ideally, you would have more diverse datasets and more samples, with continuous experimentation using hyperparameter tuning for your use case, to steer the model toward more desirable output. For this post, make sure that the temperature parameter is set to 0 and the max_tokens runtime parameter is set to a low value such as 100–150 tokens so that a succinct response is generated. You can experiment with other parameters to get the result you want. See Amazon Bedrock Recipes and GitHub for more examples.

Best practices to consider:

This feature brings significant advantages for hosting your fine-tuned models efficiently. As we continue to develop this feature to meet our customers' needs, there are a few points to be aware of:

- Define your test suite and acceptance metrics before starting the journey. Automating this will help save time and effort.

- Currently, the model weights must be all-inclusive, including the adapter weights. There are multiple methods for merging the models, and we recommend experimenting to determine the right method. The Custom Model Import feature lets you test your model on demand.

- When creating your import jobs, add versioning to the job name to help quickly track your models. Currently, we don't offer model versioning, and each import is a unique job that creates a unique model.

- The supported precisions for the model weights are FP32, FP16, and BF16. Run tests to validate that these work for your use case.

- The maximum concurrency that you can expect for each model is 16 per account. Higher concurrency requests cause the service to scale and increase the number of model copies.

- The number of model copies active at any point in time is available through Amazon CloudWatch. See Import a customized model to Amazon Bedrock for more information.

- As of this writing, we're releasing this feature in the US-EAST-1 and US-WEST-2 AWS Regions only. We will continue to release it to other Regions. Follow Model support by AWS Region for updates.

- The default import quota for each account is three models. If you need more for your use cases, work with your account teams to increase your account quota.

- The default throttling limit for this feature for each account is 100 invocations per second.

- You can use this sample notebook to performance test your models imported through this feature. The notebook is only a reference and is not designed to be exhaustive. We always recommend that you run your own full performance testing along with your end-to-end testing, including functional and evaluation testing.
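Given the concurrency and throttling limits above, clients should expect occasional throttling under load. One common pattern is exponential backoff with jitter, sketched here with a hypothetical `call_with_backoff` helper. The set of retryable error codes is an assumption (including `ModelNotReadyException` for a model that is still scaling up). As an alternative, botocore's built-in retries can be configured with `botocore.config.Config(retries={"max_attempts": 10, "mode": "adaptive"})`.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumed retryable error codes; ModelNotReadyException covers a model that is still scaling up.
RETRYABLE_CODES = {"ThrottlingException", "ModelNotReadyException", "ServiceUnavailableException"}


def call_with_backoff(fn: Callable[[], T], max_attempts: int = 6, base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying retryable service errors with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception as err:
            # botocore's ClientError carries the service error code in err.response.
            code = getattr(err, "response", {}).get("Error", {}).get("Code")
            if code not in RETRYABLE_CODES or attempt == max_attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ... with the default base
            sleep(delay + random.uniform(0, 0.1 * delay))  # add up to 10% jitter
    raise AssertionError("unreachable")
```

For example, wrap an invocation as `call_with_backoff(lambda: runtime_client.converse(**request))`, where `request` is your own keyword-argument dictionary.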

Now available

Amazon Bedrock Custom Model Import is generally available today in Amazon Bedrock in the US-East-1 (N. Virginia) and US-West-2 (Oregon) AWS Regions. See the full Region list for future updates. To learn more, see the Custom Model Import product page and pricing page.

Give Custom Model Import a try in the Amazon Bedrock console today and send feedback to AWS re:Post for Amazon Bedrock or through your usual AWS Support contacts.

About the authors

Paras Mehra is a Senior Product Manager at AWS. He is focused on helping build Amazon SageMaker Training and Processing. In his spare time, Paras enjoys spending time with his family and road biking around the Bay Area.

Jay Pillai is a Principal Solutions Architect at Amazon Web Services. In this role, he functions as the Lead Architect, helping partners ideate, build, and launch Partner Solutions. As an Information Technology Leader, Jay specializes in artificial intelligence, generative AI, data integration, business intelligence, and user interface domains. He has 23 years of extensive experience working with several clients across the supply chain, legal technologies, real estate, financial services, insurance, payments, and market research business domains.

Shikhar Kwatra is a Sr. Partner Solutions Architect at Amazon Web Services, working with leading Global System Integrators. He has earned the title of one of the Youngest Indian Master Inventors, with over 500 patents in the AI/ML and IoT domains. Shikhar aids in architecting, building, and maintaining cost-efficient, scalable cloud environments for the organization, and supports the GSI partners in building strategic industry solutions on AWS.

Claudio Mazzoni is a Sr GenAI Specialist Solutions Architect at AWS working on world-class applications, guiding customers through their implementation of GenAI to reach their goals and improve their business outcomes. Outside of work, Claudio enjoys spending time with family, working in his garden, and cooking Uruguayan food.

Yanyan Zhang is a Senior Generative AI Data Scientist at Amazon Web Services, where she has been working on cutting-edge AI/ML technologies as a Generative AI Specialist, helping customers use GenAI to achieve their desired outcomes. Yanyan graduated from Texas A&M University with a Ph.D. in Electrical Engineering. Outside of work, she loves traveling, working out, and exploring new things.

Simon Zamarin is an AI/ML Solutions Architect whose main focus is helping customers extract value from their data assets. In his spare time, Simon enjoys spending time with family, reading sci-fi, and working on various DIY house projects.

Rupinder Grewal is a Senior AI/ML Specialist Solutions Architect with AWS. He currently focuses on model serving and MLOps on Amazon SageMaker. Prior to this role, he worked as a Machine Learning Engineer building and hosting models. Outside of work, he enjoys playing tennis and biking on mountain trails.
