Many of our customers are moving from monolithic prompts with general-purpose models to specialized compound AI systems to achieve the quality needed for production-ready GenAI apps.

In July, we launched the Agent Framework and Agent Evaluation, now used by many enterprises to build agentic apps like Retrieval Augmented Generation (RAG). Today, we’re excited to announce new features in Agent Framework that simplify the process of building agents capable of complex reasoning and performing tasks like opening support tickets, responding to emails, and making reservations. These capabilities include:

- Connecting LLMs with structured and unstructured enterprise data through shareable and governed AI tools.

- Quickly experimenting with and evaluating agents in the new playground experience.

- Seamlessly transitioning from playground to production with the new one-click code generation option.

- Continuously monitoring and evaluating LLMs and agents with AI Gateway and Agent Evaluation integration.

With these updates, we’re making it easier to build and deploy high-quality AI agents that securely interact with your organization’s systems and data.

Compound AI Systems with Mosaic AI

Databricks Mosaic AI provides a complete toolchain for governing, experimenting with, deploying, and improving compound AI systems. This release adds features that make it possible to create and deploy compound AI systems that use agentic patterns.

Centralized Governance of Tools and Agents with Unity Catalog

Almost all agentic compound AI systems rely on AI tools that extend LLM capabilities by performing tasks like retrieving enterprise data, executing calculations, or interacting with other systems. A key challenge is securely sharing and discovering AI tools for reuse while managing access control. Mosaic AI solves this by using UC Functions as tools and leveraging Unity Catalog’s governance to prevent unauthorized access and streamline tool discovery. This allows data, models, tools, and agents to be managed together within Unity Catalog through a single interface.

Unity Catalog Tools can be executed in a secure and scalable sandboxed environment, ensuring safe and efficient code execution. Users can invoke these tools within Databricks (Playground and Agent Framework) or externally via the open-source UCFunctionToolkit, offering flexibility in how they host their orchestration logic.
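To make the governance idea concrete, here is a minimal, self-contained sketch of a governed tool catalog: tools are addressed by three-part `catalog.schema.function` names, and a caller can discover or run a tool only if it has been granted execute access. This is a toy illustration, not the Unity Catalog API; `ToolRegistry`, `grant_execute`, the `support_agent` principal, and the `ml.tools.add_numbers` function are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class ToolRegistry:
    """Toy stand-in for a governed tool catalog (NOT the Unity Catalog API)."""

    tools: Dict[str, Callable[..., object]] = field(default_factory=dict)
    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def register(self, full_name: str, fn: Callable[..., object]) -> None:
        # Require a three-part name: catalog.schema.function
        if len(full_name.split(".")) != 3:
            raise ValueError("expected catalog.schema.function")
        self.tools[full_name] = fn
        self.grants.setdefault(full_name, set())

    def grant_execute(self, full_name: str, principal: str) -> None:
        # Record that `principal` may run the tool.
        self.grants[full_name].add(principal)

    def discover(self, principal: str) -> List[str]:
        # A principal only sees the tools it is allowed to run.
        return sorted(n for n, who in self.grants.items() if principal in who)

    def invoke(self, principal: str, full_name: str, **kwargs):
        # Check access before executing anything.
        if principal not in self.grants.get(full_name, set()):
            raise PermissionError(f"{principal} may not execute {full_name}")
        return self.tools[full_name](**kwargs)


registry = ToolRegistry()
registry.register("ml.tools.add_numbers", lambda a, b: a + b)  # hypothetical tool
registry.grant_execute("ml.tools.add_numbers", "support_agent")  # hypothetical principal
```

Keeping the access check in the same place as the lookup is what lets discovery and authorization stay consistent: an agent never learns about a tool it could not call.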

Rapid Experimentation with AI Playground

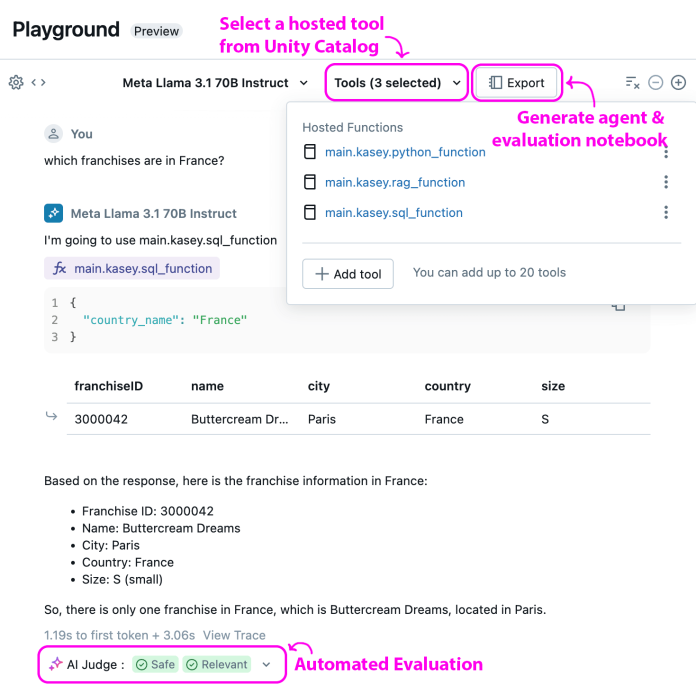

AI Playground now includes new capabilities that enable rapid testing of compound AI systems through a single interactive interface. Users can experiment with prompts, LLMs, tools, and even deployed agents. The new Tool dropdown lets users select hosted tools from Unity Catalog and compare different orchestrator models, like Llama 3.1-70B and GPT-4o (indicated by the “fx” icon), helping identify the best-performing LLM for tool interactions. Additionally, AI Playground highlights chain-of-thought reasoning in the output, making it easier for users to debug and verify results. This setup also allows for quick validation of tool functionality.

AI Playground now integrates with Mosaic AI Agent Evaluation, providing deeper insights into agent or LLM quality. Each agent-generated output is evaluated by LLM judges to generate quality metrics, which are displayed inline. When expanded, the results show the rationale behind each metric.

Easy Deployment of Agents with Model Serving

The Mosaic AI platform now includes new capabilities that provide a fast path to deployment for compound AI systems. AI Playground now has an Export button that auto-generates a Python notebook. Users can further customize their agents or deploy them as-is in Model Serving, allowing for a quick transition to production.

The auto-generated notebook (1) integrates the LLM and tools into an orchestration framework such as LangGraph (we’re starting with LangGraph but plan to support other frameworks in the future), and (2) logs all questions from the Playground session into an evaluation dataset. It also automates performance evaluation using LLM judges from Agent Evaluation. Below is an example of the auto-generated notebook:

The notebook can be deployed with Mosaic AI Model Serving, which now includes automatic authentication to downstream tools and dependencies. It also provides request, response, and agent trace logging for real-time monitoring and evaluation, enabling ops engineers to maintain quality in production and developers to iterate and improve agents offline.

Together, these features enable a seamless transition from experimentation to a production-ready agent.
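The orchestration pattern described in this section (an LLM that can request a tool, a loop that runs the tool and feeds the result back, and a record of each question kept for later evaluation) can be sketched without any framework. The code below is not the exported notebook and does not use LangGraph; `fake_llm` is a stand-in for a real model, and the message format, the `get_weather` stub, and the `request` field name are assumptions made for illustration.

```python
from typing import Callable, Dict, List


# Hypothetical tool; a real agent would call a governed function instead.
def get_weather(city: str) -> str:
    return f"Forecast for {city}: rain"


TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_llm(messages: List[dict]) -> dict:
    """Stand-in for an LLM: asks for a tool once, then answers from its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"Answer based on: {last['content']}"}
    return {
        "role": "assistant",
        "tool_call": {"name": "get_weather", "args": {"city": "Seattle"}},
    }


def run_agent(question: str, eval_rows: List[dict], max_steps: int = 5) -> str:
    # Keep every question so it can seed an evaluation dataset later.
    eval_rows.append({"request": question})
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = fake_llm(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            # No tool requested: the model has produced its final answer.
            return reply["content"]
        # Run the requested tool and hand its output back to the model.
        result = TOOLS[call["name"]](**call["args"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


eval_rows: List[dict] = []
answer = run_agent("What is the weather in Seattle?", eval_rows)
```

The `max_steps` bound is a design choice worth keeping in any real loop of this kind, since a model that keeps requesting tools would otherwise never return.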

Iterate on Production Quality with AI Gateway and Agent Evaluation

Mosaic AI Gateway’s Inference Table allows users to capture incoming requests and outgoing responses from agent production endpoints into a Unity Catalog Delta table. When MLflow tracing is enabled, the Inference Table also logs inputs and outputs for each component within an agent. This data can then be used with existing data tools for analysis and, when combined with Agent Evaluation, can help monitor quality, debug, and optimize agent-driven applications.
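As a rough illustration of the kind of analysis logged requests and responses make possible, the sketch below summarizes a few records in plain Python. The rows are made up, and the field names (`request`, `response`, `status_code`, `execution_time_ms`) and JSON payload shapes are assumptions for the example rather than a statement of the Inference Table schema; in practice the same logic would typically run as a query over the Delta table.

```python
import json
from statistics import mean

# Hypothetical logged rows; field names and payload shapes are assumptions.
rows = [
    {
        "request": json.dumps({"messages": [{"role": "user", "content": "Open a ticket"}]}),
        "response": json.dumps({"choices": [{"message": {"content": "Ticket opened."}}]}),
        "status_code": 200,
        "execution_time_ms": 840,
    },
    {
        "request": json.dumps({"messages": [{"role": "user", "content": "Book a table"}]}),
        "response": json.dumps({"error": "tool timeout"}),
        "status_code": 500,
        "execution_time_ms": 30000,
    },
]


def summarize(rows):
    """Compute a success rate, latency of successful calls, and failing questions."""
    ok = [r for r in rows if r["status_code"] == 200]
    failed_questions = [
        json.loads(r["request"])["messages"][-1]["content"]
        for r in rows
        if r["status_code"] != 200
    ]
    return {
        "success_rate": len(ok) / len(rows),
        "mean_latency_ms_ok": mean(r["execution_time_ms"] for r in ok) if ok else None,
        "failed_questions": failed_questions,
    }


summary = summarize(rows)
```

The list of failing questions is the part that feeds back into iteration: those requests are natural candidates for an evaluation dataset.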

What’s coming next?

We’re working on a new feature that enables foundation model endpoints in Model Serving to integrate enterprise data by selecting and executing tools. You can create custom tools and use this capability with any type of LLM, whether proprietary (such as GPT-4o) or open (such as Llama-3.1-70B). For example, the following single API call to the foundation model endpoint uses the LLM to process the user’s question, retrieve the relevant weather data by running the get_weather tool, and then combine this information to generate the final response.

    from openai import OpenAI

    # DATABRICKS_TOKEN is assumed to hold a Databricks access token.
    client = OpenAI(api_key=DATABRICKS_TOKEN, base_url="https://XYZ.cloud.databricks.com/serving-endpoints")

    response = client.chat.completions.create(
        model="databricks-meta-llama-3-1-70b-instruct",
        messages=[{"role": "user", "content": "What’s the upcoming week’s weather for Seattle, and is it normal for this season?"}],
        # Let the endpoint run the Unity Catalog function ml.tools.get_weather as a tool.
        tools=[{"type": "uc_function", "uc_function": {"name": "ml.tools.get_weather"}}]
    )

    print(response.choices[0].message.content)

A preview is already available to select customers. To sign up, talk to your account team about joining the “Tool Execution in Model Serving” Private Preview.
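When several functions are exposed to the model, the tools argument in the request above can be assembled from a list of function names. The helper below is a small sketch that produces entries in the same shape as that request; `uc_function_tools` is a hypothetical name, and the three-part name check is an assumption added for the example, not documented behavior of the preview.

```python
from typing import Dict, List


def uc_function_tools(names: List[str]) -> List[Dict]:
    """Build a tools list in the shape used by the request above."""
    tools = []
    for name in names:
        parts = name.split(".")
        # Assumption: functions are addressed as catalog.schema.function.
        if len(parts) != 3 or not all(parts):
            raise ValueError(f"expected catalog.schema.function, got {name!r}")
        tools.append({"type": "uc_function", "uc_function": {"name": name}})
    return tools


tools = uc_function_tools(["ml.tools.get_weather"])
```

Validating names before the request is sent turns a typo into a local error instead of a failed API call.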

Get Started Today

Build your own compound AI system today using Databricks Mosaic AI. From rapid experimentation in AI Playground to easy deployment with Model Serving to debugging with AI Gateway Inference Tables, Mosaic AI provides tools to support the entire lifecycle.

- Jump into AI Playground to quickly experiment with and evaluate AI agents [AWS | Azure]

- Quickly build custom agents using our AI Cookbook.

- Talk to your account team about joining the “Tool Execution in Model Serving” Private Preview.

- Don’t miss our virtual event in October, a great opportunity to learn about the compound AI systems our valued customers are building. Sign up here.