Clients throughout all industries are experimenting with generative AI to speed up and enhance enterprise outcomes. Generative AI is utilized in numerous use circumstances, corresponding to content material creation, personalization, clever assistants, questions and solutions, summarization, automation, cost-efficiencies, productiveness enchancment assistants, customization, innovation, and extra.

Generative AI options usually use Retrieval Augmented Technology (RAG) architectures, which increase exterior information sources for enhancing content material high quality, context understanding, creativity, domain-adaptability, personalization, transparency, and explainability.

This submit dives deep into Amazon Bedrock Data Bases, which helps with the storage and retrieval of information in vector databases for RAG-based workflows, with the target to enhance giant language mannequin (LLM) responses for inference involving a corporation’s datasets.

Advantages of vector knowledge shops

A number of challenges come up when dealing with complicated eventualities coping with knowledge like knowledge volumes, multi-dimensionality, multi-modality, and different interfacing complexities. For instance:

- Information corresponding to photos, textual content, and audio have to be represented in a structured and environment friendly method

- Understanding the semantic similarity between knowledge factors is crucial in generative AI duties like pure language processing (NLP), picture recognition, and advice programs

- As the amount of information continues to develop quickly, scalability turns into a major problem

- Conventional databases might wrestle to effectively deal with the computational calls for of generative AI duties, corresponding to coaching complicated fashions or performing inference on giant datasets

- Generative AI functions often require looking out and retrieving comparable objects or patterns inside datasets, corresponding to discovering comparable photos or recommending related content material

- Generative AI options usually contain integrating a number of parts and applied sciences, corresponding to deep studying frameworks, knowledge processing pipelines, and deployment environments

Vector databases function a basis in addressing these knowledge wants for generative AI options, enabling environment friendly illustration, semantic understanding, scalability, interoperability, search and retrieval, and mannequin deployment. They contribute to the effectiveness and feasibility of generative AI functions throughout numerous domains. Vector databases supply the next capabilities:

- Present a method to characterize knowledge in a structured and environment friendly method, enabling computational processing and manipulation

- Allow the measurement of semantic similarity by encoding knowledge into vector representations, permitting for comparability and evaluation

- Deal with large-scale datasets effectively, enabling processing and evaluation of huge quantities of knowledge in a scalable method

- Present a typical interface for storing and accessing knowledge representations, facilitating interoperability between totally different parts of the AI system

- Help environment friendly search and retrieval operations, enabling fast and correct exploration of enormous datasets

To assist implement generative AI-based functions securely at scale, AWS gives Amazon Bedrock, a totally managed service that permits deploying generative AI functions that use high-performing LLMs from main AI startups and Amazon. With the Amazon Bedrock serverless expertise, you possibly can experiment with and consider high basis fashions (FMs) on your use circumstances, privately customise them along with your knowledge utilizing strategies corresponding to fine-tuning and RAG, and construct brokers that run duties utilizing enterprise programs and knowledge sources.

On this submit, we dive deep into the vector database choices accessible as a part of Amazon Bedrock Data Bases and the relevant use circumstances, and take a look at working code examples. Amazon Bedrock Data Bases permits sooner time to market by abstracting from the heavy lifting of constructing pipelines and offering you with an out-of-the-box RAG answer to scale back the construct time on your utility.

Data base programs with RAG

RAG optimizes LLM responses by referencing authoritative information bases outdoors of its coaching knowledge sources earlier than producing a response. Out of the field, LLMs are skilled on huge volumes of information and use billions of parameters to generate authentic output for duties like answering questions, translating languages, and finishing sentences. RAG extends the present highly effective capabilities of LLMs to particular domains or a corporation’s inside information base, all with out the necessity to retrain the mannequin. It’s a cheap strategy to enhancing an LLM’s output so it stays related, correct, and helpful in numerous contexts.

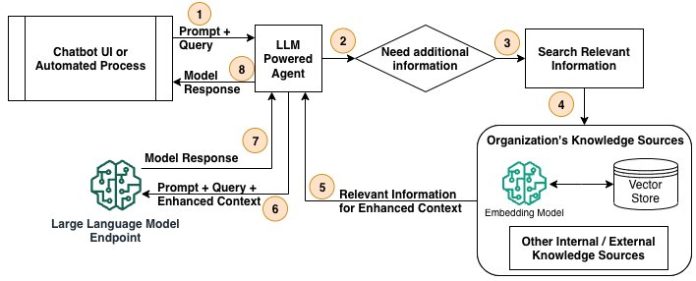

The next diagram depicts the high-level steps of a RAG course of to entry a corporation’s inside or exterior information shops and go the information to the LLM.

The workflow consists of the next steps:

- Both a person by means of a chatbot UI or an automatic course of points a immediate and requests a response from the LLM-based utility.

- An LLM-powered agent, which is liable for orchestrating steps to reply to the request, checks if further info is required from information sources.

- The agent decides which information supply to make use of.

- The agent invokes the method to retrieve info from the information supply.

- The related info (enhanced context) from the information supply is returned to the agent.

- The agent provides the improved context from the information supply to the immediate and passes it to the LLM endpoint for the response.

- The LLM response is handed again to the agent.

- The agent returns the LLM response to the chatbot UI or the automated course of.

Use circumstances for vector databases for RAG

Within the context of RAG architectures, the exterior information can come from relational databases, search and doc shops, or different knowledge shops. Nevertheless, merely storing and looking out by means of this exterior knowledge utilizing conventional strategies (corresponding to key phrase search or inverted indexes) will be inefficient and may not seize the true semantic relationships between knowledge factors. Vector databases are really useful for RAG use circumstances as a result of they allow similarity search and dense vector representations.

The next are some eventualities the place loading knowledge right into a vector database will be advantageous for RAG use circumstances:

- Massive information bases – When coping with in depth information bases containing thousands and thousands or billions of paperwork or passages, vector databases can present environment friendly similarity search capabilities.

- Unstructured or semi-structured knowledge – Vector databases are significantly well-suited for dealing with unstructured or semi-structured knowledge, corresponding to textual content paperwork, webpages, or pure language content material. By changing the textual knowledge into dense vector representations, vector databases can successfully seize the semantic relationships between paperwork or passages, enabling extra correct retrieval.

- Multilingual information bases – In RAG programs that must deal with information bases spanning a number of languages, vector databases will be advantageous. By utilizing multilingual language fashions or cross-lingual embeddings, vector databases can facilitate efficient retrieval throughout totally different languages, enabling cross-lingual information switch.

- Semantic search and relevance rating – Vector databases excel at semantic search and relevance rating duties. By representing paperwork or passages as dense vectors, the retrieval element can use vector similarity measures to determine essentially the most semantically related content material.

- Personalised and context-aware retrieval – Vector databases can assist personalised and context-aware retrieval in RAG programs. By incorporating person profiles, preferences, or contextual info into the vector representations, the retrieval element can prioritize and floor essentially the most related content material for a selected person or context.

Though vector databases supply benefits in these eventualities, their implementation and effectiveness might depend upon components corresponding to the particular vector embedding strategies used, the standard and illustration of the information, and the computational sources accessible for indexing and retrieval operations. With Amazon Bedrock Data Bases, you can provide FMs and brokers contextual info out of your firm’s non-public knowledge sources for RAG to ship extra related, correct, and customised responses.

Amazon Bedrock Data Bases with RAG

Amazon Bedrock Data Bases is a totally managed functionality that helps with the implementation of the whole RAG workflow, from ingestion to retrieval and immediate augmentation, with out having to construct customized integrations to knowledge sources and handle knowledge flows. Data bases are important for numerous use circumstances, corresponding to buyer assist, product documentation, inside information sharing, and decision-making programs. A RAG workflow with information bases has two predominant steps: knowledge preprocessing and runtime execution.

The next diagram illustrates the information preprocessing workflow.

As a part of preprocessing, info (structured knowledge, unstructured knowledge, or paperwork) from knowledge sources is first break up into manageable chunks. The chunks are transformed to embeddings utilizing embeddings fashions accessible in Amazon Bedrock. Lastly, the embeddings are written right into a vector database index whereas sustaining a mapping to the unique doc. These embeddings are used to find out semantic similarity between queries and textual content from the information sources. All these steps are managed by Amazon Bedrock.

The next diagram illustrates the workflow for the runtime execution.

In the course of the inference part of the LLM, when the agent determines that it wants further info, it reaches out to information bases. The method converts the person question into vector embeddings utilizing an Amazon Bedrock embeddings mannequin, queries the vector database index to seek out semantically comparable chunks to the person’s question, converts the retrieved chunks to textual content and augments the person question, after which responds again to the agent.

Embeddings fashions are wanted within the preprocessing part to retailer knowledge in vector databases and through the runtime execution part to generate embeddings for the person question to look the vector database index. Embeddings fashions map high-dimensional and sparse knowledge like textual content into dense vector representations to be effectively saved and processed by vector databases, and encode the semantic which means and relationships of information into the vector area to allow significant similarity searches. These fashions assist mapping totally different knowledge sorts like textual content, photos, audio, and video into the identical vector area to allow multi-modal queries and evaluation. Amazon Bedrock Data Bases gives industry-leading embeddings fashions to allow use circumstances corresponding to semantic search, RAG, classification, and clustering, to call a couple of, and gives multilingual assist as effectively.

Vector database choices with Amazon Bedrock Data Bases

On the time of penning this submit, Amazon Bedrock Data Bases gives 5 integration choices: the Vector Engine for Amazon OpenSearch Serverless, Amazon Aurora, MongoDB Atlas, Pinecone, and Redis Enterprise Cloud, with extra vector database choices to return. On this submit, we talk about use circumstances, options, and steps to arrange and retrieve info utilizing these vector databases. Amazon Bedrock makes it easy to undertake any of those selections by offering a typical set of APIs, industry-leading embedding fashions, safety, governance, and observability.

Function of metadata whereas indexing knowledge in vector databases

Metadata performs a vital position when loading paperwork right into a vector knowledge retailer in Amazon Bedrock. It gives further context and details about the paperwork, which can be utilized for numerous functions, corresponding to filtering, sorting, and enhancing search capabilities.

The next are some key makes use of of metadata when loading paperwork right into a vector knowledge retailer:

- Doc identification – Metadata can embody distinctive identifiers for every doc, corresponding to doc IDs, URLs, or file names. These identifiers can be utilized to uniquely reference and retrieve particular paperwork from the vector knowledge retailer.

- Content material categorization – Metadata can present details about the content material or class of a doc, corresponding to the subject material, area, or subject. This info can be utilized to prepare and filter paperwork based mostly on particular classes or domains.

- Doc attributes – Metadata can retailer further attributes associated to the doc, such because the creator, publication date, language, or different related info. These attributes can be utilized for filtering, sorting, or faceted search inside the vector knowledge retailer.

- Entry management – Metadata can embody details about entry permissions or safety ranges related to a doc. This info can be utilized to manage entry to delicate or restricted paperwork inside the vector knowledge retailer.

- Relevance scoring – Metadata can be utilized to reinforce the relevance scoring of search outcomes. For instance, if a person searches for paperwork inside a selected date vary or authored by a specific particular person, the metadata can be utilized to prioritize and rank essentially the most related paperwork.

- Information enrichment – Metadata can be utilized to counterpoint the vector representations of paperwork by incorporating further contextual info. This may probably enhance the accuracy and high quality of search outcomes.

- Information lineage and auditing – Metadata can present details about the provenance and lineage of paperwork, such because the supply system, knowledge ingestion pipeline, or different transformations utilized to the information. This info will be useful for knowledge governance, auditing, and compliance functions.

Stipulations

Full the steps on this part to arrange the prerequisite sources and configurations.

Configure Amazon SageMaker Studio

Step one is to arrange an Amazon SageMaker Studio pocket book to run the code for this submit. You may arrange the pocket book in any AWS Area the place Amazon Bedrock Data Bases is accessible.

- Full the conditions to arrange Amazon SageMaker.

- Full the fast setup or customized setup to allow your SageMaker Studio area and person profile.

You additionally want an AWS Id and Entry Administration (IAM) position assigned to the SageMaker Studio area. You may determine the position on the SageMaker console. On the Domains web page, open your area. The IAM position ARN is listed on the Area settings tab.

The position wants permissions for IAM, Amazon Relational Database Service (Amazon RDS), Amazon Bedrock, AWS Secrets and techniques Supervisor, Amazon Easy Storage Service (Amazon S3), and Amazon OpenSearch Serverless. - Modify the position permissions so as to add the next insurance policies:

IAMFullAccessAmazonRDSFullAccessAmazonBedrockFullAccessSecretsManagerReadWriteAmazonRDSDataFullAccessAmazonS3FullAccess- The next inline coverage:

- On the SageMaker console, select Studio within the navigation pane.

- Select your person profile and select Open Studio.

This may open a brand new browser tab for SageMaker Studio Traditional.

- Run the SageMaker Studio utility.

- When the applying is working, select Open.

JupyterLab will open in a brand new tab. - Obtain the pocket book file to make use of on this submit.

- Select the file icon within the navigation pane, then select the add icon, and add the pocket book file.

- Depart the picture, kernel, and occasion kind as default and select Choose.

Request Amazon Bedrock mannequin entry

Full the next steps to request entry to the embeddings mannequin in Amazon Bedrock:

- On the Amazon Bedrock console, select Mannequin entry within the navigation pane.

- Select Allow particular fashions.

- Choose the Titan Textual content Embeddings V2 mannequin.

- Select Subsequent and full the entry request.

Import dependencies

Open the pocket book file Bedrock_Knowledgebases_VectorDB.ipynb and run Step 1 to import dependencies for this submit and create Boto3 shoppers:

Create an S3 bucket

You should use the next code to create an S3 bucket to retailer the supply knowledge on your vector database, or use an current bucket. Should you create a brand new bucket, be sure to comply with your group’s finest practices and tips.

Arrange pattern knowledge

Use the next code to arrange the pattern knowledge for this submit, which would be the enter for the vector database:

Configure the IAM position for Amazon Bedrock

Use the next code to outline the perform to create the IAM position for Amazon Bedrock, and the features to connect insurance policies associated to Amazon OpenSearch Service and Aurora:

Use the next code to create the IAM position for Amazon Bedrock, which you’ll use whereas creating the information base:

Combine with OpenSearch Serverless

The Vector Engine for Amazon OpenSearch Serverless is an on-demand serverless configuration for OpenSearch Service. As a result of it’s serverless, it removes the operational complexities of provisioning, configuring, and tuning your OpenSearch clusters. With OpenSearch Serverless, you possibly can search and analyze a big quantity of information with out having to fret concerning the underlying infrastructure and knowledge administration.

The next diagram illustrates the OpenSearch Serverless structure. OpenSearch Serverless compute capability for knowledge ingestion, looking out, and querying is measured in OpenSearch Compute Items (OCUs).

The vector search assortment kind in OpenSearch Serverless gives a similarity search functionality that’s scalable and excessive performing. This makes it a well-liked choice for a vector database when utilizing Amazon Bedrock Data Bases, as a result of it makes it easy to construct trendy machine studying (ML) augmented search experiences and generative AI functions with out having to handle the underlying vector database infrastructure. Use circumstances for OpenSearch Serverless vector search collections embody picture searches, doc searches, music retrieval, product suggestions, video searches, location-based searches, fraud detection, and anomaly detection. The vector engine gives distance metrics corresponding to Euclidean distance, cosine similarity, and dot product similarity. You may retailer fields with numerous knowledge sorts for metadata, corresponding to numbers, Booleans, dates, key phrases, and geopoints. You can even retailer fields with textual content for descriptive info so as to add extra context to saved vectors. Collocating the information sorts reduces complexity, will increase maintainability, and avoids knowledge duplication, model compatibility challenges, and licensing points.

The next code snippets arrange an OpenSearch Serverless vector database and combine it with a information base in Amazon Bedrock:

- Create an OpenSearch Serverless vector assortment.

- Create an index within the assortment; this index can be managed by Amazon Bedrock Data Bases:

- Create a information base in Amazon Bedrock pointing to the OpenSearch Serverless vector assortment and index:

- Create a knowledge supply for the information base:

- Begin an ingestion job for the information base pointing to OpenSearch Serverless to generate vector embeddings for knowledge in Amazon S3:

Combine with Aurora pgvector

Aurora gives pgvector integration, which is an open supply extension for PostgreSQL that provides the power to retailer and search over ML-generated vector embeddings. This lets you use Aurora for generative AI RAG-based use circumstances by storing vectors with the remainder of the information. The next diagram illustrates the pattern structure.

Use circumstances for Aurora pgvector embody functions which have necessities for ACID compliance, point-in-time restoration, joins, and extra. The next is a pattern code snippet to configure Aurora along with your information base in Amazon Bedrock:

- Create an Aurora DB occasion (this code creates a managed DB occasion, however you possibly can create a serverless occasion as effectively). Determine the safety group ID and subnet IDs on your VPC earlier than working the next step and supply the suitable values within the

vpc_security_group_idsand SubnetIds variables: - On the Amazon RDS console, affirm the Aurora database standing reveals as Accessible.

- Create the vector extension, schema, and vector desk within the Aurora database:

- Create a information base in Amazon Bedrock pointing to the Aurora database and desk:

- Create a knowledge supply for the information base:

- Begin an ingestion job on your information base pointing to the Aurora pgvector desk to generate vector embeddings for knowledge in Amazon S3:

Combine with MongoDB Atlas

MongoDB Atlas Vector Search, when built-in with Amazon Bedrock, can function a strong and scalable information base to construct generative AI functions and implement RAG workflows. By utilizing the versatile doc knowledge mannequin of MongoDB Atlas, organizations can characterize and question complicated information entities and their relationships inside Amazon Bedrock. The mixture of MongoDB Atlas and Amazon Bedrock gives a robust answer for constructing and sustaining a centralized information repository.

To make use of MongoDB, you possibly can create a cluster and vector search index. The native vector search capabilities embedded in an operational database simplify constructing refined RAG implementations. MongoDB permits you to retailer, index, and question vector embeddings of your knowledge with out the necessity for a separate bolt-on vector database.

There are three pricing choices accessible for MongoDB Atlas by means of AWS Market: MongoDB Atlas (pay-as-you-go), MongoDB Atlas Enterprise, and MongoDB Atlas for Authorities. Seek advice from the MongoDB Atlas Vector Search documentation to arrange a MongoDB vector database and add it to your information base.

Combine with Pinecone

Pinecone is a sort of vector database from Pinecone Methods Inc. With Amazon Bedrock Data Bases, you possibly can combine your enterprise knowledge into Amazon Bedrock utilizing Pinecone because the absolutely managed vector database to construct generative AI functions. Pinecone is very performant; it might probably velocity by means of knowledge in milliseconds. You should use its metadata filters and sparse-dense index assist for top-notch relevance, attaining fast, correct, and grounded outcomes throughout various search duties. Pinecone is enterprise prepared; you possibly can launch and scale your AI answer while not having to keep up infrastructure, monitor companies, or troubleshoot algorithms. Pinecone adheres to the safety and operational necessities of enterprises.

There are two pricing choices accessible for Pinecone in AWS Market: Pinecone Vector Database – Pay As You Go Pricing (serverless) and Pinecone Vector Database – Annual Commit (managed). Seek advice from the Pinecone documentation to arrange a Pinecone vector database and add it to your information base.

Combine with Redis Enterprise Cloud

Redis Enterprise Cloud lets you arrange, handle, and scale a distributed in-memory knowledge retailer or cache setting within the cloud to assist functions meet low latency necessities. Vector search is among the answer choices accessible in Redis Enterprise Cloud, which solves for low latency use circumstances associated to RAG, semantic caching, doc search, and extra. Amazon Bedrock natively integrates with Redis Enterprise Cloud vector search.

There are two pricing choices accessible for Redis Enterprise Cloud by means of AWS Market: Redis Cloud Pay As You Go Pricing and Redis Cloud – Annual Commits. Seek advice from the Redis Enterprise Cloud documentation to arrange vector search and add it to your information base.

Work together with Amazon Bedrock information bases

Amazon Bedrock gives a typical set of APIs to work together with information bases:

- Retrieve API – Queries the information base and retrieves info from it. This can be a Bedrock Data Base particular API, it helps with use circumstances the place solely vector-based looking out of paperwork is required with out mannequin inferences.

- Retrieve and Generate API – Queries the information base and makes use of an LLM to generate responses based mostly on the retrieved outcomes.

The next code snippets present how you can use the Retrieve API from the OpenSearch Serverless vector database’s index and the Aurora pgvector desk:

- Retrieve knowledge from the OpenSearch Serverless vector database’s index:

- Retrieve knowledge from the Aurora pgvector desk:

Clear up

Whenever you’re performed with this answer, clear up the sources you created:

- Amazon Bedrock information bases for OpenSearch Serverless and Aurora

- OpenSearch Serverless assortment

- Aurora DB occasion

- S3 bucket

- SageMaker Studio area

- Amazon Bedrock service position

- SageMaker Studio area position

Conclusion

On this submit, we supplied a high-level introduction to generative AI use circumstances and using RAG workflows to enhance your group’s inside or exterior information shops. We mentioned the significance of vector databases and RAG architectures to allow similarity search and why dense vector representations are useful. We additionally went over Amazon Bedrock Data Bases, which gives frequent APIs, industry-leading governance, observability, and safety to allow vector databases utilizing totally different choices like AWS native and accomplice merchandise by means of AWS Market. We additionally dived deep into a couple of of the vector database choices with code examples to elucidate the implementation steps.

Check out the code examples on this submit to implement your personal RAG answer utilizing Amazon Bedrock Data Bases, and share your suggestions and questions within the feedback part.

Concerning the Authors

Vishwa Gupta is a Senior Information Architect with AWS Skilled Providers. He helps prospects implement generative AI, machine studying, and analytics options. Exterior of labor, he enjoys spending time with household, touring, and making an attempt new meals.

Vishwa Gupta is a Senior Information Architect with AWS Skilled Providers. He helps prospects implement generative AI, machine studying, and analytics options. Exterior of labor, he enjoys spending time with household, touring, and making an attempt new meals.

Isaac Privitera is a Principal Information Scientist with the AWS Generative AI Innovation Heart, the place he develops bespoke generative AI-based options to deal with prospects’ enterprise issues. His major focus lies in constructing accountable AI programs, utilizing strategies corresponding to RAG, multi-agent programs, and mannequin fine-tuning. When not immersed on this planet of AI, Isaac will be discovered on the golf course, having fun with a soccer sport, or mountain climbing trails along with his loyal canine companion, Barry.

Isaac Privitera is a Principal Information Scientist with the AWS Generative AI Innovation Heart, the place he develops bespoke generative AI-based options to deal with prospects’ enterprise issues. His major focus lies in constructing accountable AI programs, utilizing strategies corresponding to RAG, multi-agent programs, and mannequin fine-tuning. When not immersed on this planet of AI, Isaac will be discovered on the golf course, having fun with a soccer sport, or mountain climbing trails along with his loyal canine companion, Barry.

Abhishek Madan is a Senior GenAI Strategist with the AWS Generative AI Innovation Heart. He helps inside groups and prospects in scaling generative AI, machine studying, and analytics options. Exterior of labor, he enjoys taking part in journey sports activities and spending time with household.

Abhishek Madan is a Senior GenAI Strategist with the AWS Generative AI Innovation Heart. He helps inside groups and prospects in scaling generative AI, machine studying, and analytics options. Exterior of labor, he enjoys taking part in journey sports activities and spending time with household.

Ginni Malik is a Senior Information & ML Engineer with AWS Skilled Providers. She assists prospects by architecting enterprise knowledge lake and ML options to scale their knowledge analytics within the cloud.

Ginni Malik is a Senior Information & ML Engineer with AWS Skilled Providers. She assists prospects by architecting enterprise knowledge lake and ML options to scale their knowledge analytics within the cloud.

Satish Sarapuri is a Sr. Information Architect, Information Lake at AWS. He helps enterprise-level prospects construct high-performance, extremely accessible, cost-effective, resilient, and safe generative AI, knowledge mesh, knowledge lake, and analytics platform options on AWS by means of which prospects could make data-driven selections to achieve impactful outcomes for his or her enterprise, and helps them on their digital and knowledge transformation journey. In his spare time, he enjoys spending time along with his household and taking part in tennis.

Satish Sarapuri is a Sr. Information Architect, Information Lake at AWS. He helps enterprise-level prospects construct high-performance, extremely accessible, cost-effective, resilient, and safe generative AI, knowledge mesh, knowledge lake, and analytics platform options on AWS by means of which prospects could make data-driven selections to achieve impactful outcomes for his or her enterprise, and helps them on their digital and knowledge transformation journey. In his spare time, he enjoys spending time along with his household and taking part in tennis.