Public speaking is a vital skill in today’s world, whether it’s for professional presentations, academic settings, or personal growth. By practicing it regularly, individuals can build confidence, manage anxiety in a healthy way, and develop effective communication skills leading to successful public speaking engagements. Now, with the advent of large language models (LLMs), you can use generative AI-powered virtual assistants to provide real-time analysis of speech, identification of areas for improvement, and suggestions for enhancing speech delivery.

In this post, we present an Amazon Bedrock powered virtual assistant that can transcribe presentation audio and examine it for language use, grammatical errors, filler words, and repetition of words and sentences to provide recommendations as well as suggest a curated version of the speech to elevate the presentation. This solution helps refine communication skills and empowers individuals to become more effective and impactful public speakers. Organizations across various sectors, including corporations, educational institutions, government entities, and social media personalities, can use this solution to provide automated coaching for their employees, students, and public speaking engagements.

In the following sections, we walk you through constructing a scalable, serverless, end-to-end Public Speaking Mentor AI Assistant with Amazon Bedrock, Amazon Transcribe, and AWS Step Functions using provided sample code. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Overview of solution

The solution consists of four main components:

- An Amazon Cognito user pool for user authentication. Authenticated users are granted access to the Public Speaking Mentor AI Assistant web portal to upload audio and video recordings.

- A simple web portal created using Streamlit to upload audio and video recordings. The uploaded files are stored in an Amazon Simple Storage Service (Amazon S3) bucket for later processing, retrieval, and analysis.

- A Step Functions standard workflow to orchestrate converting the audio to text using Amazon Transcribe and then invoking Amazon Bedrock with AI prompt chaining to generate speech recommendations and rewrite suggestions.

- Amazon Simple Notification Service (Amazon SNS) to send an email notification to the user with Amazon Bedrock generated recommendations.

This solution uses Amazon Transcribe for speech-to-text conversion. When an audio or video file is uploaded, Amazon Transcribe transcribes the speech into text. This text is passed as an input to Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock. The solution sends two prompts to Amazon Bedrock: one to generate feedback and recommendations on language usage, grammar, filler words, repetition, and more, and another to obtain a curated version of the original speech. Prompt chaining is performed with Amazon Bedrock for these prompts. The solution then consolidates the outputs, displays recommendations on the user’s webpage, and emails the results.
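To make the two-prompt chain concrete, here is a minimal sketch of how the two request bodies could be assembled in the Anthropic Messages format that Amazon Bedrock accepts for Claude models. The prompt wording, the helper names, and the default token limit are assumptions made for illustration; they are not taken from the sample repository, and the model ID should be confirmed for your Region.

```python
# Assumed model ID for Claude 3.5 Sonnet on Amazon Bedrock; confirm it for your Region.
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"

# Hypothetical prompt wording, not taken from the sample repository.
FEEDBACK_SYSTEM_PROMPT = (
    "You are a public speaking coach. Review the speech transcript and point out "
    "incorrect grammar, repeated words or content, and filler words."
)
REWRITE_INSTRUCTION = (
    "Using your feedback above, rewrite the speech so that it addresses each point."
)


def _message(role, text):
    """Wrap plain text as one message in the Anthropic Messages format."""
    return {"role": role, "content": [{"type": "text", "text": text}]}


def build_body(messages, system_prompt=FEEDBACK_SYSTEM_PROMPT, max_tokens=2048):
    """Assemble a request body for a Claude model on Amazon Bedrock."""
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": messages,
    }


def feedback_request(transcript):
    """First prompt: ask for recommendations on the transcribed speech."""
    return build_body([_message("user", transcript)])


def rewrite_request(transcript, feedback):
    """Second prompt: chain the transcript and the earlier feedback into a rewrite request."""
    return build_body(
        [
            _message("user", transcript),
            _message("assistant", feedback),
            _message("user", REWRITE_INSTRUCTION),
        ]
    )


def extract_text(response_payload):
    """Return the text of the first content block in a parsed Claude response."""
    return response_payload["content"][0]["text"]
```

With boto3, each body would be serialized with `json.dumps` and passed to the `bedrock-runtime` client's `invoke_model(modelId=MODEL_ID, body=...)`. The parsed response of the first call supplies the `feedback` argument of the second.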

The generative AI capabilities of Amazon Bedrock effectively course of person speech inputs. It makes use of pure language processing to research the speech and gives tailor-made suggestions. Utilizing LLMs skilled on intensive knowledge, Amazon Bedrock generates curated speech outputs to reinforce the presentation supply.

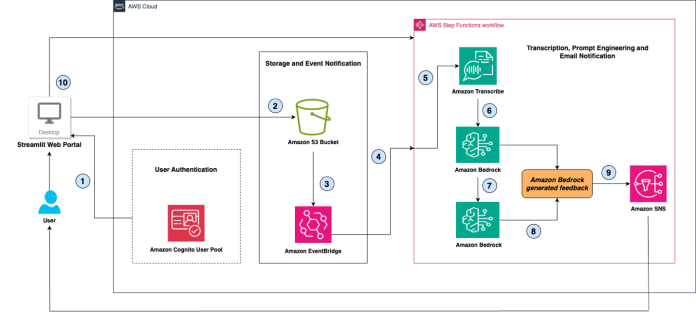

The next diagram exhibits our resolution structure.

Let’s explore the architecture step by step:

- The user authenticates to the Public Speaking Mentor AI Assistant web portal (a Streamlit application hosted on the user’s local desktop) using the Amazon Cognito user pool authentication mechanism.

- The user uploads an audio or video file to the web portal, which is stored in an S3 bucket encrypted using server-side encryption with Amazon S3 managed keys (SSE-S3).

- The S3 service triggers an `s3:ObjectCreated` event for each file that is saved to the bucket.

- Amazon EventBridge invokes the Step Functions state machine based on this event. Because the state machine execution could exceed 5 minutes, we use a standard workflow. Step Functions state machine logs are sent to Amazon CloudWatch for logging and troubleshooting purposes.

- The Step Functions workflow uses AWS SDK integrations to invoke Amazon Transcribe and initiates a `StartTranscriptionJob`, passing the S3 bucket, prefix path, and object name in the `MediaFileUri` parameter. The workflow waits for the transcription job to complete and saves the transcript in another S3 bucket prefix path.

- The Step Functions workflow uses the optimized integrations to invoke the Amazon Bedrock `InvokeModel` API, which specifies the Anthropic Claude 3.5 Sonnet model, the system prompt, maximum tokens, and the transcribed speech text as inputs to the API. The system prompt instructs the Anthropic Claude 3.5 Sonnet model to provide suggestions on how to improve the speech by identifying incorrect grammar, repetitions of words or content, use of filler words, and other recommendations.

- After receiving a response from Amazon Bedrock, the Step Functions workflow uses prompt chaining to craft another input for Amazon Bedrock, incorporating the previous transcribed speech and the model’s previous response, and requesting the model to provide suggestions for rewriting the speech.

- The workflow combines these outputs from Amazon Bedrock and crafts a message that is displayed on the logged-in user’s webpage.

- The Step Functions workflow invokes the Amazon SNS `Publish` optimized integration to send an email to the user with the Amazon Bedrock generated message.

- The Streamlit application queries Step Functions to display output results on the Amazon Cognito user’s webpage.
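As an illustration of the transcription step, the following sketch turns an EventBridge "Object Created" event from Amazon S3 into the parameters of a `StartTranscriptionJob` call. The function name, the output prefix, and the default language code are assumptions; the sample solution performs this mapping inside the Step Functions state machine rather than in Python.

```python
import re
import uuid


def transcription_job_params(event, output_bucket, output_prefix="transcripts/",
                             language_code="en-US"):
    """Map an S3 'Object Created' EventBridge event to StartTranscriptionJob parameters."""
    detail = event["detail"]
    bucket = detail["bucket"]["name"]
    key = detail["object"]["key"]

    # Transcription job names may only contain letters, digits, '.', '_' and '-'
    # (at most 200 characters), so sanitize the file name and add a short unique suffix.
    file_name = key.rsplit("/", 1)[-1]
    stem = re.sub(r"[^0-9a-zA-Z._-]", "-", file_name)[:190]
    job_name = f"{stem}-{uuid.uuid4().hex[:8]}"

    return {
        "TranscriptionJobName": job_name,
        "LanguageCode": language_code,  # assumed; the source does not name a language
        "Media": {"MediaFileUri": f"s3://{bucket}/{key}"},
        "OutputBucketName": output_bucket,
        "OutputKey": f"{output_prefix}{job_name}.json",
    }
```

With boto3, the returned dictionary could be passed directly as `boto3.client("transcribe").start_transcription_job(**params)`.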

Prerequisites

To implement the Public Speaking Mentor AI Assistant solution, you should have the following prerequisites:

- An AWS account with sufficient AWS Identity and Access Management (IAM) permissions for the following AWS services to deploy the solution and run the Streamlit application web portal:

    - Amazon Bedrock

    - AWS CloudFormation

    - Amazon CloudWatch

    - Amazon Cognito

    - Amazon EventBridge

    - Amazon Transcribe

    - Amazon SNS

    - Amazon S3

    - AWS Step Functions

- Model access enabled for Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock in your desired AWS Region.

- A local desktop environment with the AWS Command Line Interface (AWS CLI), Python 3.8 or above, the AWS Cloud Development Kit (AWS CDK) for Python, and Git installed.

- The AWS CLI set up with the necessary AWS credentials and desired Region.

Deploy the Public Speaking Mentor AI Assistant solution

Complete the following steps to deploy the Public Speaking Mentor AI Assistant AWS infrastructure:

- Clone the repository to your local desktop environment with the following command:

- Change to the `app` directory in the cloned repository:

- Create a Python virtual environment:

- Activate your virtual environment:

- Install the required dependencies:

- Optionally, synthesize the CloudFormation template using the AWS CDK:

You may need to perform a one-time AWS CDK bootstrapping using the following command. See AWS CDK bootstrapping for more details.

- Deploy the CloudFormation template in your AWS account and selected Region:

After the AWS CDK is deployed successfully, you can follow the steps in the next section to create an Amazon Cognito user.

Create an Amazon Cognito user for authentication

Complete the following steps to create a user in the Amazon Cognito user pool to access the web portal. The user created doesn’t need AWS permissions.

- Sign in to the AWS Management Console of your account and select the Region for your deployment.

- On the Amazon Cognito console, choose User pools in the navigation pane.

- Choose the user pool created by the CloudFormation template. (The user pool name should have the prefix `PSMBUserPool` followed by a string of random characters as one word.)

- Choose Create user.

- Enter a user name and password, then choose Create user.
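If you prefer to script this step, the console actions above could be approximated with the Amazon Cognito identity provider API. The sketch below is an assumption-laden alternative to the console flow, not part of the sample code: the helper names are hypothetical, the pool lookup reads only the first page of results, and setting the password as permanent is a choice made here for convenience.

```python
def find_user_pool_id(cognito, name_prefix="PSMBUserPool"):
    """Return the ID of the first user pool whose name starts with the given prefix."""
    # Only the first page (up to 60 pools) is inspected in this sketch.
    pools = cognito.list_user_pools(MaxResults=60).get("UserPools", [])
    for pool in pools:
        if pool["Name"].startswith(name_prefix):
            return pool["Id"]
    raise LookupError(f"No user pool found with prefix {name_prefix!r}")


def create_portal_user(cognito, username, password):
    """Create a web portal user and give it a permanent password."""
    pool_id = find_user_pool_id(cognito)
    # Suppress the invitation message because the password is set explicitly below.
    cognito.admin_create_user(
        UserPoolId=pool_id, Username=username, MessageAction="SUPPRESS"
    )
    cognito.admin_set_user_password(
        UserPoolId=pool_id, Username=username, Password=password, Permanent=True
    )
    return pool_id
```

The `cognito` argument is expected to be a `boto3.client("cognito-idp")` client created for the Region of your deployment.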

Subscribe to an SNS topic for email notifications

Complete the following steps to subscribe to an SNS topic to receive speech recommendation email notifications:

- Sign in to the console of your account and select the Region for your deployment.

- On the Amazon SNS console, choose Topics in the navigation pane.

- Choose the topic created by the CloudFormation template. (The name of the topic should look like `InfraStack-PublicSpeakingMentorAIAssistantTopic` followed by a string of random characters as one word.)

- Choose Create subscription.

- For Protocol, choose Email.

- For Endpoint, enter your email address.

- Choose Create subscription.
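The same subscription could also be created programmatically. The following sketch is a hypothetical helper, not part of the sample code. It looks for the topic by the name prefix mentioned above, reads only the first page of topics, and creates an email subscription. As with the console flow, Amazon SNS sends a confirmation email that must be accepted before notifications are delivered.

```python
TOPIC_PREFIX = "InfraStack-PublicSpeakingMentorAIAssistantTopic"


def subscribe_email(sns, email, topic_name_prefix=TOPIC_PREFIX):
    """Subscribe an email address to the first topic whose name matches the prefix."""
    for topic in sns.list_topics().get("Topics", []):
        # A topic ARN ends with the topic name: arn:aws:sns:<region>:<account>:<name>
        topic_name = topic["TopicArn"].rsplit(":", 1)[-1]
        if topic_name.startswith(topic_name_prefix):
            sns.subscribe(TopicArn=topic["TopicArn"], Protocol="email", Endpoint=email)
            return topic["TopicArn"]
    raise LookupError(f"No SNS topic found with prefix {topic_name_prefix!r}")
```

The `sns` argument is expected to be a `boto3.client("sns")` client for the Region of your deployment.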

Run the Streamlit application to access the web portal

Complete the following steps to run the Streamlit application to access the Public Speaking Mentor AI Assistant web portal:

- Change the directory to `webapp` inside the `app` directory:

- Launch the Streamlit server on port 8080:

- Make note of the Streamlit application URL for further use. Depending on your environment setup, you can choose one of the three URLs (Local, Network, or External) provided by the Streamlit server’s running process.

- Make sure incoming traffic on `port 8080` is allowed on your local machine to access the Streamlit application URL.

Use the Public Speaking Mentor AI Assistant

Complete the following steps to use the Public Speaking Mentor AI Assistant to improve your speech:

- In your browser (Google Chrome, preferably), open the Streamlit application URL that you noted in the previous steps.

- Log in to the web portal using the Amazon Cognito user name and password created earlier for authentication.

- Choose Browse files to locate and choose your recording.

- Choose Upload File to upload your file to an S3 bucket.

As soon as the file upload finishes, the Public Speaking Mentor AI Assistant processes the audio transcription and prompt engineering steps to generate speech recommendations and rewrite results.

When the processing is complete, you can see the Speech Recommendations and Speech Rewrite sections on the webpage as well as in your email through Amazon SNS notifications.

On the right pane of the webpage, you can review the processing steps performed by the Public Speaking Mentor AI Assistant solution to get your speech results.

Clean up

Complete the following steps to clean up your resources:

- Shut down your Streamlit application server process running in your environment using Ctrl+C.

- Change to the `app` directory in your repository.

- Destroy the resources created with AWS CloudFormation using the AWS CDK:

Optimize for functionality, accuracy, and cost

Let’s analyze the proposed solution architecture to identify opportunities for functionality enhancements, accuracy improvements, and cost optimization.

Starting with prompt engineering, our approach involves analyzing users’ speech based on several criteria, such as language usage, grammatical errors, filler words, and repetition of words and sentences. Individuals and organizations have the flexibility to customize the prompt by including additional analysis parameters or adjusting existing ones to align with their requirements and company policies. Furthermore, you can set the inference parameters to control the response from the LLM deployed on Amazon Bedrock.
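As a rough illustration of adjusting inference parameters, the sketch below layers overrides onto an existing Claude request body. The helper name and the validation rules are assumptions for this example. The parameter names (`max_tokens`, `temperature`, `top_p`, `top_k`, `stop_sequences`) are those accepted by Anthropic Claude models on Amazon Bedrock.

```python
ALLOWED_PARAMS = {"max_tokens", "temperature", "top_p", "top_k", "stop_sequences"}


def with_inference_params(body, **params):
    """Return a copy of a Claude request body with inference parameters applied."""
    unknown = set(params) - ALLOWED_PARAMS
    if unknown:
        raise ValueError(f"Unsupported inference parameters: {sorted(unknown)}")
    temperature = params.get("temperature")
    if temperature is not None and not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")
    # Build a new dictionary so the caller's original body is left unchanged.
    return {**body, **params}
```

For example, a lower `temperature` tends to make feedback more consistent between runs, while a higher `max_tokens` leaves room for rewriting a longer speech.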

To create a lean architecture, we have primarily chosen serverless technologies, such as Amazon Bedrock for prompt engineering and natural language generation, Amazon Transcribe for speech-to-text conversion, Amazon S3 for storage, Step Functions for orchestration, EventBridge for scalable event handling to process audio files, and Amazon SNS for email notifications. Serverless technologies enable you to run the solution without provisioning or managing servers, allowing for automatic scaling and pay-per-use billing, which can lead to cost savings and increased agility.

For the web portal component, we are currently deploying the Streamlit application in a local desktop environment. Alternatively, you have the option to use Amazon S3 Website Hosting, which would further contribute to a serverless architecture.

To enhance the accuracy of speech-to-text conversion, it’s recommended to record your presentation audio in a quiet environment, away from noise and distractions.

In cases where your media contains domain-specific or non-standard terms, such as brand names, acronyms, and technical words, Amazon Transcribe might not accurately capture these terms in your transcription output. To address transcription inaccuracies and customize your output for your specific use case, you can create custom vocabularies and custom language models.
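A custom vocabulary could be wired in roughly as follows. This sketch only builds the request parameters. The helper names and the example terms are hypothetical, and the sample solution does not ship with a custom vocabulary.

```python
def vocabulary_request(name, terms, language_code="en-US"):
    """Build parameters for the Amazon Transcribe CreateVocabulary API."""
    # Drop blank entries and duplicates, and sort for a stable request.
    phrases = sorted({term.strip() for term in terms if term.strip()})
    return {"VocabularyName": name, "LanguageCode": language_code, "Phrases": phrases}


def with_vocabulary(job_params, vocabulary_name):
    """Return a copy of StartTranscriptionJob parameters that references the vocabulary."""
    settings = {**job_params.get("Settings", {}), "VocabularyName": vocabulary_name}
    return {**job_params, "Settings": settings}
```

With boto3, the first dictionary would go to `create_vocabulary(**request)` on the `transcribe` client. The vocabulary has to finish processing, and its language has to match the job's `LanguageCode`, before a transcription job can reference it.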

At the time of writing, our solution analyzes only the audio component. Uploading audio files alone can optimize storage costs. You may consider converting your video files into audio using third-party tools prior to uploading them to the Public Speaking Mentor AI Assistant web portal.
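One possible way to do the conversion is with the open source `ffmpeg` tool, which the post does not name; it is used here only as an example of a third-party tool and is assumed to be installed and on your `PATH`. The function names and the `.mp3` output format are likewise assumptions.

```python
import subprocess
from pathlib import Path


def extract_audio_command(video_path, audio_suffix=".mp3"):
    """Build an ffmpeg command that writes only the audio track next to the video."""
    source = Path(video_path)
    target = source.with_suffix(audio_suffix)
    # -vn tells ffmpeg to skip the video stream, so only audio is written.
    return ["ffmpeg", "-i", str(source), "-vn", str(target)]


def extract_audio(video_path, audio_suffix=".mp3"):
    """Run ffmpeg and return the path of the audio file it produced."""
    command = extract_audio_command(video_path, audio_suffix)
    subprocess.run(command, check=True)
    return command[-1]
```

The resulting audio file can then be uploaded through the web portal in place of the original video.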

Our solution currently uses the standard tier of Amazon S3. However, you have the option to choose the S3 One Zone-IA storage class for storing files that don’t require high availability. Additionally, configuring an Amazon S3 lifecycle policy can further help reduce costs.
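As a sketch of such a lifecycle policy, the function below builds a configuration that moves objects to S3 One Zone-IA and later expires them. The rule ID, the day counts, and the function name are assumptions to adapt to your own retention needs.

```python
def lifecycle_configuration(prefix="", transition_days=30, expiration_days=365):
    """Build an S3 lifecycle configuration for uploaded recordings and transcripts."""
    # Amazon S3 requires objects to be at least 30 days old before they can
    # transition from S3 Standard to S3 One Zone-IA.
    if transition_days < 30:
        raise ValueError("transition_days must be at least 30 for One Zone-IA")
    if expiration_days <= transition_days:
        raise ValueError("expiration_days must be greater than transition_days")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-recordings",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": transition_days, "StorageClass": "ONEZONE_IA"}
                ],
                "Expiration": {"Days": expiration_days},
            }
        ]
    }
```

With boto3, the result could be applied using `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=config)` on the `s3` client.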

You can configure Amazon SNS to send speech recommendations to other destinations, such as email, webhook, and Slack. Refer to Configure Amazon SNS to send messages for alerts to other destinations for more information.

To estimate the cost of implementing the solution, you can use the AWS Pricing Calculator. For larger workloads, additional volume discounts may be available. We recommend contacting AWS pricing specialists or your account manager for more detailed pricing information.

Security best practices

Security and compliance is a shared responsibility between AWS and the customer, as outlined in the Shared Responsibility Model. We encourage you to review this model for a comprehensive understanding of the respective responsibilities. Refer to Security in Amazon Bedrock and Build generative AI applications on Amazon Bedrock to learn more about building secure, compliant, and responsible generative AI applications on Amazon Bedrock. OWASP Top 10 for LLMs outlines the most common vulnerabilities. We encourage you to enable Amazon Bedrock Guardrails to implement safeguards for your generative AI applications based on your use cases and responsible AI policies.

With AWS, you manage the privacy controls of your data, control how your data is used, who has access to it, and how it’s encrypted. Refer to Data Protection in Amazon Bedrock and Data Protection in Amazon Transcribe for more information. Similarly, we strongly recommend referring to the data protection guidelines for each AWS service used in our solution architecture. Furthermore, we advise applying the principle of least privilege when granting permissions, because this practice enhances the overall security of your implementation.
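To illustrate least privilege for the model invocation step, the sketch below builds an IAM policy document that allows `bedrock:InvokeModel` on a single foundation model only. The model ID is an assumption to verify for your Region, and the policy is not a copy of what the sample CDK code deploys.

```python
# Assumed model ID for Claude 3.5 Sonnet on Amazon Bedrock; confirm it for your Region.
DEFAULT_MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def invoke_model_policy(region, model_id=DEFAULT_MODEL_ID):
    """Build an IAM policy document limited to invoking one Bedrock foundation model."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                # Foundation model ARNs have an empty account ID field.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            }
        ],
    }
```

Scoping the resource to one model ARN, instead of `*`, means the workflow role cannot invoke other models if its credentials are misused.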

Conclusion

By harnessing the capabilities of LLMs in Amazon Bedrock, our Public Speaking Mentor AI Assistant offers a revolutionary approach to enhancing public speaking abilities. With its personalized feedback and constructive recommendations, individuals can develop effective communication skills in a supportive and non-judgmental environment.

Unlock your potential as a captivating public speaker. Embrace the power of our Public Speaking Mentor AI Assistant and embark on a transformative journey towards mastering the art of public speaking. Try out our solution today by cloning the GitHub repository and experience the difference our cutting-edge technology can make in your personal and professional growth.

About the Authors

Nehal Sangoi is a Sr. Technical Account Manager at Amazon Web Services. She provides strategic technical guidance to help independent software vendors plan and build solutions using AWS best practices. Connect with Nehal on LinkedIn.

Akshay Singhal is a Sr. Technical Account Manager at Amazon Web Services supporting Enterprise Support customers focusing on the Security ISV segment. He provides technical guidance for customers to implement AWS solutions, with expertise spanning serverless architectures and cost optimization. Outside of work, Akshay enjoys traveling, Formula 1, making short movies, and exploring new cuisines. Connect with him on LinkedIn.