This post is co-written with Less Wright and Wei Feng from Meta

Pre-training large language models (LLMs) is the first step in developing powerful AI systems that can understand and generate human-like text. By exposing models to vast amounts of diverse data, pre-training lays the groundwork for LLMs to learn general language patterns, world knowledge, and reasoning capabilities. This foundational process enables LLMs to perform a wide range of tasks without task-specific training, making them highly versatile and adaptable. Pre-training is essential for building a strong base of knowledge, which can then be refined and specialized through fine-tuning, transfer learning, or few-shot learning approaches.

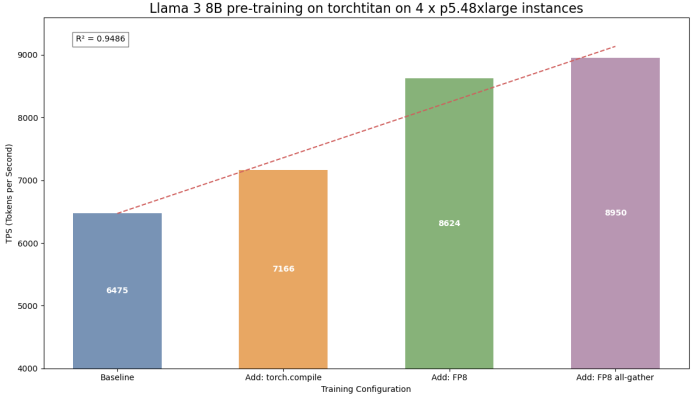

In this post, we collaborate with the team working on PyTorch at Meta to showcase how the torchtitan library accelerates and simplifies the pre-training of Meta Llama 3-like model architectures. We showcase the key features and capabilities of torchtitan, such as FSDP2, torch.compile integration, and FP8 support, that optimize training efficiency. We pre-train a Meta Llama 3 8B model architecture using torchtitan on Amazon SageMaker on p5.48xlarge instances, each equipped with 8 NVIDIA H100 GPUs. We demonstrate a 38.23% speedup in training throughput compared to the baseline without the optimizations (as shown in the following figure). Amazon SageMaker Model Training reduces the time and cost to train and tune machine learning (ML) models at scale without the need to manage infrastructure. You can take advantage of the highest-performing ML compute infrastructure currently available, and SageMaker can automatically scale infrastructure up or down, from one to thousands of GPUs.

To learn more, you can find our complete code sample on GitHub.

Introduction to torchtitan

torchtitan is a reference architecture for large-scale LLM training using native PyTorch. It aims to showcase PyTorch's latest distributed training features in a clean, minimal code base. The library is designed to be simple to understand, use, and extend for different training purposes, with minimal changes required to the model code when applying various parallel processing techniques.

torchtitan offers several key features, including FSDP2 with per-parameter sharding, tensor parallel processing, selective layer and operator activation checkpointing, and distributed checkpointing. It supports pre-training of Meta Llama 3-like and Llama 2-like model architectures of various sizes and includes configurations for multiple datasets. The library provides straightforward configuration through TOML files and offers performance monitoring through TensorBoard. In the following sections, we highlight some of the key features of torchtitan.

Transitioning from FSDP1 to FSDP2

FSDP1 and FSDP2 are two approaches to fully sharded data parallel training. FSDP1 uses flat-parameter sharding, which flattens all parameters to 1D, concatenates them into a single tensor, pads it, and then chunks it across workers. This method offers bounded padding and efficient unsharded storage, but might not always allow optimal sharding for individual parameters. FSDP2, on the other hand, represents sharded parameters as DTensors sharded on dim-0, handling each parameter individually. This approach enables easier manipulation of parameters (for example, a per-weight learning rate), communication-free sharded state dicts, and simpler meta-device initialization. The transition from FSDP1 to FSDP2 reflects a shift towards more flexible and efficient parameter handling in distributed training, addressing limitations of the flat-parameter approach while potentially introducing new optimization opportunities.
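To make the difference between the two sharding layouts concrete, the following is a minimal pure-Python sketch that only computes shard sizes. It is not how FSDP is implemented; the function names, the toy parameter shapes, and the world size of 4 are assumptions chosen for illustration.

```python
import math

def flat_param_shards(shapes, world_size):
    """FSDP1-style layout: flatten all parameters, concatenate, pad, chunk evenly."""
    total = sum(math.prod(shape) for shape in shapes.values())
    elements_per_rank = math.ceil(total / world_size)
    padding = elements_per_rank * world_size - total  # always < world_size
    return {"elements_per_rank": elements_per_rank, "padding": padding}

def per_param_shards(shapes, world_size):
    """FSDP2-style layout: split each parameter along dim 0, one shard per rank."""
    layout = {}
    for name, shape in shapes.items():
        rows = math.ceil(shape[0] / world_size)  # rows per rank; trailing ranks may get fewer
        shards = []
        for rank in range(world_size):
            start = min(rank * rows, shape[0])
            stop = min(start + rows, shape[0])
            shards.append((stop - start, *shape[1:]))
        layout[name] = shards
    return layout

# Toy shapes (hypothetical), not taken from the Llama 3 architecture.
shapes = {"wq.weight": (8, 4), "norm.weight": (6,)}
flat = flat_param_shards(shapes, world_size=4)
sharded = per_param_shards(shapes, world_size=4)

print(flat)     # one flat chunk per rank, mixing parameters
print(sharded)  # every parameter keeps its own per-rank shard shape
```

In the per-parameter layout each shard still belongs to a single named parameter, which is what makes per-weight settings and sharded state dicts easier to handle.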

torchtitan support for torch.compile

torch.compile is a key feature in PyTorch that significantly boosts model performance with minimal code changes. Through its just-in-time (JIT) compilation, it analyzes and transforms PyTorch code into more efficient kernels. torchtitan supports torch.compile, which delivers substantial speedups, especially for large models and complex architectures, by using techniques like operator fusion, memory planning, and automatic kernel selection. This is enabled by setting compile = true in the model's TOML configuration file.

torchtitan support for FP8 linear operations

torchtitan provides support for FP8 (8-bit floating point) computation that significantly reduces memory footprint and enhances performance in LLM training. FP8 has two formats, E4M3 and E5M2, each optimized for different aspects of training. E4M3 offers higher precision, making it ideal for forward propagation, while E5M2, with its larger dynamic range, is better suited for backpropagation. Although FP8 operates at a lower precision, it had no significant impact on model accuracy in our runs, which we demonstrate by convergence comparisons of the Meta Llama 3 8B pre-training at 2,000 steps. FP8 support in torchtitan is provided through the torchao library, and we enable FP8 by setting enable_float8_linear = true in the model's TOML configuration file.
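The precision versus range trade-off between the two formats can be worked out from their bit layouts. The sketch below assumes the commonly used encodings: E4M3 in its finite-only "fn" variant (exponent bias 7, with only the all-ones bit pattern reserved for NaN) and E5M2 with IEEE-style conventions (exponent bias 15, top exponent reserved for infinity and NaN). The helper name is hypothetical.

```python
def fp8_format_stats(man_bits, bias, max_exp_field, max_man_field):
    """Derive the range and precision of a small floating-point format."""
    return {
        # Largest finite value: top usable exponent with top usable mantissa.
        "max_value": 2.0 ** (max_exp_field - bias) * (1 + max_man_field / 2 ** man_bits),
        # Smallest positive normal value (exponent field equal to 1).
        "min_normal": 2.0 ** (1 - bias),
        # Spacing between 1.0 and the next representable value.
        "epsilon": 2.0 ** -man_bits,
    }

# E4M3 (fn variant, assumed): exponent field 15 is still finite; mantissa 0b111 there is NaN.
E4M3 = fp8_format_stats(man_bits=3, bias=7, max_exp_field=15, max_man_field=6)
# E5M2 (IEEE-style, assumed): exponent field 31 is reserved for inf/NaN.
E5M2 = fp8_format_stats(man_bits=2, bias=15, max_exp_field=30, max_man_field=3)

print("E4M3", E4M3)  # finer steps, smaller range
print("E5M2", E5M2)  # coarser steps, larger range
```

E4M3 has half the relative step size of E5M2, while E5M2 covers a much wider range of magnitudes, which is consistent with using E4M3 for activations and weights and E5M2 for gradients.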

torchtitan support for FP8 all-gather

This feature enables efficient communication of FP8 tensors across multiple GPUs, significantly reducing network bandwidth compared to bfloat16 all-gather operations. FP8 all-gather performs float8 casting before the all-gather operation, reducing the message size. Key to its efficiency is the combined absolute maximum (AMAX) AllReduce, which calculates AMAX for all float8 parameters in a single operation after the optimizer step, avoiding multiple small all-reduces. Similar to FP8 support, this also had no significant impact on model accuracy, which we demonstrate by convergence comparisons of the Meta Llama 3 8B pre-training.
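The two ideas in this section, smaller messages and scales derived from AMAX values, come down to simple arithmetic. The sketch below is illustrative only: the round figure of 8 billion parameters, the parameter names, the AMAX values, and the helper functions are assumptions, and it treats every parameter as if it were cast to float8, which gives an upper bound on the savings.

```python
FP8_E4M3_MAX = 448.0  # largest finite value of the E4M3 format (assumed target format)

def all_gather_bytes(num_params, bytes_per_element):
    """Total payload if every parameter is gathered once at the given width."""
    return num_params * bytes_per_element

def dynamic_scales(amax_per_param, fp8_max=FP8_E4M3_MAX):
    """Per-tensor scale so that each tensor's AMAX maps onto the largest FP8 value."""
    return {name: fp8_max / max(amax, 1e-12) for name, amax in amax_per_param.items()}

num_params = 8_000_000_000                       # round figure for an 8B model
bf16_bytes = all_gather_bytes(num_params, 2)     # bfloat16: 2 bytes per element
fp8_bytes = all_gather_bytes(num_params, 1)      # float8: 1 byte per element

# Gathering all AMAX values before computing scales mirrors the idea of one
# combined all-reduce instead of one small all-reduce per parameter.
amaxes = {"layers.0.wq": 0.5, "layers.0.wk": 2.0}  # hypothetical values
scales = dynamic_scales(amaxes)

print(bf16_bytes / fp8_bytes)  # ratio of message sizes
print(scales)
```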

Pre-training Meta Llama 3 8B with torchtitan on Amazon SageMaker

SageMaker training jobs offer several key advantages that enhance the pre-training process of Meta Llama 3-like model architectures with torchtitan. They provide a fully managed environment that simplifies large-scale distributed training across multiple instances, which is crucial for efficiently pre-training LLMs. SageMaker supports custom containers, which allows seamless integration of the torchtitan library and its dependencies, so all necessary components are readily available.

The built-in distributed training capabilities of SageMaker streamline the setup of multi-GPU and multi-node jobs, reducing the complexity typically associated with such configurations. Additionally, SageMaker integrates with TensorBoard, enabling real-time monitoring and visualization of training metrics and providing valuable insights into the pre-training process. With these features, researchers and practitioners can focus more on model development and optimization rather than infrastructure management, ultimately accelerating the iterative process of creating and refining custom LLMs.

Solution overview

In the following sections, we walk you through how to prepare a custom image with the torchtitan library, then configure a training job estimator function to launch a Meta Llama 3 8B model pre-training with the c4 dataset (Colossal Clean Crawled Corpus) on SageMaker. The c4 dataset is a large-scale web text corpus that has been cleaned and filtered to remove low-quality content. It is frequently used for pre-training language models.

Prerequisites

Before you begin, make sure you have the following requirements in place:

Build the torchtitan custom image

SageMaker BYOC (Bring Your Own Container) allows you to use custom Docker containers to train and deploy ML models. Typically, SageMaker provides built-in algorithms and preconfigured environments for popular ML frameworks. However, there may be cases where you have unique or proprietary algorithms, dependencies, or specific requirements that aren't available in the built-in options, necessitating custom containers. In this case, we need to use the nightly versions of torch, torchdata, and the torchao package to train with FP8 precision.

We use the Amazon SageMaker Studio Image Build convenience package, which offers a command line interface (CLI) to simplify the process of building custom container images directly from SageMaker Studio notebooks. This tool eliminates the need for manual setup of Docker build environments, streamlining the workflow for data scientists and developers. The CLI automatically manages the underlying AWS services required for image building, such as Amazon Simple Storage Service (Amazon S3), AWS CodeBuild, and Amazon Elastic Container Registry (Amazon ECR), allowing you to focus on your ML tasks rather than infrastructure setup. It offers a simple command interface, handles packaging of Dockerfiles and container code, and provides the resulting image URI for use in SageMaker training and hosting.

Before getting started, make sure your AWS Identity and Access Management (IAM) execution role has the required IAM permissions and policies to use the Image Build CLI. For more information, see Using the Amazon SageMaker Studio Image Build CLI to build container images from your Studio notebooks. We have provided the Jupyter notebook to build the custom container in the GitHub repo.

Complete the following steps to build the custom image:

- Install the Image Build package with the following command:

- Extend a pre-built image so that you can use its included deep learning libraries and settings without having to create an image from scratch:

- Next, specify the libraries to install. You need the nightly versions of torch, torchdata, and the torchao libraries:

- Use the Image Build CLI to build and push the image to Amazon ECR:

!sm-docker build --repository torchtitan:latest .

You're now ready to use this image for pre-training models with torchtitan in SageMaker.
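As a rough illustration of the steps above, the following sketch renders a Dockerfile that extends a base image and installs the nightly packages. It is not the Dockerfile from the accompanying notebook: the base image URI is a placeholder you must replace, the nightly index URL and CUDA variant are assumptions, and the helper name is hypothetical. The Image Build CLI itself is distributed as the sagemaker-studio-image-build package on PyPI.

```python
# Hypothetical placeholder: replace with the URI of the pre-built image you extend.
BASE_IMAGE = "<base-image-uri>"
# Assumption: nightly wheels come from the PyTorch nightly index for CUDA 12.1.
NIGHTLY_INDEX = "https://download.pytorch.org/whl/nightly/cu121"
# Package names are taken from the step list above.
NIGHTLY_PACKAGES = ["torch", "torchdata", "torchao"]

def render_dockerfile(base_image, packages, index_url):
    """Return Dockerfile text that extends base_image with pre-release packages."""
    return "\n".join([
        f"FROM {base_image}",
        # --pre lets pip select pre-release (nightly) builds.
        f"RUN pip install --pre {' '.join(packages)} --index-url {index_url}",
        "",
    ])

dockerfile_text = render_dockerfile(BASE_IMAGE, NIGHTLY_PACKAGES, NIGHTLY_INDEX)
print(dockerfile_text)
```

Saving this text as a file named Dockerfile in the working directory gives the build command a context to package and push.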

Prepare your dataset (optional)

By default, the torchtitan library uses the allenai/c4 "en" dataset in its training configuration. This is streamed directly during training using the HuggingFaceDataset class. However, you may want to pre-train the Meta Llama 3 models on your own dataset residing in Amazon S3. For this purpose, we have prepared a sample Jupyter notebook to download the allenai/c4 "en" dataset from the Hugging Face dataset hub to an S3 bucket. We use the SageMaker InputDataConfiguration to load the dataset to our training instances in a later section. You can download the dataset with a SageMaker processing job available in the sample Jupyter notebook.

Launch your training with torchtitan

Complete the following steps to launch your training:

- Import the necessary SageMaker modules and retrieve your work environment details, such as AWS account ID and AWS Region. Make sure to upgrade the SageMaker SDK to the latest version. This might require a SageMaker Studio kernel restart.

- Clone the torchtitan repository and prepare the training environment. Create a source directory and move the necessary dependencies from the torchtitan directory. This step makes sure you have all the required files for your training process.

- Use the following command to download the Meta Llama 3 tokenizer, which is essential for preprocessing your dataset. Provide your Hugging Face token.

One of the key advantages of torchtitan is its straightforward configuration through TOML files. We modify the Meta Llama-3-8b TOML configuration file to enable monitoring and optimization features.

- Enable TensorBoard profiling for better insights into the training process:

- Enable torch.compile for improved performance:

- Enable FP8 for more efficient computations:

- Activate FP8 all-gather for optimized distributed training:

- To monitor the training progress, set up TensorBoard output. This allows you to visualize the training metrics in real time, providing valuable insights into how the model is learning.

- Set up the data channels for SageMaker training. Create TrainingInput objects that point to the preprocessed dataset in Amazon S3, so your model has access to the training data it needs.

- Initiate the model training on SageMaker:

estimator.fit(inputs=data_channels)
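The configuration and launch steps above can be sketched as follows. This is a hedged outline, not the code from the accompanying notebook. The four flag names come from this post, but the TOML section name ([training]) is an assumption that depends on your torchtitan version. The entry point, source directory, image URI, and role values are hypothetical placeholders, and the torch_distributed launcher setting is an assumption about how the job is started. The SageMaker SDK is imported lazily so the pure-Python helpers can be inspected without AWS credentials.

```python
def render_toml_overrides(sections):
    """Render {section: {key: value}} as TOML text (booleans and strings only)."""
    lines = []
    for section, values in sections.items():
        lines.append(f"[{section}]")
        for key, value in values.items():
            if isinstance(value, bool):
                rendered = "true" if value else "false"
            else:
                rendered = f'"{value}"'
            lines.append(f"{key} = {rendered}")
        lines.append("")
    return "\n".join(lines)

# Flag names are from this post; the section name is an assumption.
overrides = {
    "training": {
        "compile": True,
        "enable_float8_linear": True,
        "enable_fsdp_float8_all_gather": True,
        "precompute_float8_dynamic_scale_for_fsdp": True,
    }
}
toml_text = render_toml_overrides(overrides)

def estimator_kwargs(image_uri, role, source_dir, entry_point):
    """Collect estimator arguments for 4 x p5.48xlarge (32 GPUs), as in this post."""
    return {
        "image_uri": image_uri,      # custom torchtitan image in Amazon ECR
        "role": role,                # IAM execution role ARN
        "source_dir": source_dir,    # directory prepared from the torchtitan clone
        "entry_point": entry_point,  # hypothetical training script name
        "instance_count": 4,
        "instance_type": "ml.p5.48xlarge",
        "distribution": {"torch_distributed": {"enabled": True}},  # assumption
    }

def launch(kwargs, data_channels):
    """Create the estimator and start training; needs the SageMaker SDK and AWS access."""
    from sagemaker.pytorch import PyTorch
    estimator = PyTorch(**kwargs)
    estimator.fit(inputs=data_channels)
    return estimator

kwargs = estimator_kwargs("<image-uri>", "<role-arn>", "<source-dir>", "<entry-point>.py")
print(toml_text)
```

Calling launch(kwargs, data_channels) with real values would then start the job, where data_channels holds the TrainingInput objects described in the steps above.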

Performance numbers

The following table summarizes the performance numbers for the various training runs with different optimizations.

| Setup | Configuration | TOML Configuration | Throughput (Tokens per Second) | Speedup Over Baseline |
|---|---|---|---|---|
| Llama 3 8B pre-training on 4 x p5.48xlarge instances (32 NVIDIA H100 GPUs) | Baseline | Default configuration | 6475 | – |
| | torch.compile | compile = true | 7166 | 10.67% |
| | FP8 linear | compile = true; enable_float8_linear = true | 8624 | 33.19% |
| | FP8 all-gather | compile = true; enable_float8_linear = true; enable_fsdp_float8_all_gather = true; precompute_float8_dynamic_scale_for_fsdp = true | 8950 | 38.23% |

The performance results show clear optimization progress in Meta Llama 3 8B pre-training. torch.compile() delivered a 10.67% speedup, and FP8 linear operations roughly tripled this to 33.19%. Adding FP8 all-gather further increased the speedup to 38.23% over the baseline. This progression demonstrates how combining optimization strategies significantly enhances training efficiency.
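The speedup column follows directly from the throughput column. The following short sketch recomputes it from the reported tokens-per-second figures; the function name is hypothetical, and because the reported throughputs are themselves rounded, the recomputed percentages should only be expected to agree with the table to about one decimal place.

```python
# Throughput values (tokens per second) as reported in the table above.
throughput = {
    "Baseline": 6475,
    "torch.compile": 7166,
    "FP8 linear": 8624,
    "FP8 all-gather": 8950,
}

def speedup_percent(tokens_per_second, baseline):
    """Relative throughput gain over the baseline, in percent."""
    return (tokens_per_second / baseline - 1.0) * 100.0

baseline = throughput["Baseline"]
speedups = {name: speedup_percent(tps, baseline) for name, tps in throughput.items()}

for name, pct in speedups.items():
    print(f"{name}: {pct:.1f}%")
```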

The following figure illustrates the stepwise performance gains for Meta Llama 3 8B pre-training on torchtitan with the optimizations.

These optimizations didn't affect the model's training quality. The loss curves for all optimization levels, including the baseline, torch.compile(), FP8 linear, and FP8 all-gather configurations, remained consistent throughout the training process, as shown in the following figure.

The following table shows the consistent loss values across the different configurations.

| Configuration | Loss After 2,000 Steps |
|---|---|
| Baseline | 3.602 |
| Plus torch.compile | 3.601 |
| Plus FP8 | 3.612 |
| Plus FP8 all-gather | 3.607 |

Clean up

After you complete your training experiments, clean up your resources to avoid unnecessary charges. You can start by deleting any unused SageMaker Studio resources. Next, remove the custom container image from Amazon ECR by deleting the repository you created. If you ran the optional step to use your own dataset, delete the S3 bucket where this data was stored.

Conclusion

In this post, we demonstrated how to efficiently pre-train Meta Llama 3 models using the torchtitan library on SageMaker. With torchtitan's advanced optimizations, including torch.compile, FP8 linear operations, and FP8 all-gather, we achieved a 38.23% acceleration in Meta Llama 3 8B pre-training without compromising the model's accuracy.

SageMaker simplified the large-scale training by offering seamless integration with custom containers, effortless scaling across multiple instances, built-in support for distributed training, and integration with TensorBoard for real-time monitoring.

Pre-training is a crucial step in developing powerful and adaptable LLMs that can effectively tackle a wide range of tasks and applications. By combining the latest PyTorch distributed training features in torchtitan with the scalability and flexibility of SageMaker, organizations can use their proprietary data and domain expertise to create robust and high-performance AI models. Get started by visiting the GitHub repository for the complete code example and optimize your LLM pre-training workflow.

Special thanks

Special thanks to Gokul Nadathur (Engineering Manager at Meta), Gal Oshri (Principal Product Manager Technical at AWS), and Janosch Woschitz (Sr. ML Solution Architect at AWS) for their support with the launch of this post.

About the Authors

Roy Allela is a Senior AI/ML Specialist Solutions Architect at AWS. He helps AWS customers, from small startups to large enterprises, train and deploy foundation models efficiently on AWS. He is passionate about computational optimization problems and improving the performance of AI workloads.

Kanwaljit Khurmi is a Principal Solutions Architect at Amazon Web Services. He works with AWS customers to provide guidance and technical assistance, helping them improve the value of their solutions when using AWS. Kanwaljit specializes in helping customers with containerized and machine learning applications.

Trevor Harvey is a Principal Specialist in Generative AI at Amazon Web Services (AWS) and an AWS Certified Solutions Architect – Professional. He serves as a voting member of the PyTorch Foundation Governing Board, where he contributes to the strategic advancement of open-source deep learning frameworks. At AWS, Trevor works with customers to design and implement machine learning solutions and leads go-to-market strategies for generative AI services.

Less Wright is an AI/Partner Engineer in PyTorch. He works on Triton/CUDA kernels (Accelerating Dequant with SplitK work decomposition); paged, streaming, and quantized optimizers; and PyTorch Distributed (PyTorch FSDP).

Wei Feng is a Software Engineer on the PyTorch distributed team. He has worked on float8 all-gather for FSDP2, TP (Tensor Parallel) in TorchTitan, and 4-bit quantization for distributed QLoRA in TorchTune. He is also a core maintainer of FSDP2.
