This post is co-written with Dean Metal and Simon Gatie from Aviva.

With a presence in 16 countries and serving over 33 million customers, Aviva is a leading insurance company headquartered in London, UK. With a history dating back to 1696, Aviva is one of the oldest and most established financial services organizations in the world. Aviva’s mission is to help people protect what matters most to them, be it their health, home, family, or financial future. To achieve this effectively, Aviva harnesses the power of machine learning (ML) across more than 70 use cases. Previously, ML models at Aviva were developed using a graphical UI-driven tool and deployed manually. This approach led to data scientists spending more than 50% of their time on operational tasks, leaving little room for innovation, and posed challenges in monitoring model performance in production.

In this post, we describe how Aviva built a fully serverless MLOps platform based on the AWS Enterprise MLOps Framework and Amazon SageMaker to integrate DevOps best practices into the ML lifecycle. This solution establishes MLOps practices to standardize model development, streamline ML model deployment, and provide consistent monitoring. We illustrate the entire setup of the MLOps platform using a real-world use case that Aviva has adopted as its first ML use case.

The challenge: Deploying and operating ML models at scale

Approximately 47% of ML projects never reach production, according to Gartner. Despite the advancements in open source data science frameworks and cloud services, deploying and operating these models remains a significant challenge for organizations. This struggle highlights the importance of establishing consistent processes, integrating effective monitoring, and investing in the necessary technical and cultural foundations for a successful MLOps implementation.

For companies like Aviva, which handles approximately 400,000 insurance claims annually, with expenditures of about £3 billion in settlements, the pressure to deliver a seamless digital experience to customers is immense. To meet this demand amidst rising claim volumes, Aviva recognizes the need for increased automation through AI technology. Therefore, developing and deploying more ML models is crucial to support its growing workload.

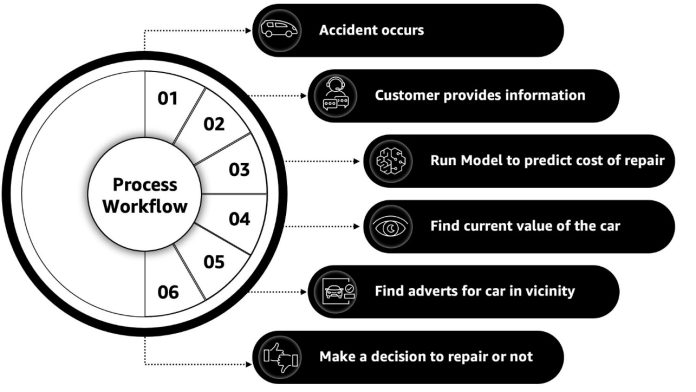

To prove the platform can handle onboarding and industrialization of ML models, Aviva picked their Treatment use case as their first project. This use case concerns a claim management system that employs a data-driven approach to determine whether submitted car insurance claims qualify as either total loss or repair cases, as illustrated in the following diagram.

The workflow consists of the following steps:

- The workflow begins when a customer experiences a car accident.

- The customer contacts Aviva, providing information about the incident and details about the damage.

- To determine the estimated cost of repair, 14 ML models and a set of business rules are used to process the request.

- The estimated cost is compared with the car’s current market value from external data sources.

- Information related to similar cars for sale nearby is included in the analysis.

- Based on the processed data, a recommendation is made by the model to either repair or write off the car. This recommendation, along with the supporting data, is provided to the claims handler, and the pipeline reaches its final state.

The successful deployment and evaluation of the Treatment use case on the MLOps platform was intended to serve as a blueprint for future use cases, providing maximum efficiency by using templated solutions.

Solution overview of the MLOps platform

To handle the complexity of operationalizing ML models at scale, AWS provides an MLOps offering called AWS Enterprise MLOps Framework, which can be used for a wide variety of use cases. The offering encapsulates a best practices approach to build and manage MLOps platforms based on the consolidated knowledge gained from a multitude of customer engagements carried out by AWS Professional Services in the last 5 years. The proposed baseline architecture can be logically divided into four building blocks, which are sequentially deployed into the provided AWS accounts, as illustrated in the following diagram.

The building blocks are as follows:

- Networking – A virtual private cloud (VPC), subnets, security groups, and VPC endpoints are deployed across all accounts.

- Amazon SageMaker Studio – SageMaker Studio offers a fully integrated development environment (IDE) for ML, acting as a data science workbench and control panel for all ML workloads.

- Amazon SageMaker Projects templates – These ready-made infrastructure sets cover the ML lifecycle, including continuous integration and delivery (CI/CD) pipelines and seed code. You can launch these from SageMaker Studio with a few clicks, either choosing from preexisting templates or creating custom ones.

- Seed code – This refers to the data science code tailored for a specific use case, divided between two repositories: training (covering processing, training, and model registration) and inference (related to SageMaker endpoints). The majority of time in developing a use case should be devoted to modifying this code.

The framework implements the infrastructure deployment from a primary governance account to separate development, staging, and production accounts. Developers can use the AWS Cloud Development Kit (AWS CDK) to customize the solution to align with the company’s specific account setup. In adapting the AWS Enterprise MLOps Framework to a three-account structure, Aviva has designated accounts as follows: development, staging, and production. This structure is depicted in the following architecture diagram. The governance components, which facilitate model promotions with consistent processes across accounts, have been integrated into the development account.

Building reusable ML pipelines

The processing, training, and inference code for the Treatment use case was developed by Aviva’s data science team in SageMaker Studio, a cloud-based environment designed for collaborative work and rapid experimentation. When experimentation is complete, the resulting seed code is pushed to an AWS CodeCommit repository, initiating the CI/CD pipeline for the construction of a SageMaker pipeline. This pipeline comprises a series of interconnected steps for data processing, model training, parameter tuning, model evaluation, and the registration of the generated models in the Amazon SageMaker Model Registry.

Amazon SageMaker Automatic Model Tuning enabled Aviva to use advanced tuning strategies and overcome the complexities associated with implementing parallelism and distributed computing. The initial step involved a hyperparameter tuning process (Bayesian optimization), during which approximately 100 model variations were trained (5 steps with 20 models trained concurrently in each step). This feature integrates with Amazon SageMaker Experiments to provide data scientists with insights into the tuning process. The optimal model is then evaluated in terms of accuracy, and if it exceeds a use case-specific threshold, it’s registered in the SageMaker Model Registry. A custom approval step was built, such that only Aviva’s lead data scientist can permit the deployment of a model through a CI/CD pipeline to a SageMaker real-time inference endpoint in the development environment for further testing and subsequent promotion to the staging and production environments.
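The tuning budget and the registration gate described above can be sketched in plain Python. This is an illustrative sketch, not Aviva’s pipeline code: the names `TuningBudget` and `should_register` and the 0.85 threshold are assumptions, while the job counts (100 in total, 20 in parallel) come from the text. In the SageMaker Python SDK, these two counts correspond to the `max_jobs` and `max_parallel_jobs` arguments of `HyperparameterTuner`.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TuningBudget:
    """Hypothetical container for the tuning job counts."""
    max_jobs: int           # total training jobs in the tuning run
    max_parallel_jobs: int  # jobs trained concurrently in each step

    def sequential_steps(self) -> int:
        """Number of sequential batches needed to use the whole budget."""
        return math.ceil(self.max_jobs / self.max_parallel_jobs)


def should_register(accuracy: float, threshold: float) -> bool:
    """Registration gate: only a model that exceeds the threshold passes."""
    return accuracy > threshold


budget = TuningBudget(max_jobs=100, max_parallel_jobs=20)
ACCURACY_THRESHOLD = 0.85  # assumed value; the real threshold is use case-specific

print(budget.sequential_steps())
print(should_register(0.91, ACCURACY_THRESHOLD))
```

Keeping the gate as a strict comparison mirrors the wording "exceeds a threshold"; a model that only matches the threshold would not be registered in this sketch.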

Serverless workflow for orchestrating ML model inference

To realize the actual business value of Aviva’s ML model, it was necessary to integrate the inference logic with Aviva’s internal business systems. The inference workflow is responsible for combining the model predictions, external data, and business logic to generate a recommendation for claims handlers. The recommendation is based on three possible outcomes:

- Write off a vehicle (expected repair cost exceeds the value of the vehicle)

- Seek a repair (value of the vehicle exceeds repair cost)

- Require further investigation given a borderline estimation of the cost of damage and the price of a replacement vehicle
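The three outcomes can be expressed as a small decision function. The following is a minimal sketch under stated assumptions, not Aviva’s business logic: the function name `recommend`, the `Recommendation` enum, and the 10% `borderline_margin` are all hypothetical, chosen only to show how a borderline band might separate the clear cases from those needing investigation.

```python
from enum import Enum


class Recommendation(Enum):
    WRITE_OFF = "write_off"
    REPAIR = "repair"
    INVESTIGATE = "investigate"


def recommend(repair_cost: float, vehicle_value: float,
              borderline_margin: float = 0.1) -> Recommendation:
    """Compare the estimated repair cost with the vehicle value.

    Cases where the two figures differ by no more than
    `borderline_margin` (a fraction of the vehicle value, assumed here)
    are routed to further investigation.
    """
    if vehicle_value <= 0:
        raise ValueError("vehicle_value must be positive")
    if abs(repair_cost - vehicle_value) <= borderline_margin * vehicle_value:
        return Recommendation.INVESTIGATE
    if repair_cost > vehicle_value:
        return Recommendation.WRITE_OFF
    return Recommendation.REPAIR


print(recommend(9000, 5000))
print(recommend(1000, 5000))
print(recommend(5200, 5000))
```

Checking the borderline band first means a claim is only auto-classified when the gap between cost and value is large enough to be unambiguous.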

The following diagram illustrates the workflow.

The workflow begins with a request to an API endpoint hosted on Amazon API Gateway originating from a claims management system, which invokes an AWS Step Functions workflow that uses AWS Lambda to complete the following steps:

- The input data of the REST API request is transformed into encoded features, which are used by the ML model.

- ML model predictions are generated by feeding the input to the SageMaker real-time inference endpoints. Because Aviva processes daily claims at irregular intervals, real-time inference endpoints help overcome the challenge of providing predictions consistently at low latency.

- ML model predictions are further processed by custom business logic to derive a final decision (of the three aforementioned options).

- The final decision, along with the generated data, is consolidated and transmitted back to the claims management system as a REST API response.
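The four steps above map naturally onto a linear Step Functions state machine of Lambda tasks. The sketch below builds such a definition in Amazon States Language as a Python dictionary. It is an illustration only: the state names, the Region, the account ID, and the Lambda function names are placeholders, not Aviva’s actual resources.

```python
import json

# Placeholder ARN prefix: Region, account ID, and function names are hypothetical.
LAMBDA_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:"

definition = {
    "Comment": "Illustrative inference workflow (hypothetical names)",
    "StartAt": "EncodeFeatures",
    "States": {
        "EncodeFeatures": {
            "Type": "Task",
            "Resource": LAMBDA_ARN + "encode-features",
            "Next": "InvokeModels",
        },
        "InvokeModels": {
            "Type": "Task",
            "Resource": LAMBDA_ARN + "invoke-models",
            "Next": "ApplyBusinessLogic",
        },
        "ApplyBusinessLogic": {
            "Type": "Task",
            "Resource": LAMBDA_ARN + "apply-business-logic",
            "Next": "BuildResponse",
        },
        "BuildResponse": {
            "Type": "Task",
            "Resource": LAMBDA_ARN + "build-response",
            "End": True,
        },
    },
}


def execution_order(state_machine: dict) -> list:
    """Follow `Next` pointers from `StartAt` until a state marked `End`."""
    order, name = [], state_machine["StartAt"]
    while True:
        order.append(name)
        state = state_machine["States"][name]
        if state.get("End"):
            return order
        name = state["Next"]


print(execution_order(definition))
asl_json = json.dumps(definition, indent=2)  # JSON form of the definition
```

Walking the `Next` pointers locally is a cheap way to confirm that the definition is a single unbroken chain before it is deployed.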

Monitor ML model decisions to elevate confidence among users

The ability to obtain real-time access to detailed data for each state machine run and task is critically important for effective oversight and enhancement of the system. This includes providing claim handlers with comprehensive details behind decision summaries, such as model outputs, external API calls, and applied business logic, to make sure recommendations are based on accurate and complete information. Snowflake is the preferred data platform, and it receives data from Step Functions state machine runs through Amazon CloudWatch logs. A series of filters screen for data pertinent to the business. This data is then transmitted to an Amazon Data Firehose delivery stream and subsequently relayed to an Amazon Simple Storage Service (Amazon S3) bucket, which is accessed by Snowflake. The data generated by all runs is used by Aviva business analysts to create dashboards and management reports, facilitating insights such as monthly views of total losses by region or average repair costs by vehicle manufacturer and model.
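The filtering stage can be pictured as a function that keeps only the log events relevant to the business before they are forwarded downstream. The sketch below is a simplified local stand-in, not the filters Aviva uses: the `type` and `details` field names, the chosen event types, and the sample messages are assumptions made for illustration.

```python
import json
from typing import Iterable, Iterator

# Hypothetical set of event types treated as business-relevant.
BUSINESS_EVENT_TYPES = frozenset({"TaskSucceeded", "ExecutionSucceeded"})


def select_business_events(messages: Iterable[str],
                           wanted: frozenset = BUSINESS_EVENT_TYPES) -> Iterator[dict]:
    """Yield parsed log messages whose `type` field is in `wanted`.

    Messages that are not valid JSON objects are skipped instead of raising.
    """
    for raw in messages:
        try:
            event = json.loads(raw)
        except json.JSONDecodeError:
            continue
        if isinstance(event, dict) and event.get("type") in wanted:
            yield event


# Made-up sample messages in an assumed log format.
sample = [
    '{"type": "TaskStateEntered", "details": {"name": "EncodeFeatures"}}',
    '{"type": "TaskSucceeded", "details": {"decision": "repair"}}',
    'not json',
    '{"type": "ExecutionSucceeded", "details": {}}',
]
kept = list(select_business_events(sample))
print([event["type"] for event in kept])
```

Dropping malformed lines quietly keeps one bad log entry from blocking the rest of the batch; a production filter would more likely count or route such lines for inspection.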

Security

The described solution processes personally identifiable information (PII), making customer data protection the core security focus of the solution. The customer data is protected by employing networking restrictions, because processing is run inside the VPC, where data is logically separated in transit. The data is encrypted in transit between steps of the processing and encrypted at rest using AWS Key Management Service (AWS KMS). Access to the production customer data is restricted on a need-to-know basis, where only the authorized parties are allowed to access the production environment where this data resides.

The second security focus of the solution is protecting Aviva’s intellectual property. The code the data scientists and engineers are working on is stored securely in the dev AWS account, private to Aviva, in the CodeCommit git repositories. The training data and the artifacts of the trained models are stored securely in the S3 buckets in the dev account, protected by AWS KMS encryption at rest, with AWS Identity and Access Management (IAM) policies restricting access to the buckets to only the authorized SageMaker endpoints. The code pipelines are private to the account as well, and reside in the customer’s AWS environment.

The auditability of the workflows is provided by logging the steps of inference and decision-making in the CloudWatch logs. The logs are encrypted at rest as well with AWS KMS, and are configured with a lifecycle policy, guaranteeing availability of audit information for the required compliance period. To maintain security of the project and operate it securely, the accounts are enabled with Amazon GuardDuty and AWS Config. AWS CloudTrail is used to monitor the activity within the accounts. The software to monitor for security vulnerabilities resides primarily in the Lambda functions implementing the business workflows. The processing code is primarily written in Python using libraries that are periodically updated.

Conclusion

This post provided an overview of the partnership between Aviva and AWS, which resulted in the construction of a scalable MLOps platform. This platform was developed using the open source AWS Enterprise MLOps Framework, which integrated DevOps best practices into the ML lifecycle. Aviva is now capable of replicating consistent processes and deploying hundreds of ML use cases in weeks rather than months. Furthermore, Aviva has transitioned entirely to a pay-as-you-go model, resulting in a 90% reduction in infrastructure costs compared to the company’s previous on-premises ML platform solution.

Explore the AWS Enterprise MLOps Framework on GitHub and learn more about MLOps on Amazon SageMaker to see how it can accelerate your organization’s MLOps journey.

About the Authors

Dean Metal is a Senior MLOps Engineer at Aviva with a background in Data Science and actuarial work. He’s passionate about all forms of AI/ML, with experience developing and deploying a diverse range of models for insurance-specific applications, from large transformers through to linear models. With an engineering focus, Dean is a strong advocate of combining AI/ML with DevSecOps in the cloud using AWS. In his spare time, Dean enjoys exploring music technology, restaurants, and film.

Simon Gatie, Principal Analytics Area Authority at Aviva in Norwich, brings a diverse background in Physics, Accountancy, IT, and Data Science to his role. He leads Machine Learning projects at Aviva, driving innovation in data science and advanced technologies for financial services.

Gabriel Rodriguez is a Machine Learning Engineer at AWS Professional Services in Zurich. In his current role, he has helped customers achieve their business goals on a variety of ML use cases, ranging from setting up MLOps pipelines to developing a fraud detection application. Whenever he’s not working, he enjoys doing physical exercises, listening to podcasts, or traveling.

Marco Geiger is a Machine Learning Engineer at AWS Professional Services based in Zurich. He works with customers from various industries to develop machine learning solutions that use the power of data to achieve business goals and innovate on behalf of the customer. Besides work, Marco is a passionate hiker, mountain biker, soccer player, and hobby barista.

Andrew Odendaal is a Senior DevOps Consultant at AWS Professional Services based in Dubai. He works across a wide range of customers and industries to bridge the gap between software and operations teams and provides guidance and best practices for senior management when he’s not busy automating something. Outside of work, Andrew is a family man who loves nothing more than a binge-watching marathon with some good coffee on tap.
