Many organizations are building generative AI applications powered by large language models (LLMs) to boost productivity and build differentiated experiences. These LLMs are large and complex, so deploying them requires powerful computing resources and leads to high inference costs. For businesses and researchers with limited resources, the high inference cost of generative AI models can be a barrier to entering the market, so more efficient and cost-effective solutions are needed. Most generative AI use cases involve human interaction, which requires AI accelerators that can deliver real-time responses with low latency. At the same time, the pace of innovation in generative AI is increasing, and it's becoming more challenging for developers and researchers to quickly evaluate and adopt new models to keep pace with the market.

One of the ways to get started with LLMs such as Llama and Mistral is to use Amazon Bedrock. However, customers who want to deploy LLMs in their own self-managed workflows, for greater control and flexibility over the underlying resources, can run these LLMs optimized on AWS Inferentia2-powered Amazon Elastic Compute Cloud (Amazon EC2) Inf2 instances. In this blog post, we introduce how to use an Amazon EC2 Inf2 instance to cost-effectively deploy multiple industry-leading LLMs on AWS Inferentia2, a purpose-built AWS AI chip. This helps customers quickly test the models and expose an API interface that supports both performance benchmarking and downstream application calls.

Model introduction

There are many popular open source LLMs to choose from. For this blog post, we review three different use cases based on model expertise, using Meta-Llama-3-8B-Instruct, Mistral-7B-instruct-v0.2, and CodeLlama-7b-instruct-hf.

| Model name | Release company | Number of parameters | Release date | Model capabilities |
| --- | --- | --- | --- | --- |
| Meta-Llama-3-8B-Instruct | Meta | 8 billion | April 2024 | Language understanding, translation, code generation, reasoning, chat |
| Mistral-7B-Instruct-v0.2 | Mistral AI | 7.3 billion | March 2024 | Language understanding, translation, code generation, reasoning, chat |
| CodeLlama-7b-Instruct-hf | Meta | 7 billion | August 2023 | Code generation, code completion, chat |

Meta-Llama-3-8B-Instruct is a popular language model released by Meta AI in April 2024. Llama 3 improves on its predecessors in pre-training, instruction following, output generation, coding, reasoning, and math. The Meta AI team says that Llama 3 has the potential to start a new wave of innovation in AI. Llama 3 is available in two publicly released sizes, 8B and 70B. At the time of writing, Llama 3.1 instruction-tuned models are available in 8B, 70B, and 405B sizes. In this blog post, we use the Meta-Llama-3-8B-Instruct model, but the same process can be followed for Llama 3.1 models.

Mistral-7B-instruct-v0.2, released by Mistral AI in March 2024, marks a major milestone in the development of publicly available foundation models. With its strong performance, efficient architecture, and wide range of features, Mistral 7B v0.2 sets a new standard for user-friendly and powerful AI tools. The model excels at tasks ranging from natural language processing to coding, making it a valuable resource for researchers, developers, and businesses. In this blog post, we use the Mistral-7B-instruct-v0.2 model, but the same process can be followed for the Mistral-7B-instruct-v0.3 model.

CodeLlama-7b-instruct-hf is part of a collection of models published by Meta AI. It is an LLM that uses text prompts to generate code. Code Llama targets coding tasks, making developers' workflows faster and more efficient and lowering the learning curve for new programmers. Code Llama can serve as a productivity and educational tool that helps programmers write more powerful and well-documented software.

Solution architecture

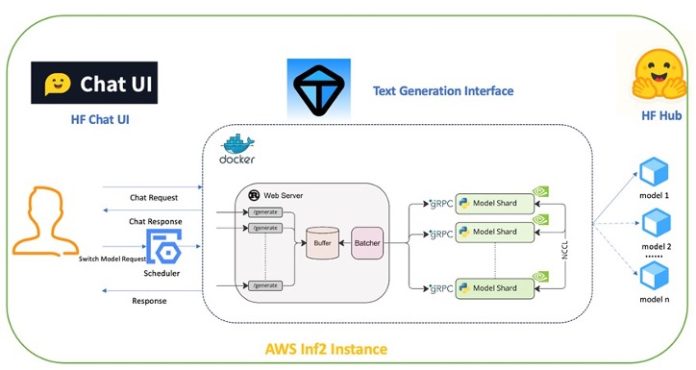

The solution uses a client-server architecture. On the client side, Hugging Face Chat UI provides a chat page that can be accessed from a PC or mobile device. Server-side model inference uses Hugging Face's Text Generation Inference, an efficient LLM inference framework that runs in a Docker container. We pre-compiled the models using Hugging Face's Optimum Neuron and uploaded the compilation results to the Hugging Face Hub. We also added a model switching mechanism to Hugging Face Chat UI that uses a scheduler (Scheduler) to control which model is loaded in the Text Generation Inference container.

Solution highlights

- All components are deployed on a single-chip Inf2 instance (inf2.xlarge or inf2.8xlarge), so users can try multiple models on one instance.

- Because of the client-server architecture, users can replace either the client or the server side to fit their actual needs. For example, the model can be deployed in Amazon SageMaker, and the frontend Chat UI can be deployed on a Node server. To simplify the demonstration, we deployed both the front end and the back end on the same Inf2 server.

- Because the solution uses publicly available frameworks, users can customize the frontend pages or models to fit their own needs.

- The Text Generation Inference API gives users quick programmatic access to the models.

- Deployment uses AWS CloudFormation, which makes it suitable for all kinds of businesses and for developers across the enterprise.

Main components

The following are the main components of the solution.

Hugging Face Optimum Neuron

Optimum Neuron is an interface between the Hugging Face Transformers library and the AWS Neuron SDK. It provides a set of tools for model loading, training, and inference on single- and multi-accelerator setups for different downstream tasks. In this post, we mainly used Optimum Neuron's export interface. To deploy a Hugging Face Transformers model on Neuron devices, the model must be compiled and exported to a serialized format before inference is performed. The export interface compiles the model ahead of time (AOT) with the Neuron compiler (neuronx-cc) and converts it into a serialized, optimized TorchScript module. This is shown in the following figure.
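To make the export step more concrete, here is a minimal sketch of an ahead-of-time export with Optimum Neuron's `NeuronModelForCausalLM.from_pretrained(..., export=True)`. The input shapes (`batch_size`, `sequence_length`) and compiler arguments (`num_cores`, `auto_cast_type`) follow the pattern in the Optimum Neuron export documentation as we understand it, but the specific values, and the helper names `build_export_kwargs` and `export_to_neuron`, are illustrative assumptions rather than the settings the authors used. Check the Export a model to Inferentia guide for the version you install.

```python
from typing import Any, Dict


def build_export_kwargs(
    batch_size: int = 1,
    sequence_length: int = 4096,
    num_cores: int = 2,
    auto_cast_type: str = "fp16",
) -> Dict[str, Any]:
    """Collect the static input shapes and compiler arguments for an AOT export.

    The default values are illustrative assumptions, not the settings used in this post.
    """
    for name, value in (("batch_size", batch_size),
                        ("sequence_length", sequence_length),
                        ("num_cores", num_cores)):
        if value < 1:
            raise ValueError(f"{name} must be a positive integer, got {value}")
    # Neuron compiles for fixed shapes, so these must be known before inference.
    input_shapes = {"batch_size": batch_size, "sequence_length": sequence_length}
    # num_cores=2 shards the model across the two NeuronCores of one Inferentia2 chip.
    compiler_args = {"num_cores": num_cores, "auto_cast_type": auto_cast_type}
    return {**input_shapes, **compiler_args}


def export_to_neuron(model_id: str, save_dir: str, **export_kwargs: Any) -> None:
    """Compile a Hugging Face causal LM with neuronx-cc and save the artifacts."""
    # Imported lazily so the helper above works on machines without the Neuron SDK.
    from optimum.neuron import NeuronModelForCausalLM

    # export=True compiles the model ahead of time for the given static shapes.
    model = NeuronModelForCausalLM.from_pretrained(model_id, export=True, **export_kwargs)
    model.save_pretrained(save_dir)
```

On an Inf2 instance with optimum-neuron installed, a call such as `export_to_neuron("meta-llama/Meta-Llama-3-8B-Instruct", "llama3_neuron", **build_export_kwargs())` would compile the model (the output directory name is hypothetical, and gated models need a Hugging Face token). The post uploads the compiled results to the Hugging Face Hub; that upload step is not shown here.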

During compilation, we used tensor parallelism to split the weights, data, and computation across the two NeuronCores. For more compilation parameters, see Export a model to Inferentia.
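The idea behind splitting weights across two NeuronCores can be shown with a toy example. The sketch below implements column-wise tensor parallelism for a single linear layer in plain Python: each "core" holds half of the weight columns, computes its slice of the output independently, and concatenating the slices reproduces the full product. This is only an illustration of the general technique under simplifying assumptions; it is not how the Neuron SDK actually partitions a transformer, where attention heads are also sharded and some layers need collective communication such as all-reduce.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain row-by-column matrix product a @ b."""
    inner = len(b)
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def split_columns(w: Matrix, shards: int) -> List[Matrix]:
    """Split a weight matrix column-wise into equal shards, one per core."""
    cols = len(w[0])
    if cols % shards != 0:
        raise ValueError("output dimension must be divisible by the shard count")
    step = cols // shards
    return [[row[s * step:(s + 1) * step] for row in w] for s in range(shards)]


def column_parallel_linear(x: Matrix, w: Matrix, shards: int = 2) -> Matrix:
    """Each shard produces a slice of the output columns; concatenating the
    slices row by row reproduces the unsharded product x @ w."""
    partials = [matmul(x, w_shard) for w_shard in split_columns(w, shards)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[1, 2]]
    w = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
    print(column_parallel_linear(x, w))  # [[11, 14, 17, 20]]
    assert column_parallel_linear(x, w) == matmul(x, w)
```

Because each shard needs only its own columns of `w`, each core stores half of the layer's weights, which is what lets a model that is too large for one NeuronCore's memory fit across two.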

Hugging Face's Text Generation Inference (TGI)

Text Generation Inference (TGI) is a framework written in Rust and Python for deploying and serving LLMs. TGI provides high-performance text generation for the most popular publicly available foundation LLMs. Its main features are:

- A simple launcher that provides inference services for many LLMs

- Support for both generate and stream interfaces

- Token streaming using server-sent events (SSE)

- Support for AWS Inferentia, AWS Trainium, NVIDIA GPUs, and other accelerators
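To show what token streaming over SSE looks like from a client, here is a minimal sketch. It assumes each streamed event arrives as a line of the form `data:{"token": {"text": ..., "special": ...}, ...}`, which matches TGI's `/generate_stream` output as we understand it; the function names are our own. The parser is separated from the HTTP call so it can be tried without a running server, and `requests` is imported only when the live call is made.

```python
import json
from typing import Iterable, Iterator


def iter_sse_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text of each non-special token from TGI-style SSE lines.

    Each event is assumed to look like: data:{"token": {"text": "...", "special": false}, ...}
    """
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # blank separators, comments, or other SSE fields
        event = json.loads(line[len("data:"):])
        token = event.get("token") or {}
        if not token.get("special", False):
            yield token.get("text", "")


def stream_generate(base_url: str, prompt: str, max_new_tokens: int = 200) -> Iterator[str]:
    """POST to /generate_stream and yield tokens as the server sends them."""
    import requests  # imported lazily; only needed for the live call

    resp = requests.post(
        f"{base_url}/generate_stream",
        json={"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}},
        stream=True,  # keep the connection open and read events incrementally
        timeout=60,
    )
    resp.raise_for_status()
    # Decode as UTF-8 ourselves so multi-byte characters survive intact.
    yield from iter_sse_tokens(raw.decode("utf-8") for raw in resp.iter_lines())
```

Printing each token as it arrives, for example `for t in stream_generate("http://127.0.0.1:8080", "Hello"): print(t, end="", flush=True)` (the base URL is a placeholder), gives the incremental output that makes long answers feel responsive.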

Hugging Face Chat UI

Hugging Face Chat UI is an open-source chat tool built with SvelteKit that can be deployed to Cloudflare, Netlify, Node, and so on. It has the following main features:

- The page can be customized

- Conversations can be saved, and chat records are stored in MongoDB

- Works on both PC and mobile devices

- The backend can connect to Text Generation Inference and supports APIs such as Anthropic, Amazon SageMaker, and Cohere

- Compatible with various publicly available foundation models (Llama series, Mistral/Mixtral series, Falcon, and so on)

Thanks to the page customization capabilities of Hugging Face Chat UI, we added a model switching function, so users can switch between different models on the same EC2 Inf2 instance.

Solution deployment

1. Before deploying the solution, make sure you have an inf2.xlarge or inf2.8xlarge usage quota in the us-east-1 (N. Virginia) or us-west-2 (Oregon) AWS Region. See the reference link for how to apply for a quota.
2. Sign in to the AWS Management Console and switch the Region to us-east-1 (N. Virginia) or us-west-2 (Oregon) in the upper right corner of the console page.
3. Enter `CloudFormation` in the service search box and choose Create stack.
4. Select Choose an existing template, and then select Amazon S3 URL.
5. If you plan to use an existing virtual private cloud (VPC), follow the steps in a; if you plan to create a new VPC for the deployment, follow the steps in b.
    - a. Use an existing VPC.
        - Enter `https://zz-common.s3.amazonaws.com/tmp/tgiui/20240501/launch_server_default_vpc_ubuntu22.04.yaml` in the Amazon S3 URL field.
        - Stack name: Enter the stack name.
        - InstanceType: Select inf2.xlarge (lower cost) or inf2.8xlarge (better performance).
        - KeyPairName (optional): If you want to sign in to the Inf2 instance, enter the key pair name.
        - VpcId: Select the VPC.
        - PublicSubnetId: Select a public subnet.
        - VolumeSize: Enter the size of the EC2 instance's EBS storage volume. The minimum value is 80 GB.
        - Choose Next, then Next again. Choose Submit.
    - b. Create a new VPC.
        - Enter `https://zz-common.s3.amazonaws.com/tmp/tgiui/20240501/launch_server_new_vpc_ubuntu22.04.yaml` in the Amazon S3 URL field.
        - Stack name: Enter the stack name.
        - InstanceType: Select inf2.xlarge or inf2.8xlarge.
        - KeyPairName (optional): If you want to sign in to the Inf2 instance, enter the key pair name.
        - VpcId: Leave as New.
        - PublicSubnetId: Leave as New.
        - VolumeSize: Enter the size of the EC2 instance's EBS storage volume. The minimum value is 80 GB.
        - Choose Next, and then Next again. Then choose Submit.
6. After creating the stack, wait for the resources to be created and started (about 15 minutes). When the stack status is displayed as `CREATE_COMPLETE`, choose Outputs, and open the URL shown as the value for the key Public endpoint for the web server (close all VPN connections and firewall programs first).
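If you prefer scripting the deployment to clicking through the console, the same stack can in principle be created with boto3. The sketch below is a hedged example, not part of the original solution: it maps the console fields from step b to CloudFormation parameters and uses the real boto3 calls `create_stack`, the `stack_create_complete` waiter, and `describe_stacks`. It assumes that `inf2.xlarge` is an allowed `InstanceType` value in the template, that omitting `VpcId` and `PublicSubnetId` falls back to the template's "New" defaults, and that the template needs no IAM capabilities (if it creates IAM resources, `create_stack` also needs a `Capabilities` argument). The helper names are hypothetical.

```python
from typing import Dict, List, Optional

# Template URL from step b above (stack that creates a new VPC).
NEW_VPC_TEMPLATE_URL = (
    "https://zz-common.s3.amazonaws.com/tmp/tgiui/20240501/"
    "launch_server_new_vpc_ubuntu22.04.yaml"
)


def build_parameters(
    instance_type: str = "inf2.xlarge",
    volume_size_gb: int = 80,
    key_pair_name: Optional[str] = None,
) -> List[Dict[str, str]]:
    """Map the console fields to CloudFormation ParameterKey/ParameterValue pairs.

    VpcId and PublicSubnetId are omitted on the assumption that the template's
    defaults correspond to "New".
    """
    if volume_size_gb < 80:
        raise ValueError("VolumeSize must be at least 80 GB")
    values = {"InstanceType": instance_type, "VolumeSize": str(volume_size_gb)}
    if key_pair_name:
        values["KeyPairName"] = key_pair_name
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in values.items()]


def deploy_stack(stack_name: str, region: str, parameters: List[Dict[str, str]]) -> Dict[str, str]:
    """Create the stack, wait for CREATE_COMPLETE, and return its outputs."""
    import boto3  # imported lazily; requires AWS credentials at call time

    cfn = boto3.client("cloudformation", region_name=region)
    cfn.create_stack(
        StackName=stack_name,
        TemplateURL=NEW_VPC_TEMPLATE_URL,
        Parameters=parameters,
    )
    # Blocks until the stack reaches CREATE_COMPLETE (raises if creation fails).
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    stack = cfn.describe_stacks(StackName=stack_name)["Stacks"][0]
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}
```

For example, `deploy_stack("llm-inf2-demo", "us-east-1", build_parameters())` (the stack name is hypothetical) returns the stack outputs as a dictionary. The exact output key behind "Public endpoint for the web server" is not given in the post, so inspect the returned keys.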

User interface

After the solution is deployed, users can open the preceding URL on a PC or mobile phone. The Llama3-8B model is loaded by default. To switch models, open the menu settings, select the name of the model you want to activate in the model list, and choose Activate. Switching models requires reloading the new model into the Inferentia2 accelerator memory, which takes about 1 minute. During this process, users can check the loading status of the new model by choosing Retrieve model status. When the status is Available, the new model has been loaded successfully.
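Scripts that call the API directly cannot press Retrieve model status, so they need another way to wait out the roughly 1-minute reload. The sketch below is one hedged option: it polls TGI's `GET /info` endpoint, which reports a `model_id`, until the expected model appears or a timeout expires. It assumes the custom scheduler restarts TGI in a way that makes `/info` reflect the newly loaded model, and the model IDs of the authors' pre-compiled models are not given in the post, so any ID you pass is a placeholder. The status getter, sleep, and clock are injectable so the polling logic can be tested without a server.

```python
import json
import time
import urllib.request
from typing import Callable, Optional


def fetch_model_id(base_url: str, timeout: float = 5.0) -> Optional[str]:
    """Return the model_id reported by TGI's GET /info, or None if the
    server is not answering yet (for example, while a model is loading)."""
    try:
        with urllib.request.urlopen(f"{base_url}/info", timeout=timeout) as resp:
            return json.load(resp).get("model_id")
    except (OSError, ValueError):
        # URLError and timeouts are OSError subclasses; bad JSON is a ValueError.
        return None


def wait_for_model(
    expected_model_id: str,
    get_model_id: Callable[[], Optional[str]],
    timeout_s: float = 180.0,
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll until the server reports the expected model or the timeout expires."""
    deadline = clock() + timeout_s
    while True:
        if get_model_id() == expected_model_id:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

A typical call would be `wait_for_model("<model id>", lambda: fetch_model_id("http://127.0.0.1:8080"))`, where both the model ID and the base URL are placeholders for your deployment.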

The results of the different models are shown in the following figure:

The following figures show the solution in a browser on a PC:

API interface and performance testing

The solution uses a Text Generation Inference server, which supports the /generate and /generate_stream interfaces and listens on port 8080 by default. You can make API calls by replacing

The /generate interface returns the entire response to the client at once, after the server has generated all tokens.

```bash
curl :8080/generate \
    -X POST \
    -d '{"inputs": "Calculate the distance from Beijing to Shanghai"}' \
    -H 'Content-Type: application/json'
```

The /generate_stream interface returns tokens one by one as they are generated, which reduces perceived waiting time and improves the user experience when the model output is relatively long.

```bash
curl :8080/generate_stream \
    -X POST \
    -d '{"inputs": "Write an essay on the mental health of elementary school students in no more than 300 words."}' \
    -H 'Content-Type: application/json'
```

Here is sample code that calls the API with the requests library in Python.

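```python
import requests

# Non-streaming request to the TGI /generate endpoint (port 8080 by default)
url = "http://:8080/generate"
headers = {"Content-Type": "application/json"}
data = {
    "inputs": "Calculate the distance from Beijing to Shanghai",
    # Cap the length of the generated answer
    "parameters": {"max_new_tokens": 200},
}

response = requests.post(url, headers=headers, json=data)
response.raise_for_status()  # surface HTTP errors instead of printing an error body
print(response.text)
```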
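The post mentions performance benchmarking but does not show a benchmark script. As a rough starting point, the sketch below sends prompts one at a time, records end-to-end latency per request, and reports p50/p90 latency and generated tokens per second. It assumes TGI's `details` request parameter, which adds a `details.generated_tokens` count to the `/generate` response; the function names are our own. Because requests are sequential, this measures single-stream latency, not the throughput the server can sustain under concurrent load.

```python
import json
import math
import time
import urllib.request
from typing import Callable, Dict, List, Sequence


def call_generate(base_url: str, prompt: str, max_new_tokens: int = 200) -> int:
    """POST one prompt to /generate and return how many tokens were generated.

    Relies on TGI's "details" parameter, which adds token counts to the response.
    """
    body = json.dumps({
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "details": True},
    }).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["details"]["generated_tokens"]


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty sequence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def benchmark(
    send: Callable[[str], int],
    prompts: Sequence[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Dict[str, float]:
    """Send prompts one at a time and summarize latency and token throughput."""
    if not prompts:
        raise ValueError("need at least one prompt")
    latencies: List[float] = []
    total_tokens = 0
    for prompt in prompts:
        start = clock()
        total_tokens += send(prompt)
        latencies.append(clock() - start)
    busy_time = sum(latencies)
    return {
        "requests": float(len(prompts)),
        "p50_latency_s": percentile(latencies, 50),
        "p90_latency_s": percentile(latencies, 90),
        "tokens_per_s": total_tokens / busy_time if busy_time > 0 else 0.0,
    }
```

Against a running instance, `benchmark(lambda p: call_generate("http://127.0.0.1:8080", p), prompts)` would benchmark the active model (the base URL is a placeholder). Running it once per activated model gives a simple side-by-side comparison of the three models.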

Summary

In this blog post, we introduced methods and examples for deploying popular LLMs on AWS AI chips, so that users can quickly experience the productivity improvements that LLMs provide. Models deployed on Inf2 instances have been validated by multiple users and in multiple scenarios, showing strong performance and wide applicability. AWS continues to expand its application scenarios and features to provide users with efficient and economical computing capabilities. See Inf2 Inference Performance to check the types and list of models supported on the Inferentia2 chip. Contact us to give feedback on your needs or ask questions about deploying LLMs on AWS AI chips.

References

About the authors

Zheng Zhang is a technical expert for Amazon Web Services machine learning products, focusing on Amazon Web Services-based accelerated computing and GPU instances. He has extensive experience in large-scale model training and inference acceleration in machine learning.

Bingyang Huang is a Go-To-Market Specialist for Accelerated Computing on the GCR SSO GenAI team. She has experience deploying AI accelerators in customers' production environments. Outside of work, she enjoys watching movies and exploring good food.

Tian Shi is a Senior Solutions Architect at Amazon Web Services. He has extensive experience in cloud computing, data analysis, and machine learning and currently focuses on research and practice in data science, machine learning, and serverless. His translations include Machine Learning as a Service, DevOps Practices Based on Kubernetes, Practical Kubernetes Microservices, Prometheus Monitoring Practice, and CoreDNS Study Guide in the Cloud Native Era.

Chuan Xie is a Senior Solutions Architect for Generative AI at Amazon Web Services, responsible for the design, implementation, and optimization of generative artificial intelligence solutions on the AWS Cloud. River has many years of production and research experience in the communications, ecommerce, internet, and other industries, and extensive practical experience in data science, recommendation systems, LLM RAG, and more. He holds multiple AI-related product and technology invention patents.
